- Artificial Intelligence, Machine Learning, Python, Web Scraping: Feedbacks/Queries -

Artificial Intelligence

Machine Learning Methods and Scripts

Human Brain: Have you tried to search online the keywords "number of neurons in the brain"? The answer is invariably 100 billions! There is another data that we use only 1% of brain and hence do the number 1 billion neurons not astonish you? Can we build a machine and the learning algorithm to deal with similar number of neurons?

Table of Contents

- Regression and Logistic Regression (GNU OCTAVE)

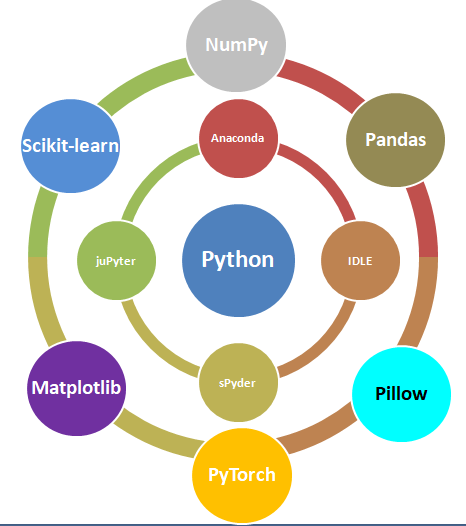

- Python install / update -|- OCTAVE vs. Python -|- Python Argument Parsing | Vectorization | Arrays / Slicing of Arrays || OOP in Python -|- Web Scraping in Python -|- Tuple and Dictionaries || Create Animations using Python *!* Scale all images in a folder |:| Pandas |-| File Operations in Python

- Linear Algebra ---Principal Component Analysis - PCA (GNU OCTAVE)

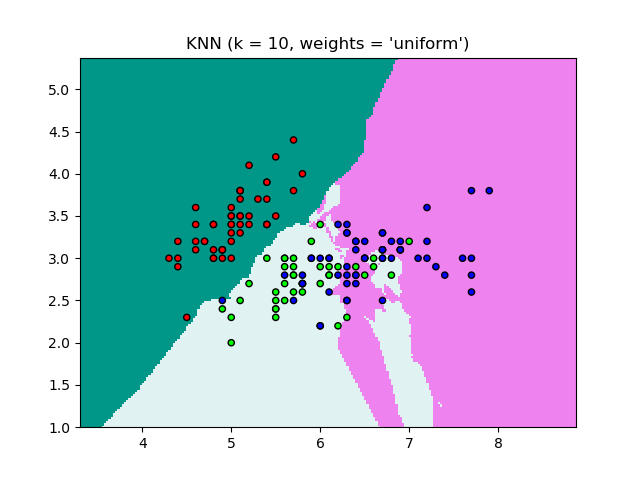

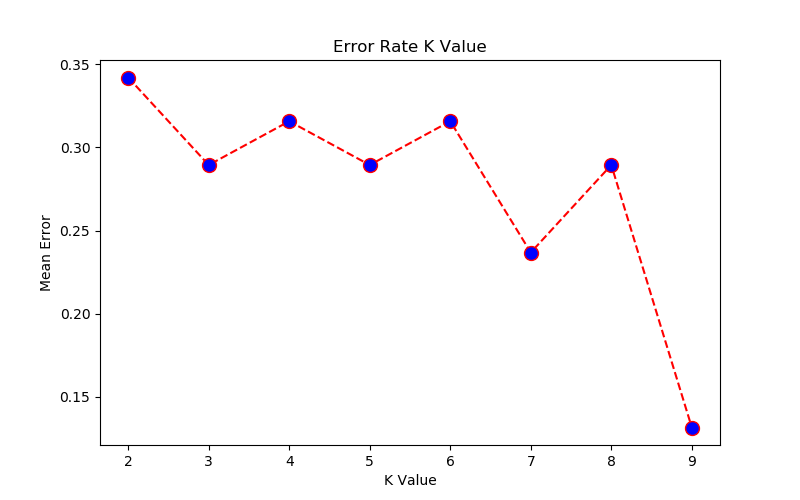

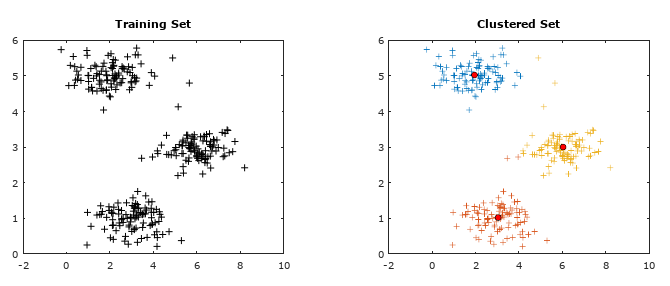

- K-Nearest Neighbours (KNN) using Python + sciKit-Learn --- clustering by K-means (GNU OCTAVE) ---Probability in Machine Learning

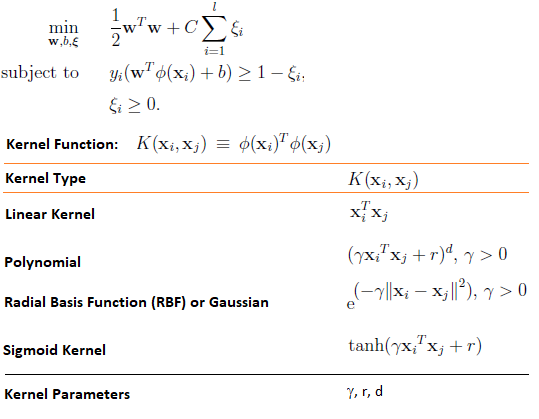

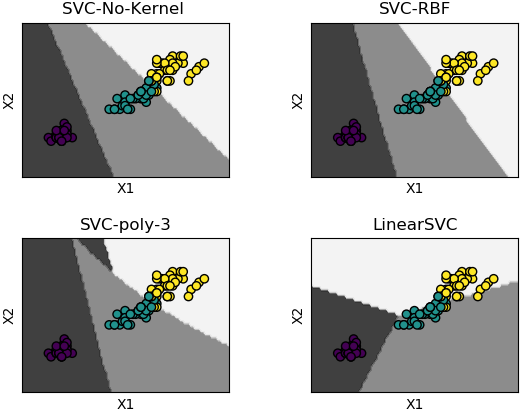

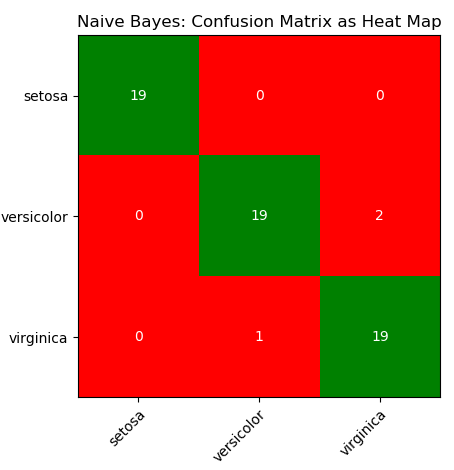

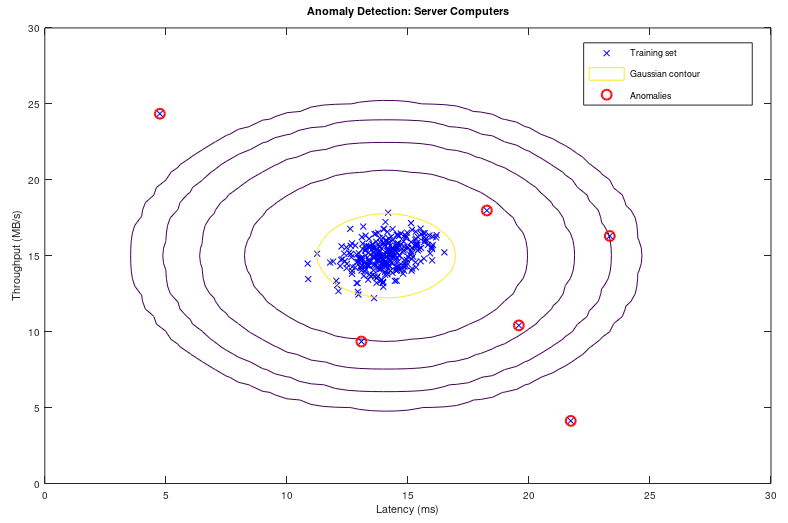

- SVM using Python + sciKit-Learn --- Naive Bayes classification --- Anomaly Detection ---Recommender Systems and Collaborative Filtering

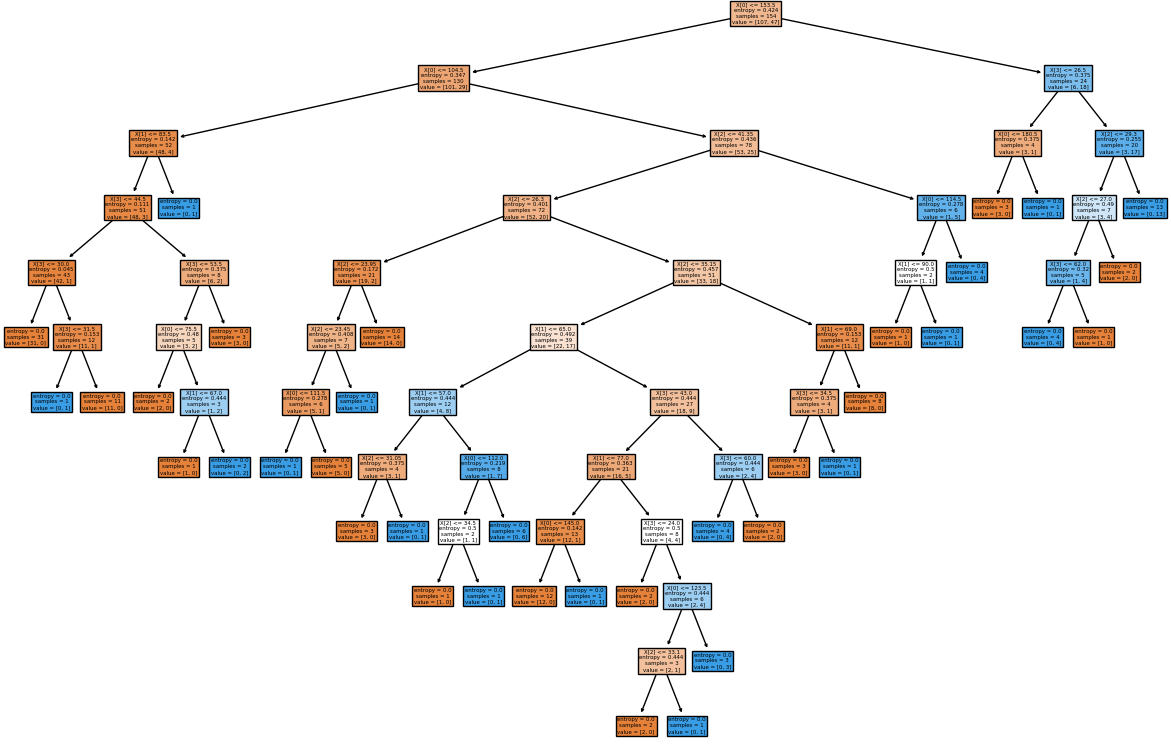

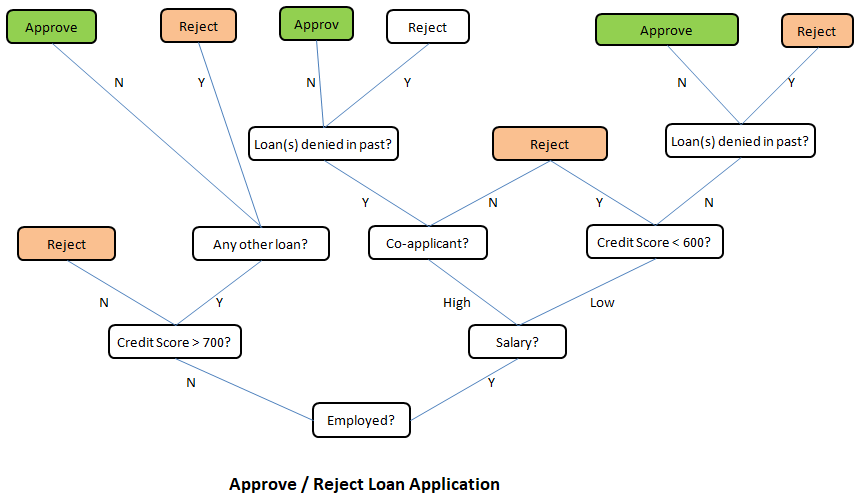

- Decision Tree / Random Forest Classification with Python + sciKit-Learn

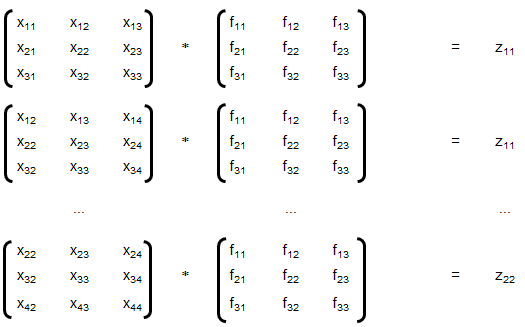

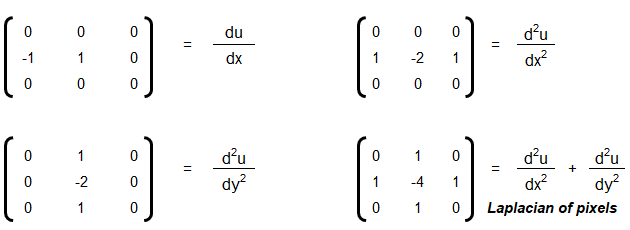

- Convolution, Edge Detection, Sobel kernel, Smoothen, Sharpen and Intensify Images

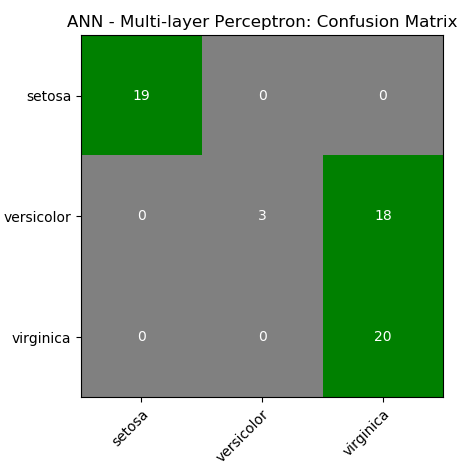

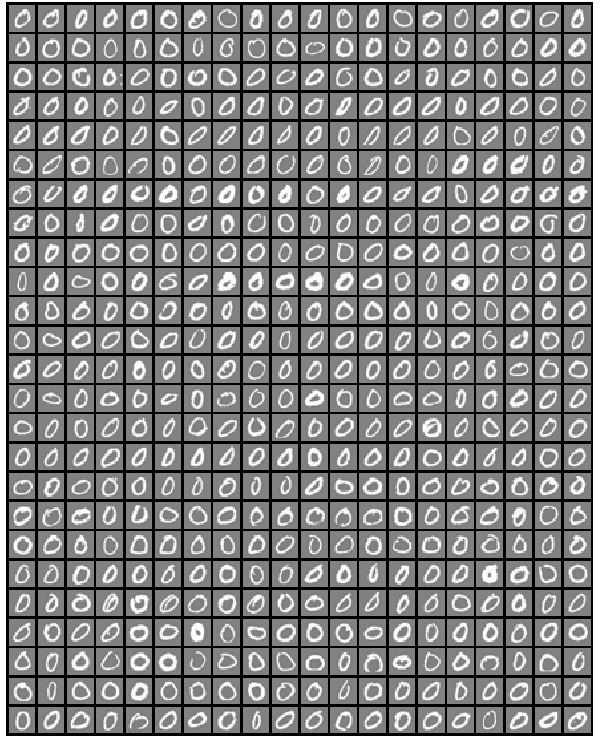

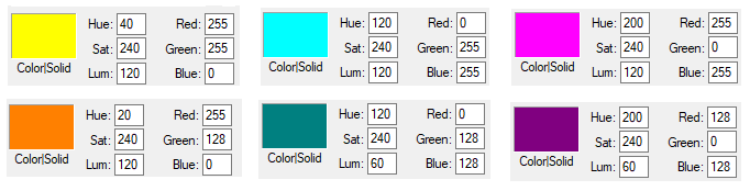

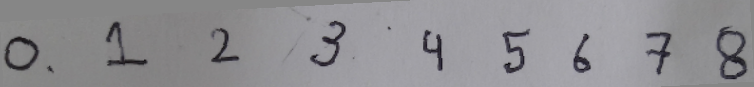

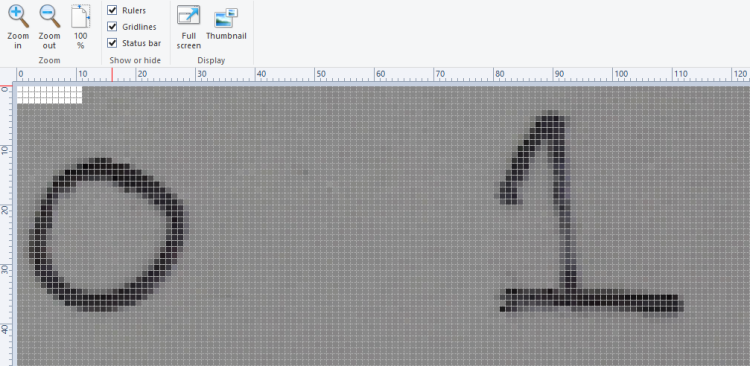

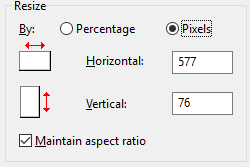

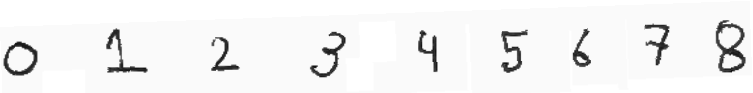

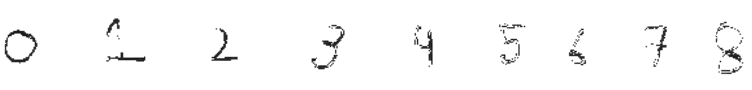

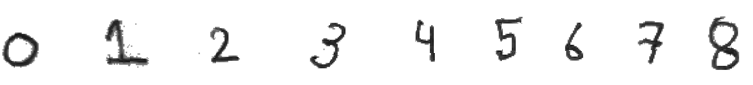

- Pixels, DPI, PPI and Screen Resolution |:| Digit Recognition and ANN MLP classifications --- Computer Vision | Image Editing using ImageMagick |:| Unsharp Mask [] Image Denoising using ML |*| Rasterize and Vectorize

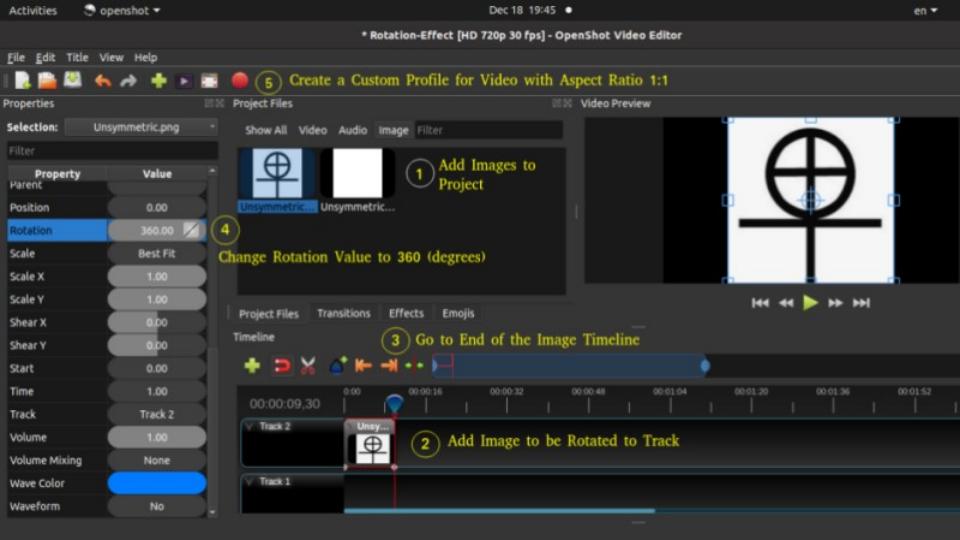

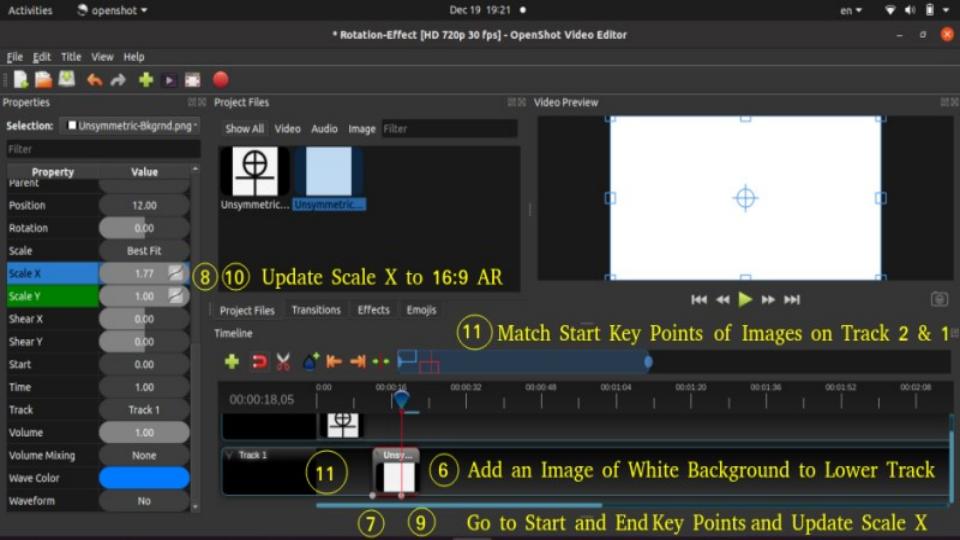

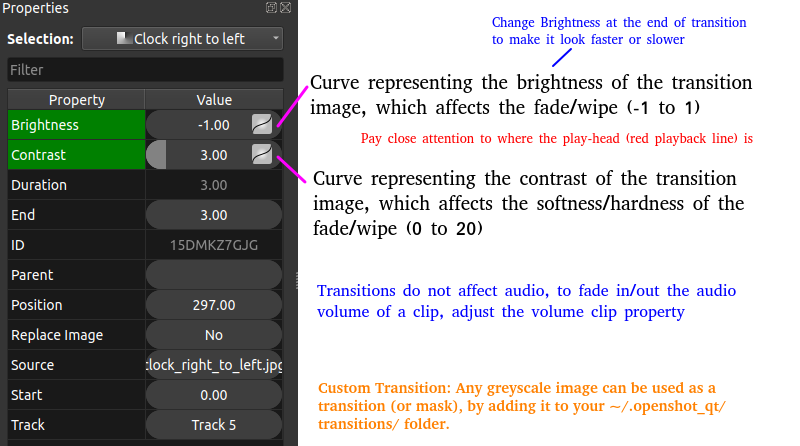

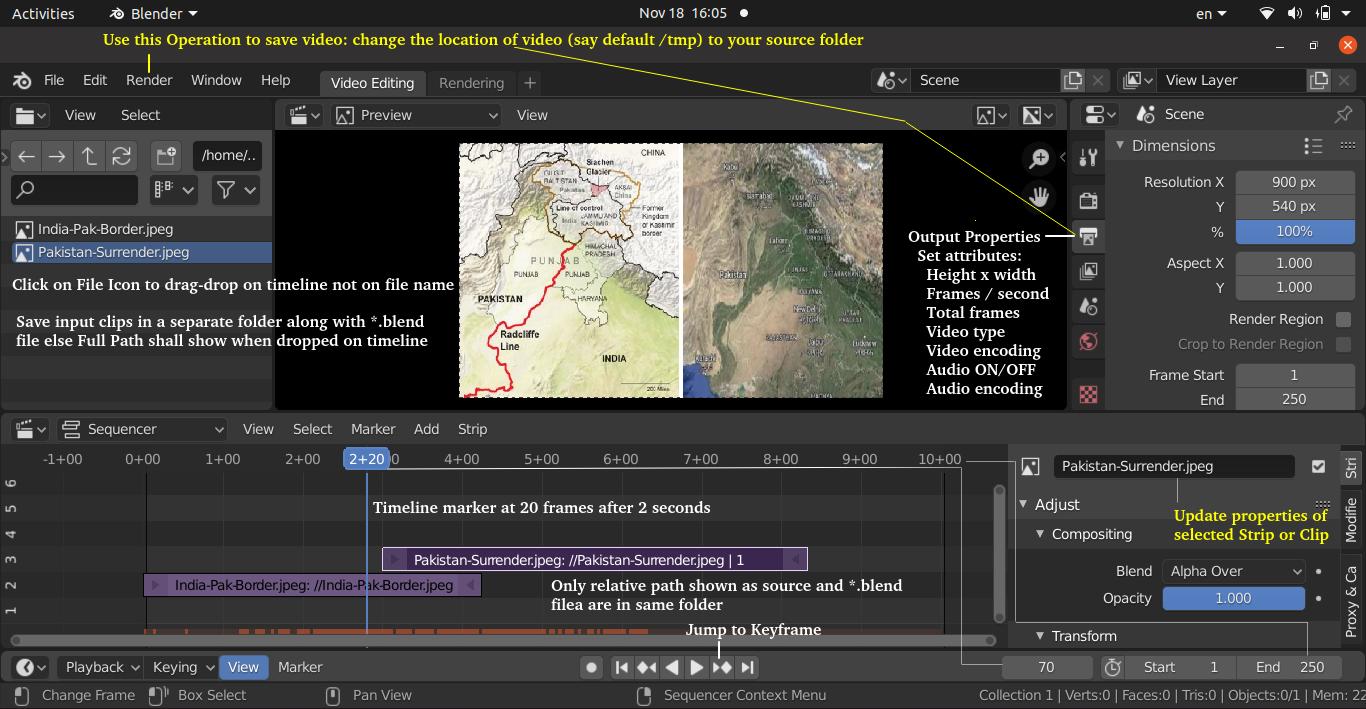

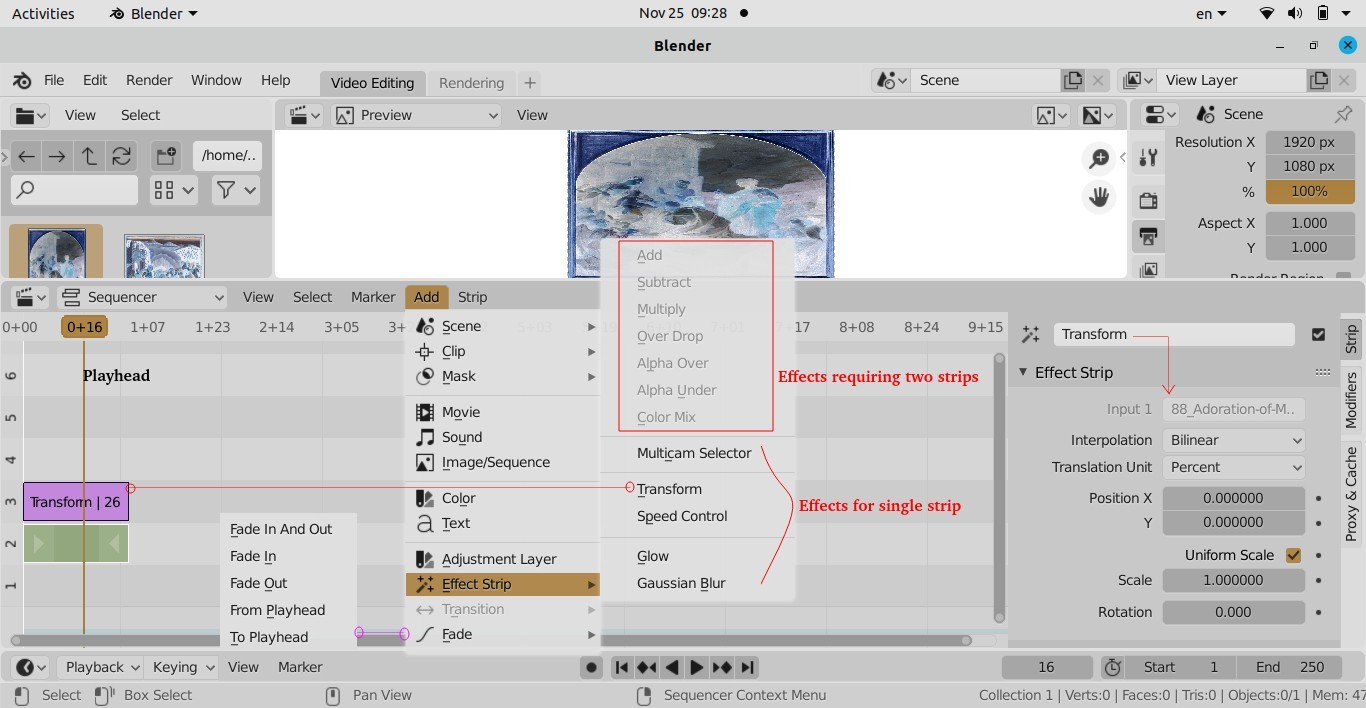

- Video Editing --- Image Filtering, Masking and Denoising ---Image Deskewing -|- Audio and Video Codecs | Video Editing using FFmpeg | Simple Rescaling | Create Videos from Images |:| Timeline editing and trimming |:| Crop videos | Overlay videos |:| Concatendate videos | Pillarboxing: add padding to videos |-| Color Effects |:| Freeze Effect [] Overlay videos using MoviePy

- Animations like PowerPoint |:| Box Transition Effect !! Cube Transition |=| Arrow Transition

- Morphological Operations | Connected Component Labeling: CCL --- Find and Draw Contours in an Image

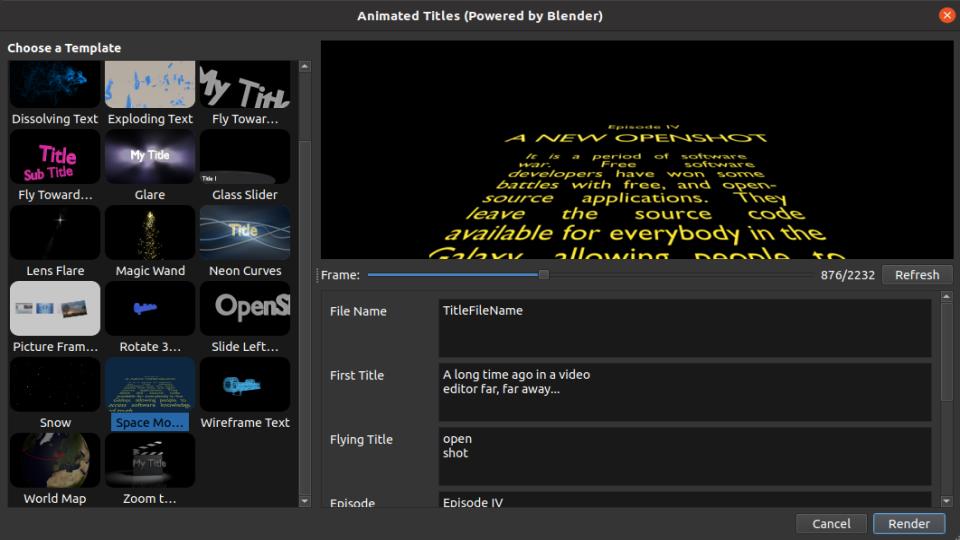

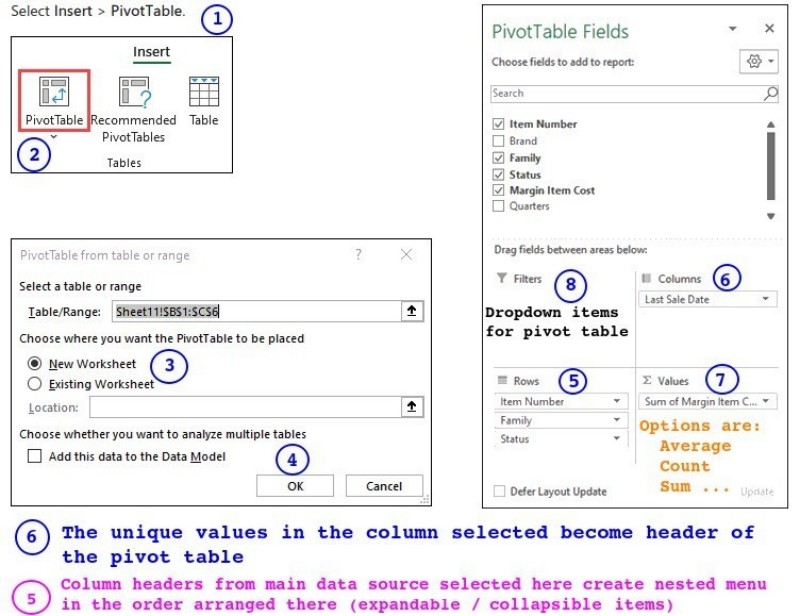

- Animations using Blender -|- Image and Video Editing using OpenCV |+| PowerBI and Pivot Table

What to Expect On This Page

Each statement is commented so that you easily connect with the code and the function of each module - remember one does not need to understand everything at the foundational level - e.g. the linear algebra behind each algorithm or optimization operations! The best way is to find a data, a working example script and fiddle with them.

✍Machine learning, artificial intelligence, cognitive computing, deep learning... are emerging and dominant conversations today all based on one fundamental truth - follow the data. In contrast to explicit (and somewhat static) programming, machine learning uses many algorithms that iteratively learn from data to improve, interpret the data and finally predict outcomes. In other words: machine learning is the science of getting computers to act without being explicitly programmed every time a new information is received.

An excerpt from Machine Learning For Dummies, IBM Limited Edition: "AI and machine learning algorithms aren't new. The field of AI dates back to the 1950s. Arthur Lee Samuels, an IBM researcher, developed one of the earliest machine learning programs - a self-learning program for playing checkers. In fact, he coined the term machine learning. His approach to machine learning was explained in a paper published in the IBM Journal of Research and Development in 1959". There are other topics of discussion such as Chinese Room Argument to question whether a program can give a computer a 'mind, 'understanding' and / or 'consciousness'. This is to check the validity of Turing test developed by Alan Turing in 1950. Turing test is used to determine whether or not computer (or machines) can think (intelligently) like humans.

The technical and business newspapers/journals are full of references to "Big Data". For business, it usually refers to the information that is capture or collected by the computer systems installed to facilitate and monitor various transactions. Online stores as well as traditional bricks-and-mortar retail stores generate wide streams of data. Big data can be and are overwhelming consisting of data table with millions of rows and hundreds if not thousands of columns. Not all transactional data are relevant though! BiG data are not just big but very often problematic too - containing missing data, information pretending to be numbers and outliers.

◎Data Management

Data management is art of getting useful information from raw data generated within the business process or collected from external sources. This is known as data science and/or data analytics and/or big data analysis. Paradoxically, the most powerful growth engine to deal with technology is the technology itself. The internet age has given data too much to handle and everybody seems to be drowning in it. Data may not always end up in useful information and a higher probability exists for it to become a distraction. Machine learning is related concept which deals with Logistic Regression, Support Vector Machines (SVM), k-Nearest-Neighbour (KNN) to name few methods.

Before one proceed further, let's try to recall how we were taught to make us what is designated as an 'educated or learned' person (we all have heard about literacy rate of a state, district and the country).

| Classical Learning Method | Example | Applicable to Machine Learning? |

| Instructions: repetition in all 3 modes - writing, visual and verbal | How alphabets and numerals look like | No |

| Rule | Counting, summation, multiplication, short-cuts, facts (divisibility rules...) | No |

| Mnemonics | Draw parallel from easy to comprehend subject to a tougher one: Principal (Main), Principle (Rule) | Yes |

| Analogy | Comparison: human metabolic system and internal combustion engines | No |

| Inductive reasoning and inferences | Algebra: sum of first n integers = n(n+1)/2, finding a next digit or alphabet in a sequence | Yes |

| Theorems | Trigonometry, coordinate geometry, calculus, linear algebra, physics, statistics | Yes |

| Memorizing (mugging) | Repeated speaking, writing, observing a phenomenon or words or sentences, meaning of proverbs | Yes |

| Logic and reasoning | What is right (appropriate) and wrong (inappropriate), interpolation, extrapolation | Yes |

| Reward and punishment | Encourage to act in a certain manner, discourage not to act in a certain manner | Yes |

| Identification, categorization and classification | Telling what is what! Can a person identify a potato if whatever he has seen in his life is the French fries? | Yes |

This is just a demonstration (using Python and scikit-learn) of one out of many machine learning methods which let users know what to expect as someone wants to dive deeper. One need not understand every line of the code though comments have been added to make the readers grab most out of it. The data in CSV format can be downloaded from here.

# CLASSIFICATION: 'DECISION TREE' USING PYTHON + SCIKIT-LEARN #On WIN10, python version 3.5 #Install scikit-learn: C:\WINDOWS\system32>py.exe -m pip install -U scikit-learn #pip install -r list.txt - install modules (1 per line) described in 'list.txt' # Decision Tree method is a 'supervised' classification algorithm. # Problem Statement: The task here is to predict whether a person is likely to # become diabetic or not based on 4 attributes: Glucose, BloodPressure, BMI, Age # Import numPy (mathematical utility) and Pandas (data management utility) import numpy as np import pandas as pd import matplotlib.pyplot as plt # Import train_test_split function from ML utility scikit-learn for Python from sklearn.model_selection import train_test_split #Import scikit-learn metrics module for accuracy calculation from sklearn import metrics #Confusion Matrix is used to understand the trained classifier behavior over the #input or labeled or test dataset from sklearn.metrics import confusion_matrix from sklearn.metrics import accuracy_score from sklearn.metrics import classification_report from sklearn import tree from sklearn.tree import DecisionTreeClassifier, plot_tree from sklearn.tree.export import export_text

# Import dataset: header=0 or header =[0,1] if top 2 rows are headers

df = pd.read_csv('diabetesRF.csv', sep=',', header='infer')

# Printing the dataset shape

print ("Dataset Length: ", len(df))

print ("Dataset Shape: ", df.shape)

print (df.columns[0:3])

# Printing the dataset observations

print ("Dataset: \n", df.head())

# Split the dataset after separating the target variable

# Feature matrix

X = df.values[:, 0:4] #Integer slicing: note columns 1 ~ 4 only (5 is excluded)

#To get columns C to E (unlike integer slicing, 'E' is included in the columns)

# Target variable (known output - note that it is a supervised algorithm)

Y = df.values[:, 4]

# Splitting the dataset into train and test

X_trn, X_tst, Y_trn, Y_tst = train_test_split(X, Y, test_size = 0.20,

random_state = 10)

#random_state: If int, random_state is the seed used by random number generator

#print(X_tst)

#test_size: if 'float', should be between 0.0 and 1.0 and represents proportion

#of the dataset to include in the test split. If 'int', represents the absolute

#number of test samples. If 'None', the value is set to the complement of the

#train size. If train_size is also 'None', it will be set to 0.25.

# Perform training with giniIndex. Gini Index is a metric to measure how often

# a randomly chosen element would be incorrectly identified (analogous to false

# positive and false negative outcomes).

# First step: #Create Decision Tree classifier object named clf_gini

clf_gini = DecisionTreeClassifier(criterion = "gini", random_state=100,

max_leaf_nodes=3, max_depth=None, min_samples_leaf=3)

#'max_leaf_nodes': Grow a tree with max_leaf_nodes in best-first fashion. Best

#nodes are defined as relative reduction in impurity. If 'None' then unlimited

#number of leaf nodes.

#max_depth = maximum depth of the tree. If None, then nodes are expanded until

#all leaves are pure or until all leaves contain < min_samples_split samples.

#min_samples_leaf = minimum number of samples required to be at a leaf node. A

#split point at any depth will only be considered if it leaves at least

#min_samples_leaf training samples in each of the left and right branches.

# Second step: train the model (fit training data) and create model gini_clf

gini_clf = clf_gini.fit(X_trn, Y_trn)

# Perform training with entropy, a measure of uncertainty of a random variable.

# It characterizes the impurity of an arbitrary collection of examples. The

# higher the entropy the more the information content.

clf_entropy = DecisionTreeClassifier(criterion="entropy", random_state=100,

max_depth=3, min_samples_leaf=5)

entropy_clf = clf_entropy.fit(X_trn, Y_trn)

# Make predictions with criteria as giniIndex or entropy and calculate accuracy

Y_prd = clf_gini.predict(X_tst)

#y_pred = clf_entropy.predict(X_tst)

#-------Print predicted value for debugging purposes ---------------------------

#print("Predicted values:")

#print(Y_prd)

print("Confusion Matrix for BINARY classification as per sciKit-Learn")

print(" TN | FP ")

print("-------------------")

print(" FN | TP ")

print(confusion_matrix(Y_tst, Y_prd))

# Print accuracy of the classification = [TP + TN] / [TP+TN+FP+FN]

print("Accuracy = {0:8.2f}".format(accuracy_score(Y_tst, Y_prd)*100))

print("Classification Report format for BINARY classifications")

# P R F S

# Precision Recall fl-Score Support

# Negatives (0) TN/[TN+FN] TN/[TN+FP] 2RP/[R+P] size-0 = TN + FP

# Positives (1) TP/[TP+FP] TP/[TP+FN] 2RP/[R+P] size-1 = FN + TP

# F-Score = harmonic mean of precision and recall - also known as the Sorensen–

# Dice coefficient or Dice similarity coefficient (DSC).

# Support = class support size (number of elements in each class).

print("Report: ", classification_report(Y_tst, Y_prd))

''' ---- some warning messages -------------- ------------- ---------- ----------

Undefined Metric Warning: Precision and F-score are ill-defined and being set to

0.0 in labels with no predicted samples.

- Method used to get the F score is from the "Classification" part of sklearn

- thus it is talking about "labels". This means that there is no "F-score" to

calculate for some label(s) and F-score for this case is considered to be 0.0.

'''

#from matplotlib.pyplot import figure

#figure(num=None, figsize=(11, 8), dpi=80, facecolor='w', edgecolor='k')

#figure(figsize=(1,1)) would create an 1x1 in image = 80x80 pixels as per given

#dpi argument.

plt.figure()

fig = plt.gcf()

fig.set_size_inches(15, 10)

clf = DecisionTreeClassifier().fit(X_tst, Y_tst)

plot_tree(clf, filled=True)

fig.savefig('./decisionTreeGraph.png', dpi=100)

#plt.show()

#---------------------- ------------------------ ----------------- ------------

#Alternate method to plot the decision tree is to use GraphViz module

#Install graphviz in Pyhton- C:\WINDOWS\system32>py.exe -m pip install graphviz

#Install graphviz in Anaconda: conda install -c conda-forge python-graphviz

#---------------------- ------------------------ ----------------- ------------

Data management is the method and technology of getting useful information from raw data generated within the business process or collected from external sources. Have you noticed that when you search for a book-shelf or school-shoes for your kid on Amazon, you start getting google-ads related to these products when you browse any other website? Your browsing history is being tracked and being exploited to remind you that you were planning to purchase a particular type of product! How is this done? Is this right or wrong? How long shall I get such 'relevant' ads? Will I get these ads even after I have already made the purchase?

The answer to all these questions lies in the way "data analytics" system has been designed and the extent to which it can access user information. For example, are such system allowed to track credit card purchase frequency and amount?

Related fields are data science, big data analytics or simply data analytics. 'Data' is the 'Oil' of 21st century and machine learning is the 'electricity'! This is a theme floating around in every organization, be it a new or a century old well-established company. Hence, a proper "management of life-cycle" of the data is as important as any other activities necessary for the smooth functioning of the organization. When we say 'life-cycle', we mean the 'generation', 'classification', "storage and distribution", "interpretation and decision making" and finally marking them 'obsolete'.

Due to sheer importance and size of such activities, there are many themes such as "Big Data Analytics". However, the organizations need not jump directly to a large scale analytics unless they test and validate a "small data analytics" to develop a robust and simple method of data collection system and processes which later complements the "Big Data Analytics". We also rely on smaller databases using tools which users are most comfortable with such as MS-Excel. This helps expedite the learning curve and sometimes even no new learning is required to get started.

Before proceeding further, let's go back to the basic. What do we really mean by the word 'data'? How is it different from words such as 'information' and 'report'? Data or a dataset is a collection of numbers, labels and symbols along with context of those values. For the information in a dataset to be relevant, one must know the context of the numbers and text it holds. Data is summarized in a table consisting of rows (horizontal entries) and columns (vertical entries). The rows are often called observations or cases.

Columns in a data table are called variables as different values are recorded in same column. Thus, columns of a dataset or data table describes the common attribute shared by the items or observations.

Let's understand the meaning and difference using an example. Suppose you received an e-mail from your manager requesting for a 'data' on certain topic. What is your common reply? Is it "Please find attached the data!" or is it "Please find attached the report for your kind information!"? Very likely the later one! Here the author is trying to convey the message that I have 'read', 'interpreted' and 'summarized' the 'data' and produced a 'report or document' containing short and actionable 'information'.

The 'data' is a category for 'information' useful for a particular situation and purpose. No 'information' is either "the most relevant" or "the most irrelevant" in absolute sense. It is the information seeker who defines the importance of any piece of information and then it becomes 'data'. The representation of data in a human-friendly manner is called 'reporting'. At the same time, there is neither any unique way of extracting useful information nor any unique information that can be extracted from a given set of data. Data analytics can be applied to any field of the universe encompassing behaviour of voters, correlation between number of car parking tickets issued on sales volume, daily / weekly trade data on projected movement of stock price...

Types of Documents

| Structured | Semi-Structured | Unstructured |

| The texts, fonts and overall layout remains fixed | The texts, fonts and overall layout varies but have some internal structure | The texts, fonts and overall layout are randomly distributed |

| Examples are application forms such as Tax Return, Insurance Policies | Examples are Invoices, Medical est reports | E-mails, Reports, Theses, Sign-boards, Product Labels |

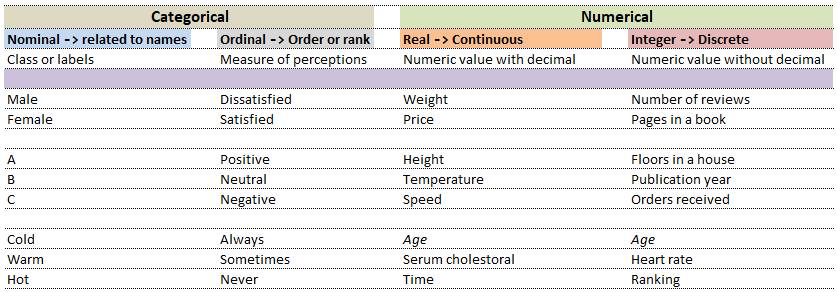

Computers understand data in a certain format whereas the nature of data can be numbers as well as words or phrases which cannot be quantified. For example, the difference in "positive and neutral" ratings cannot be quantified and will not be same as difference in "neutral and negative" ratings. There are many ways to describe the type of data we encounter in daily life such as (binary: either 0 or 1), ordered list (e.g. roll number or grade)...

| Nominal | Ordinal | ||

| What is your preferred mode of travel? | How will you rate our services? | ||

| 1 | Flights | 1 | Satisfied |

| 2 | Trains | 2 | Neutral |

| 3 | Drive | 3 | Dissatisfied |

While in the first case, digits 1, 2 and 3 are just variable labels [nominal scale] whereas in the second example, the same numbers (digits) indicate an order [ordinal scale]. Similarly, phone numbers and pin (zip) codes are 'numbers' but they form categorical variables as no mathematical operations normally performed on 'numbers' are applicable to them.

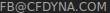

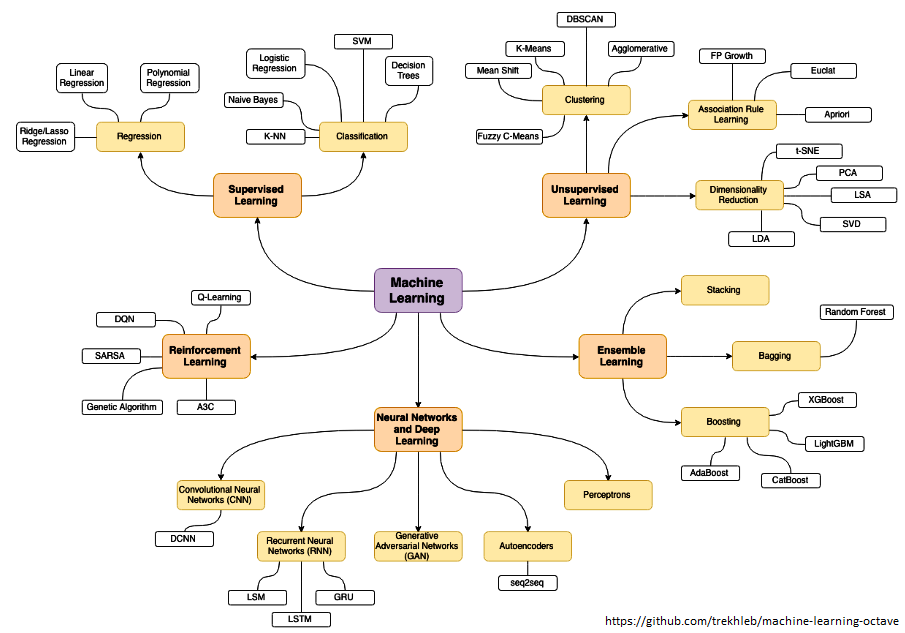

Data Analytics, Data Science, Machine Learning, Artificial Intelligence, Neural Network and Deep Learning are some of the specialized applications dealing with data. There is no well-defined boundaries as they necessarily overlap and the technology itself is evolving at rapid pace. Among these themes, Artificial Neural Network (ANN) is a technology inspired by neurons in human brains and ANN is the technology behind artificial intelligence where attempts are being made to copy how human brain works. 'Data' in itself may not have 'desired' or 'expected' value and the user of the data need to find 'features' to make machine learning algorithms works as most of them expect numerical feature vectors with a fixed size. This is also known as "feature engineering".

- Artificial Intelligence (AI): Self-driving car (autonomous vehicles), speech recognition

- Machine Learning (ML): Given a picture - identify steering angle of a car, Google translate, Face recognition, Identify hand-written letters.

| Artificial Intelligence | Machine Learning | Deep Learning |

| Engineer | Researcher | Scientist |

| B. Tech. degree | Master's degree | PhD |

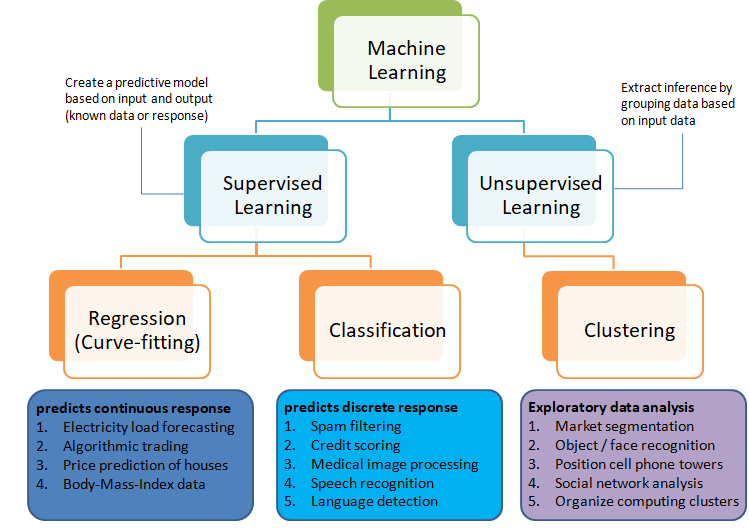

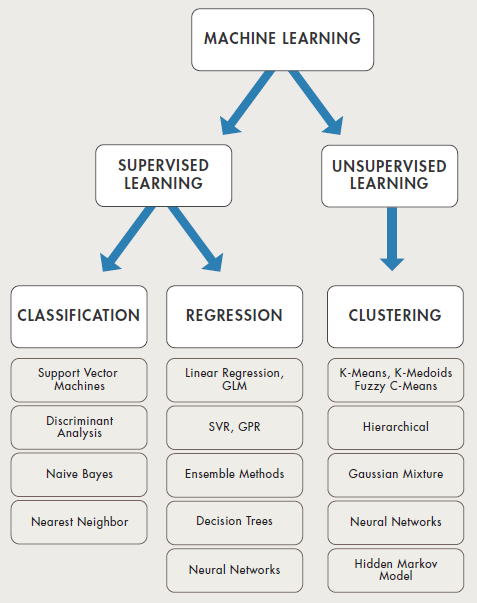

The category of supervised and unsupervised learning can be demonstrated as per the chart below. The example applications of each type of the machine learning method helps find a clear distinction among those methods. The methods are nothing new and we do it very often in our daily life. For example, ratings in terms of [poor, average, good, excellent] or [hot, warm, cold] or [below expectations, meets expectations, exceeds expectations, substantially exceeds expectations] can be based on different numerical values. Refer the customer loyalty rating (also known as Net Promoters Score) where a rating below 7 on scale of 10 is considered 'detractors', score between '7 - 8' is rated 'passive' and score only above 8 is considered 'promoter'. This highlights the fact that no uniform scale is needed for classifications.

Selection of machine learning algorithms: reference e-book "Introducing Machine Learning" by MathWorks.

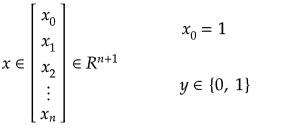

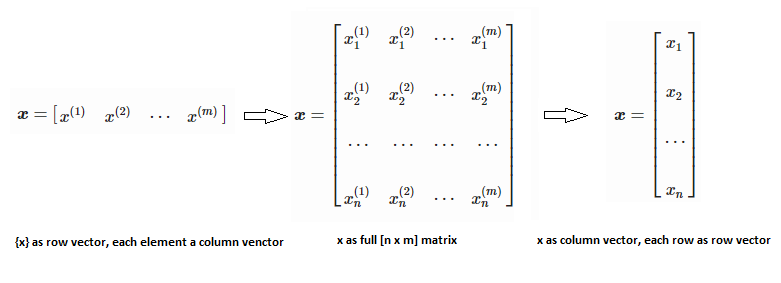

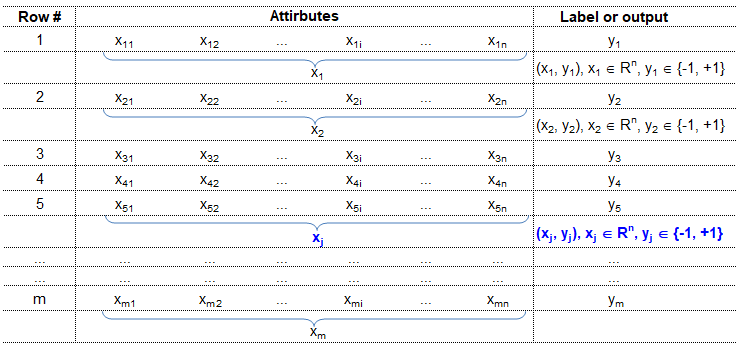

Machine learning is all about data and data is all about row and column vectors. Each instance of a data or observation is usually represented by a row vector where the first or the last element may be the 'variable or category desired to be predicted'. Thus, there are two broad division of a data set: features and labels (as well as levels of the labels).

- Features: This refers to key characteristics of a dataset or entity. In other words, features are properties that describe each instance of data. Each instance is a point in feature space. For example, a car may have color, type (compact, sedan, SUV), drive-type (AWD, 4WD, FWD, RWD) ... This is also known as predictors, inputs or attributes.

- Label: This is the final identifier such as price category of a car. It is also known as the target, response or output of a feature vector.

As in any textbook, there are solved examples to demonstrate the theory explained in words, equations and figures. And then there are examples (with or without known answers) to readers to solve and check their learnings. The two sets of question can be classified as "training questions" and "evaluation questions" respectively. Similarly in machine learning, we may have a group of datasets where output or label is known and another datasets where labels may not be known.

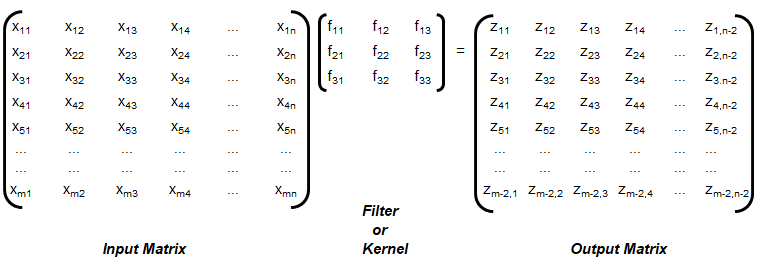

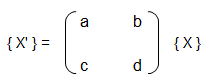

Training set is an input data where for every predefined set of features 'xi' we have a correct classification y. It is represented as tuples [(x1, y1), (x2, y2), (x3, y3) ... (xk, yk)] which represents 'k' rows in the dataset. Rows of 'x' correspond to observations and columns correspond to variables or attributes or labels. In other words, feature vector 'x' can be represented in matrix notation as:

Hypothesis (the Machine Learning Model)

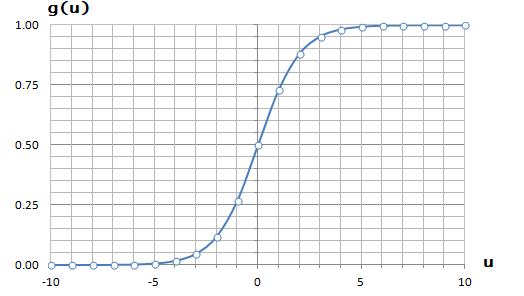

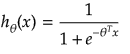

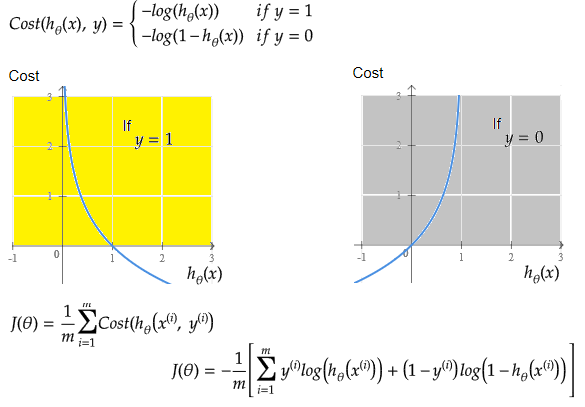

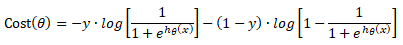

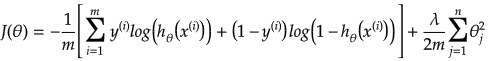

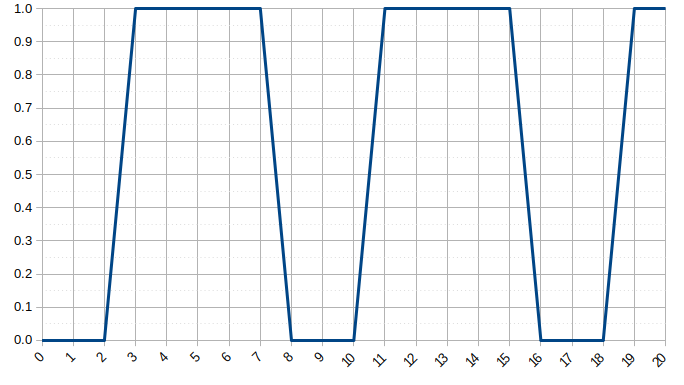

It is equation that gets features and parameters as an input and predicts the value as an output (i.e. predict if the email is spam or not based on some email characteristics). hθ(x) = g(θTx) where 'T' refers to transpose operation, θ are the (unknown) parameters evaluated during the learning process and 'g' is the Sigmoid function g(u) = [1+e-u]-1 which is plotted below.

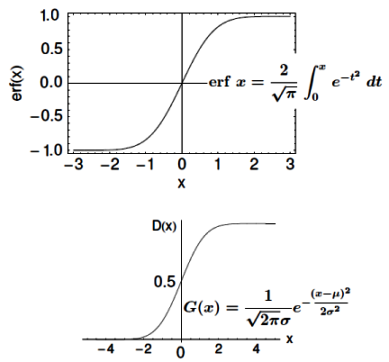

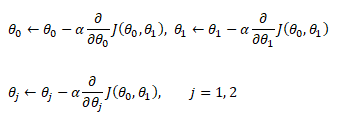

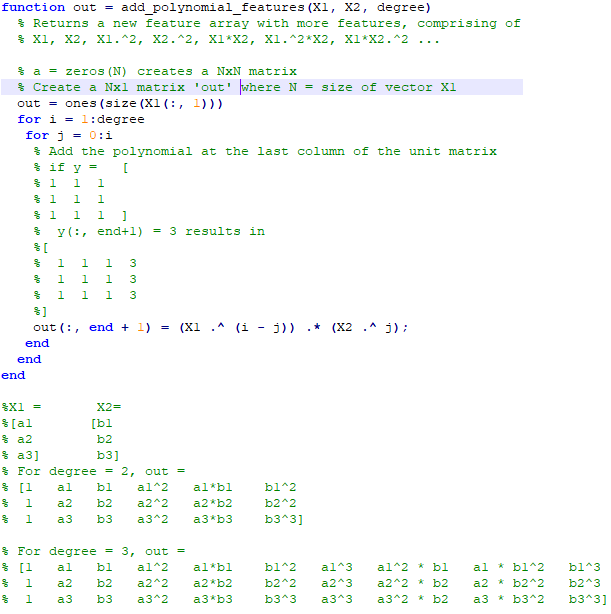

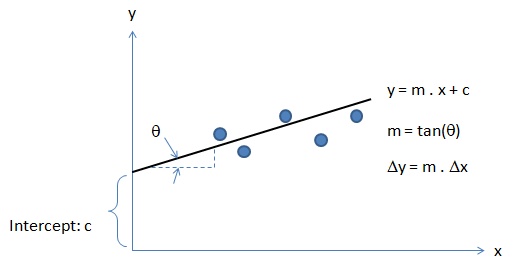

Activation Function: The hypothesis for a linear regression can be in the form y = m·x + c or y = a + b·log(c·x). The objective function is to estimate value of 'm' and 'c' by minimizing the square error as described in cost function.

The objective function of a logistic regression can be described as:- Predict y = 0 if hθ(x) < 0.5 which is true if θTx ≥ 0.

- Predict y = 1 if hθ(x) > 0.5 which is true if θTx < 0. θi are called parameters of the model.

Note that the Sigmoid function looks similar to classical error function and cumulative normal distribution function with mean zero.

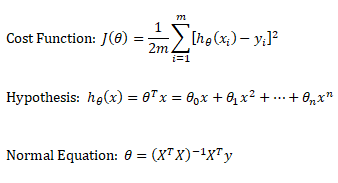

Linear regression: cost function also known as "square error function" is expressed as

Cost(θ) = - y × log[hθ(x)] - (1-y) × [1 - log(hθ(x))]

In other words:

Additional method adopted is the "mean normalization" where all the features are displacement such that their means are closer to 0. These two scaling of the features make the gradient descent method faster and ensures convergence.

Normal Equation

This refers to the analytical method to solver for θ. If the matrix XTX is invertible, θ = (XTX)-1XTy where y is column vector of known labels (n × 1). X is features matrix of size n × (m+1) having 'n' number of datasets (rows) in training set and 'm' number of attributes.If [X] contains any redundant feature (a feature which is dependent on other features), it is likely to be XTX non-invertible.

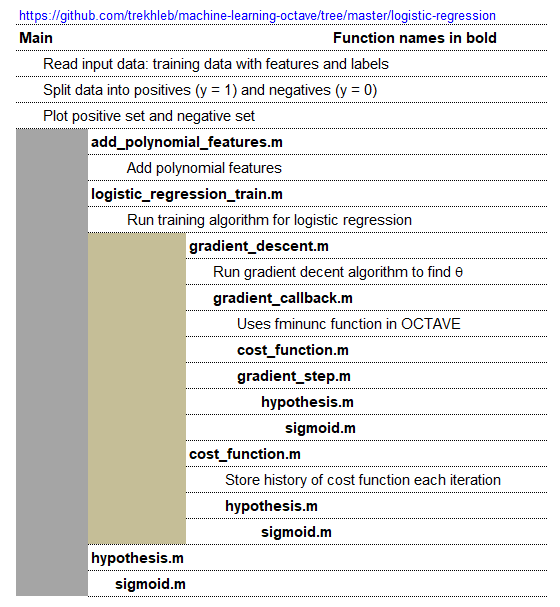

An implementation of logistic regression in OCTAVE is available on the web. One of these available in GitHub follow the structure shown below.

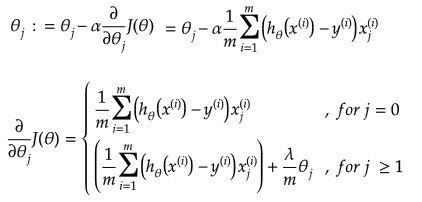

An explanation of the function add_polynomial_feature.m is described below.

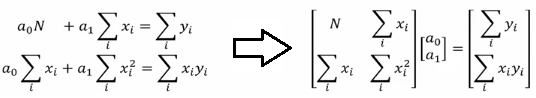

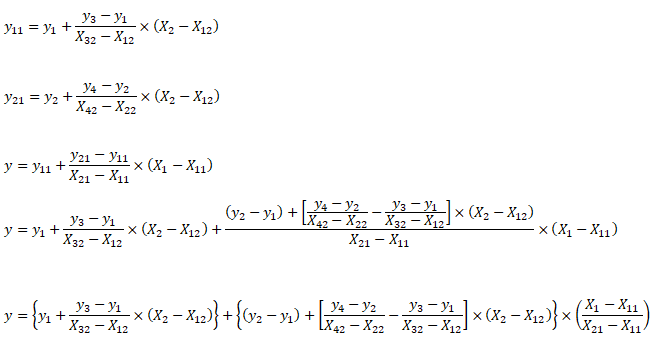

Optimization and regression using Octave - click to get the GNU OCTAVE scripts. Linear Least Square (LSS) is a way to estimate the curve-fit parameters. A linear regression is fitting a straight line y = a0 + a1x where {x} is the independent variable and y is the dependent variable. Since there are two unknowns a0 and a1, we need two equations to solve for them. If there are N data points:

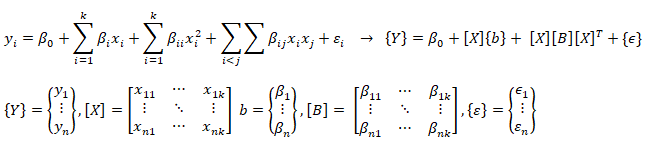

Similarly, if dependent variable y is function of more than 1 independent variables, it is called multi-variable linear regression where y = f(x1, x2...xn). The curve fit equations is written as y = a0 + a1x1 + a2x2 + ... + anxn + ε where ε is the curve-fit error. Here xp can be any higher order value of xjk and/or interaction term (xi xj).

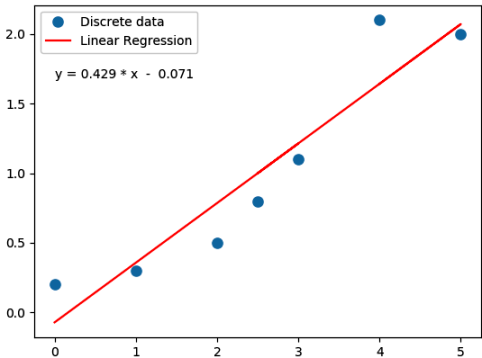

Following Python script performs linear regression and plots the discrete data, linear equation from curve-fitting operation and annotates the linear equation on the plot.

import numpy as np

#Specify coefficient matrix: independent variable values

x = np.array([0.0, 1.0, 2.0, 3.0, 2.5, 5.0, 4.0])

#Specify ordinate or "dependent variable" values

y = np.array([0.2, 0.3, 0.5, 1.1, 0.8, 2.0, 2.1])

#Create coefficient matrix

A = np.vstack([x, np.ones(len(x))]).T

#least square regression: rcond = cut-off ratio for small singular values of a

#Solves the equation [A]{x} = {b} by computing a vector x that minimizes the

#squared Euclidean 2-norm | b - {A}.{x}|^2

m, c = np.linalg.lstsq(A, y, rcond=None)[0]

print("\n Slope = {0:8.3f}".format(m))

print("\n Intercept = {0:8.3f}".format(c))

import matplotlib.pyplot as plt

_ = plt.plot(x, y, 'o', label='Discrete data', markersize=8)

_ = plt.plot(x, m*x + c, 'r', label='Linear Regression')

_ = plt.legend()

if (c > 0):

eqn = "y ="+str("{0:6.3f}".format(m))+' * x + '+str("{0:6.3f}".format(c))

else:

eqn = "y ="+str("{0:6.3f}".format(m))+' * x - '+str("{0:6.3f}".format(abs(c)))

print('\n', eqn)

#Write equation on the plot

# text is right-aligned

plt.text(min(x)*1.2, max(y)*0.8, eqn, horizontalalignment='left')

plt.show()

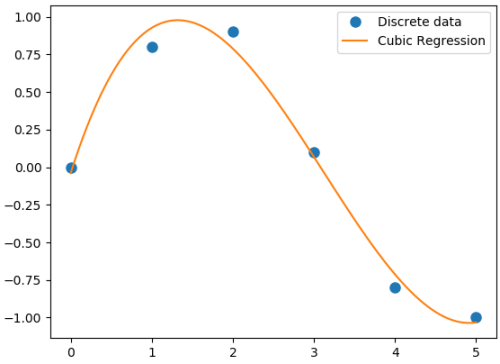

If the equation used to fit has exponent of x > 1, it is called a polynomical regression. A quadratic regression uses polynomial of degree 2 (y = a0 + a1x + a2x2 + ε), a cubic regression uses polynomial of degree 3 (y = a0 + a1x + a2x2 + a3x3 + ε) and so on. Since the coefficients are constant, a polynomial regression in one variable can be deemed a multi-variable linear regression where x1 = x, x2 = x2, x3 = x3 ... In scikit-learn, PolynomialFeatures(degree = N, interaction_only = False, include_bias = True, order = 'C') generates a new feature matrix consisting of all polynomial combinations of the features with degree less than or equal to the specified degree 'N'. E.g. poly = PolynomialFeatures(degree=2), Xp = poly.fit_transform(X, y) will transform [x1, x2] to [1, x1, x2, x1*x1, x1*x2, x2*x2]. Argument option "interaction_only = True" can be used to create only the interaction terms. Bias column (added as first column) is the feature in which all polynomial powers are zero (i.e. a column of ones - acts as an intercept term in a linear model).

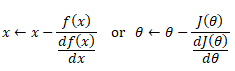

Polynomial regression in single variable - Uni-Variate Polynomial Regression: The Polynomial Regression can be perform using two different methods: the normal equation and gradient descent. The normal equation method uses the closed form solution to linear regression and requires matrix inversion which may not require iterative computations or feature scaling. Gradient descent is an iterative approach that increments theta according to the direction of the gradient (slope) of the cost function and requires initial guess as well.

#Least squares polynomial fit: N = degree of the polynomial

#Returns a vector of coefficients that minimises the squared error in the order

#N, N-1, N-2 … 0. Thus, the last coefficient is the constant term, and the first

#coefficient is the multiplier to the highest degree term, x^N

import warnings; import numpy as np

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])

y = np.array([0.0, 0.8, 0.9, 0.1, -0.8, -1.0])

#

N = 3

#full=True: diagnostic information from SVD is also returned

coeff = np.polyfit(x, y, N, rcond=None, full=True, w=None, cov=False)

np.set_printoptions(formatter={'float': '{: 8.4f}'.format})

print("Coefficients: ", coeff[0])

print("Residuals:", coeff[1])

print("Rank:", coeff[2])

print("Singular Values:", coeff[3])

print("Condition number of the fit: {0:8.2e}".format(coeff[4]))

#poly1D: A 1D polynomial class e.g. p = np.poly1d([3, 5, 8]) = 3x^2 + 5x + 8

p = np.poly1d(coeff[0])

xp = np.linspace(x.min(), x.max(),100)

import matplotlib.pyplot as plt

_ = plt.plot(x, y, 'o', label='Discrete data', markersize=8)

_ = plt.plot(xp, p(xp), '-', label='Cubic Regression', markevery=10)

_ = plt.legend()

plt.rcParams['path.simplify'] = True

plt.rcParams['path.simplify_threshold'] = 0.0

plt.show()

Output from the above code is:

Coefficients: [0.0870 -0.8135 1.6931 -0.0397] Residuals: [ 0.0397] Rank: 4 Singular Values: [1.8829 0.6471 0.1878 0.0271] Condition number of the fit: 1.33e-15In addition to 'poly1d' to estimate a polynomial, 'polyval' and 'polyvalm' can be used to evaluate a polynomial at a given x and in the matrix sense respectively. ppval(pp, xi) evaluate the piecewise polynomial structure 'pp' at the points 'xi' where 'pp' can be thought as short form of piecewise polynomial.

Similarly, a non-linear regression in exponential functions such as y = c × ekx can be converted into a linear regression with semi-log transformation such as ln(y) = ln(c) + k.x. It is called semi-log transformation as log function is effectively applied only to dependent variable. A non-linear regression in power functions such as y = c × xk can be converted into a linear regression with log-log transformation such as ln(y) = ln(c) + k.ln(x). It is called log-log transformation as log function is applied to both the independent and dependent variables.

A general second order model is expressed as described below. Note the variable 'k' has different meaning as compared to the one described in previous paragraph. Here k is total number of independent variables and n is number of rows (data in the dataset).

As per MathWorks: "The multivariate linear regression model is distinct from the multiple linear regression model, which models a univariate continuous response as a linear combination of exogenous terms plus an independent and identically distributed error term." Note that endogenous and exogenous variables are similar but not same as dependent and independent variables. For example, the curve fit coefficients of a linear regression are variable (since they are based on x and y), they are called endogenous variables - values that are determined by other variables in the system. An exogenous variable is a variable that is not affected by other variables in the system. In contrast, an endogenous variable is one that is influenced by other factors in the system. Here the 'system' may refer to the "regression algorithm".

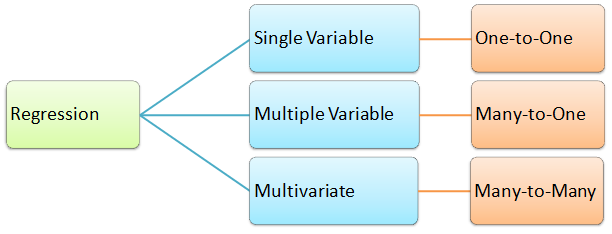

In summary, categorization of regression types:

#----------------------- -------------------------- ---------------------------

import numpy as np

import pandas as pd

df = pd.read_csv('MultiVariate2.csv', sep=',', header='infer')

X = df.values[0:20, 0:3]

y = df.values[0:20, 3]

#Y = a1x1 + a2x2 + a3x3 + ... + +aNxN + c

#-------- Method-1: linalg.lstsq ---------------------- -----------------------

X = np.c_[X, np.ones(X.shape[0])] # add bias term

beta_hat = np.linalg.lstsq(X, y, rcond=None)[0]

print(beta_hat)

print("\n------ Runnning Stats Model ----------------- --------\n")

#Ordinary Least Squares (OLS), Install: py -m pip -U statsmodels

from statsmodels.api import OLS

model = OLS(y, X)

result = model.fit()

print (result.summary())

#-------- Method-3: linalg.lstsq ----------------------- ----------------------

print("\n-------Runnning Linear Regression in sklearn ---------\n")

from sklearn.linear_model import LinearRegression

regressor = LinearRegression()

regressor.fit(X, y)

print(regressor.coef_) #print curve-fit coefficients

print(regressor.intercept_) #print intercept values

#

#print regression accuracy: coefficient of determination R^2 = (1 - u/v), where

#u is the residual sum of squares and v is the total sum of squares.

print(regressor.score(X, y))

#

#calculate y at given x_i

print(regressor.predict(np.array([[3, 5]])))

More example of curve-fit using SciPy

'''

Curve fit in more than 1 independent variables.

Ref: stackoverflow.com/.../fitting-multivariate-curve-fit-in-python

'''

import numpy as np

import matplotlib.pyplot as plt

from scipy.optimize import curve_fit

def fit_circle(x, a, b):

'''

Model Function that provides the type of fit y = f(x). It must take

the independent variable as the first argument and the parameters

to fit as separate remaining arguments.

'''

return a*x[0]*x[0] + b*x[1]*x[1]

def fit_poly(x, a, b, c, d, e, f):

'''

Model Function that provides the type of fit y = f(x). It must take

the independent variable as the first argument and the parameters

to fit as separate remaining arguments.

'''

return a*x[0]*x[0] + b*x[1]*x[1] + c*x[0]*x[1] + d * x[0] + e * x[1] + f

def fit_lin_cross(x, a, b, c, d):

'''

Model Function that provides the type of fit y = f(x). It must take

the independent variable as the first argument and the parameters

to fit as separate remaining arguments.

'''

return a*x[0] + b*x[1] + c*x[0]*x[1] + d

def fit_2d_data(fit_func, x_data, y_data, p0=None):

'''

Main function to calculate coefficients.

x_data: (k,M)-shaped array for functions with k predictors (data points)

y_data: The dependent data, a length M array

p0: Initial guess for the parameters (length N), default = 1

'''

fitParams, fitCovariances = curve_fit(fit_func, x_data, y_data, p0)

print('Curve-fit coefficients: \n', fitParams)

# Run curve-fit. x, y and z arrays can be read from a text file.

x = np.array([1, 2, 3, 4, 5, 6])

y = np.array([2, 3, 4, 5, 6, 8])

z = np.array([5, 13, 25, 41, 61, 100])

x_data = (x, y)

fit_2d_data(fit_lin_cross, x_data, z)

Ridge Regression

If data suffers from multicollinearity (independent variables are highly correlated), the least squares estimates result in large variances which deviates the observed value far from the true value (low R-squared, R2). By adding a degree of bias to the regression estimates using a "regularization or shrinkage parameter", ridge regression reduces the standard errors. In scikit-learn, it is invoked by "from sklearn.linear_model import Ridge". The function is used by: reg = Ridge(alpha=0.1, fit_intercept=True, normalize=False, solver='auto', random_state=None); reg.fit(X_trn, y_trn). Regularization improves the conditioning of the problem and reduces the variance of the estimates. Larger values specify stronger regularization.

Lasso Regression

Lasso (Least Absolute Shrinkage and Selection Operator) is similar to ridge regression which penalizes the absolute size of the regression coefficients instead of squares in the later. Thus, ridge regression uses L2-regularization whereas as LASSO use L1-regularization. In scikit-learn, it is invoked by "from sklearn.linear_model import Lasso". The function is used by: reg = Lasso(); reg.fit(X_trn, y_trn)SVR

Support Vector Machines (SVM) used for classification can be extended to solve regression problems and method is called Support Vector Regression (SVR).Regression in two variables: example

| X1 | X2 | y | X1 | X2 | y | X1 | X2 | y | X1 | X2 | y | |||

| 5 | 20 | 100.0 | First Interpolation on X2 | Second Interpolation on X2 | Final interpolation on X1 | |||||||||

| 10 | 20 | 120.0 | 5 | 20 | 100.0 | 10 | 20 | 120.0 | 5 | 25 | 200.0 | |||

| 5 | 40 | 500.0 | 5 | 40 | 500.0 | 10 | 40 | 750.0 | 10 | 25 | 277.5 | |||

| 10 | 40 | 750.0 | ||||||||||||

| 8 | 25 | ? | 5 | 25 | 200.0 | 10 | 25 | 277.5 | 8 | 25 | 246.5 | |||

Interpolate your values:

| Description | Xi1 | Xi2 | yi |

| First set: | |||

| Second set: | |||

| Third set: | |||

| Fourth set: | |||

| Desired interpolation point: | |||

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LinearRegression

from sklearn import linear_model

import numpy as np

import pandas as pd

import sys

#Degree of polynomial: note N = 1 implies linear regression

N = 3;

#--------------- DATA SET-1 -------------------- ------------------- -----------

X = np.array([[0.4, 0.6, 0.8], [0.5, 0.3, 0.2], [0.2, 0.9, 0.7]])

y = [10.1, 20.2, 15.5]

print(np.c_[X, y]) # Column based concatenation of X and y arrays

#-------------- DATA SET-2 -------------- ------------------- ------------------

# Function importing Dataset

df = pd.read_csv('Data.csv', sep=',', header='infer')

#Get size of the dataframe. Note that it excludes header rows

iR, iC = df.shape

# Feature matrix

nCol = 5 #Specify if not all columns of input dataset to be considered

X = df.values[:, 0:nCol]

y = df.values[:, iC-1]

print(df.columns.values[0]) #Get names of the features

#Print header: check difference between df.iloc[[0]], df.iloc[0], df.iloc[[0,1]]

#print("Header row\n", df.iloc[0])

p_reg = PolynomialFeatures(degree = N, interaction_only=False, include_bias=False)

X_poly = p_reg.fit_transform(X)

#X will transformed from [x1, x2] to [1, x1, x2, x1*x1, x1x2, x2*x2]

X_poly = p_reg.fit_transform(X)

#One may remove specific polynomial orders, e.g. 'x' component

#Xp = np.delete(Xp, (1), axis = 1)

#Generate the regression object

lin_reg = LinearRegression()

#Perform the actual regression operation: 'fit'

reg_model = lin_reg.fit(X_poly, y)

#Calculate the accuracy

np.set_printoptions(formatter={'float': '{: 6.3e}'.format})

reg_score = reg_model.score(X_poly, y)

print("\nRegression Accuracy = {0:6.2f}".format(reg_score))

#reg_model.coef_[0] corresponds to 'feature-1', reg_model.coef_[1] corresponds

#to 'feature2' and so on. Total number of coeff = 1 + N x m + mC2 + mC3 ...

print("\nRegression Coefficients =", reg_model.coef_)

print("\nRegression Intercepts = {0:6.2f}".format(reg_model.intercept_))

#

from sklearn.metrics import mean_squared_error, r2_score

# Print the mean squared error (MSE)

print("MSE: %.4f" % mean_squared_error(y, reg_model.predict(X_poly)))

# Explained variance score (R2-squared): 1.0 is perfect prediction

print('Variance score: %.4f' % r2_score(y, reg_model.predict(X_poly)))

#

#xTst is set of independent variable to be used for prediction after regression

#Note np.array([0.3, 0.5, 0.9]) will result in error. Note [[ ... ]] is required

#xTst = np.array([[0.2, 0.5]])

#Get the order of feature variables after polynomial transformation

from sklearn.pipeline import make_pipeline

model = make_pipeline(p_reg, lin_reg)

print(model.steps[0][1].get_feature_names())

#Print predicted and actual results for every 'tD' row

np.set_printoptions(formatter={'float': '{: 6.3f}'.format})

tD = 3

for i in range(1, round(iR/tD)):

tR = i*tD

xTst = [df.values[tR, 0:nCol]]

xTst_poly = p_reg.fit_transform(xTst)

y_pred = reg_model.predict(xTst_poly)

print("Prediction = ", y_pred, " actual = {0:6.3f}".format(df.values[tR, iC-1]))

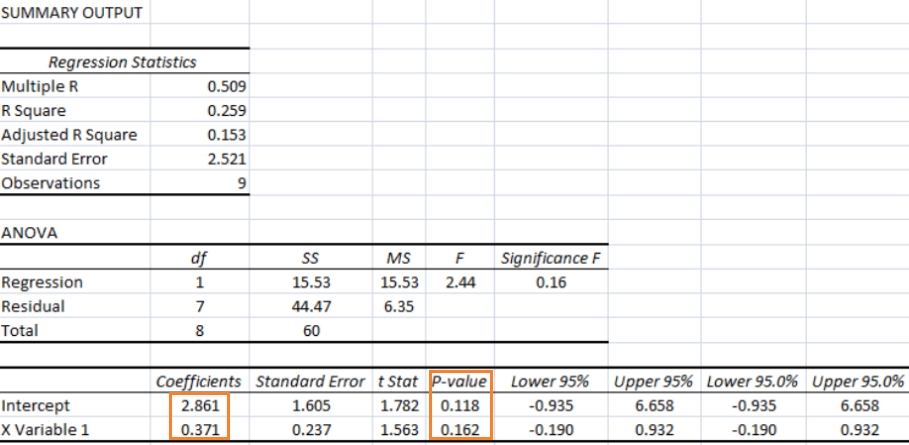

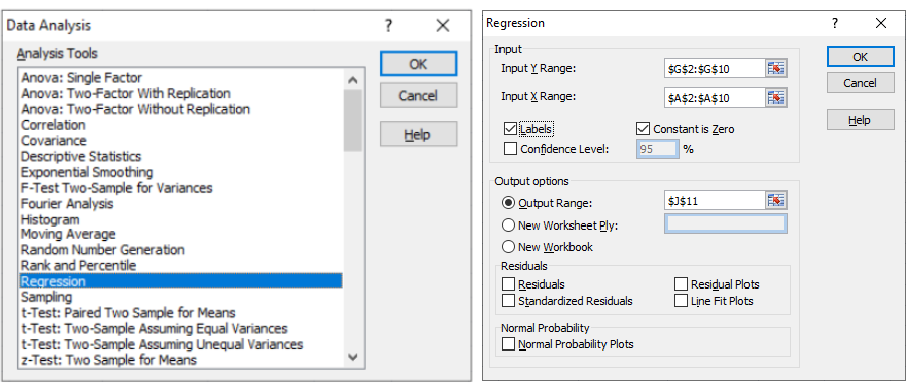

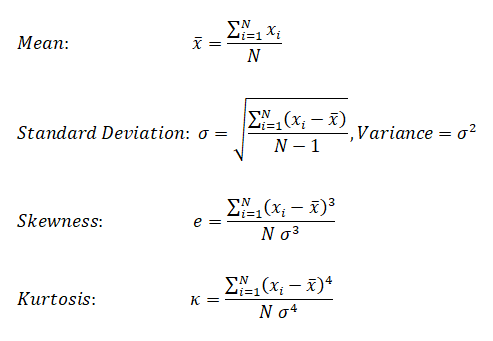

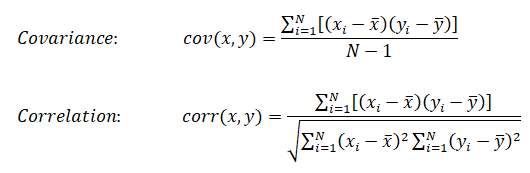

For all regression activities, statistical analysis is a necessity to determine the quality of the fit (how well the regression model fits the data) and the stability of the model (the level of dependence of the model parameters on the particular set of data). The appropriate indicators for such studies are the residual plot (for quality of the fit) and 95% confidence intervals (for stability of the model).

A web-based application for "Multivariate Polynomial Regression (MPR) for Response Surface Analysis" can be found at www.taylorfit-rsa.com. A dataset to test a multivariable regression model is available at UCI Machine Learning Repository contributed by I-Cheng Yeh, "Modeling of strength of high performance concrete using artificial neural networks", Cement and Concrete Research, Vol. 28, No. 12, pp. 1797-1808 (1998). The actual concrete compressive strength [MPa] for a given mixture under a specific age [days] was determined from laboratory. Data is in raw form (not scaled) having 1030 observations with 8 input variables and 1 output variable.

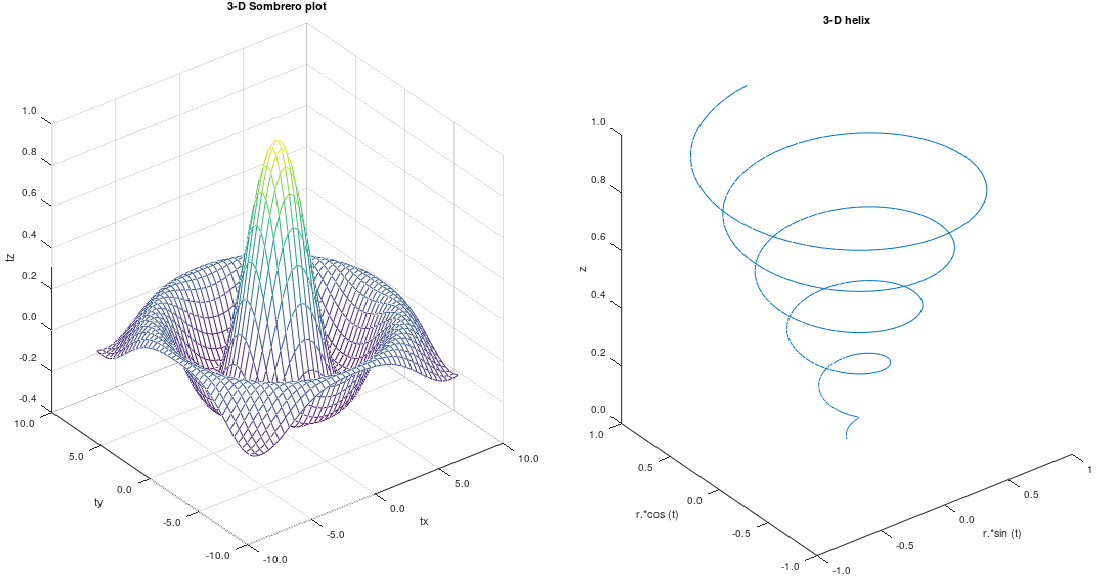

In general, it is difficult to visualize plots beyond three-dimension. However, the relation between output and two variables at a time can be visualized using 3D plot functionality available both in OCTAVE and MATPLOTLIB.

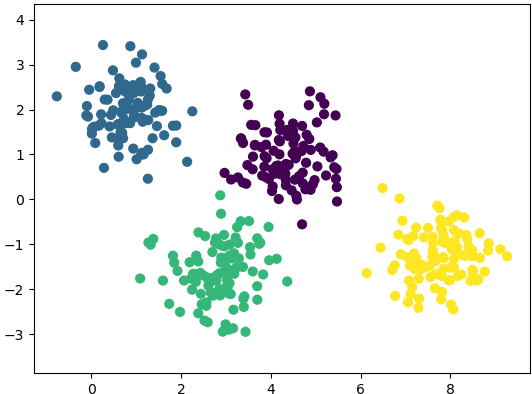

Getting the training data: The evaluation of machine learning algorithm requires set of authentic data where the inputs and labels are correctly specified. However, 'make_blobs' module in scikit-learn is a way to generate (pseudo)random dataset which can be further used to train the ML algorithm. Following piece of code available from jakevdp.github.io/PythonDataScienceHandbook/05.12-gaussian-mixtures.html: Python Data Science Handbook by Jake VanderPlas is a great way to start with.

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_blobs

X, y = make_blobs(n_samples=400, centers=4, cluster_std=0.60, random_state=0)

X = X[:, ::-1] # flip axes for better plotting

plt.scatter(X[:, 0], X[:, 1], c=y, s=40, cmap='viridis', zorder=2)

plt.axis('equal')

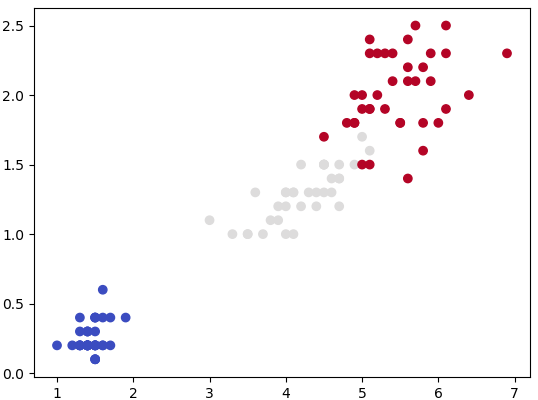

plt.show() This generates a dataset as shown below. Note that the spread of data points can be controlled by value of argument cluster_std.

In regression, output variable requires input variable to be continuous in nature. In classifications, output variables require class label and discrete input values.

Under-fitting:

The model is so simple that it cannot represent all the key characteristics of the dataset. In other words, under-fitting is when the model had the opportunity to learn something but it didn't. It is said to have high bias and low variance. The confirmation can come from "high training error" and "high test error" values. In regression, fitting a straight line in otherwise parabolic variation of the data is under-fitting. Thus, adding a higher degree feature is one of the ways to reduce under-fitting. 'Bias' refers to a tendency towards something. e.g. a manager can be deemed biased if he continuously rates same employee high for many years though it may be fair and the employee could have been outperforming his colleagues. Similarly, a learning algorithm may be biased towards a feature and may 'classify' an input dataset to particular 'type' repeatedly. Variance is nothing but spread. As known in statistics, standard deviation is square root of variance. Thus, a high variance refers to the larger scattering of output as compared to mean.Over-fitting:

The model is so detailed that it represents also those characteristics of the dataset which otherwise would have been assumed irrelevant or noise. In terms of human learning, it refers to memorizing answers to questions without understanding them. It is said to have low bias and high variance. The confirmation can come from "very low training error - near perfect behaviour" and "high test error" values. Using the example of curve-fitting (regression), fitting a parabolic curve in otherwise linearly varying data is over-fitting. Thus, reducing the degree feature is one of the ways to reduce over-fitting. Sometime, over-fitting is also described is "too good to be true". That is the model fits so well that in cannot be true.

| ML Performance | If number of features increase | If number of parameters increase | If number of training examples increase |

| Bias | Decreases | Decreases | Remains constant |

| Variance | Increases | Increases | Decreases |

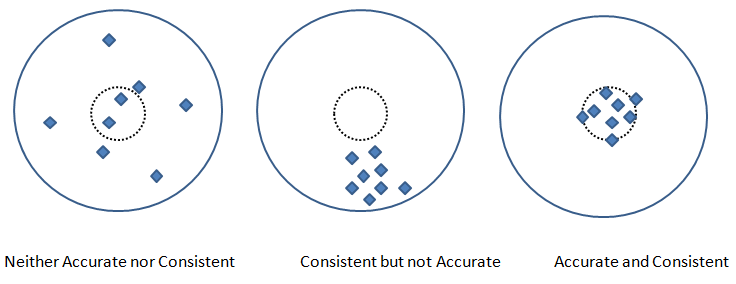

Precision and Recall are two other metric used to check the fidelity of the model. In measurements, 'accuracy' refers to the closeness of a measured value to a standard or known value and 'precision' refers to the closeness of two or more measurements to each other. Precision is sometimes also referred as consistency. Following graphics explains the different between accuracy and precision or consistency.

Pickling

Run the program of learning or training the datasets once and use its parameters every time the code is run again - this process is called pickling (analogous to classical pickles we eat)! In scikit-learn, save the classifier to disk (after training):

from sklearn.externals import joblibjoblib.dump(clf, 'pickledData.pkl')

Load the pickled classifierclf = joblib.load('pickledDatae.pkl')

It is the process of reducing the number of attributes or labels or random variables by obtaining a set of 'unique' or "linearly independent" or "most relevant" or 'principal' variables. For example, if length, width and area are used as 'label' to describe a house, the area can be a redundant variable which equals length × width. The technique involves two steps: [1]Feature identification/selection and [2]Feature extraction. The dimensionality reduction can also be accomplished by finding a smaller set of new variables, each being a combination of the input variables, containing essentially the same information as the input variables. For example, a cylinder under few circumstances can be represented just by a disk where its third dimension, height or length of the cylinder is assumed to be of less important. Similarly, a cube (higher dimensional data) can be represented by a square (lower dimensional data).

- Principal Component Analysis (PCA): This method dates back to Karl Pearson in 1901, is an unsupervised algorithm that creates linear combinations of the original features. The new features are orthogonal, which means that they are linearly independent or uncorrelated. PCA projects the original samples to a low dimensional subspace, which is generated by the eigen-vectors corresponding to the largest eigen-values of the covariance matrix of all training samples. PCA aims at minimizing the mean-squared-error. [Reference: A survey of dimensionality reduction techniques C.O.S. Sorzano, J. Vargas, A. Pascual-Montano] - The key idea is to find a new coordinate system in which the input data can be expressed with many less variables without a significant error.

- Linear Discriminant Analysis (LDA): A "discriminant function analysis" is used to determine which variables discriminate (differentiate or distinguish) between two or more groups or datasets (it is used as either a hypothesis testing or exploratory method). Thus, LDA like PCA, also creates linear combinations of input features. However, LDA maximizes the separability between classes whereas PCA maximizes "explained variance". The analysis requires the data to have appropriate class labels. It is not suitable for non linear dataset.

- Generalized Discriminant Analysis (GDA): The GDA is a method designed for non-linear classification based on a kernel function φ which transform the original space X to a new high-dimensional (linear) feature space. In cases where GDA is used for dimensionality reduction techniques, GDA projects a data matrix from a high-dimensional space into a low-dimensional space by maximizing the ratio of "between-class scatter" to "within-class scatter".

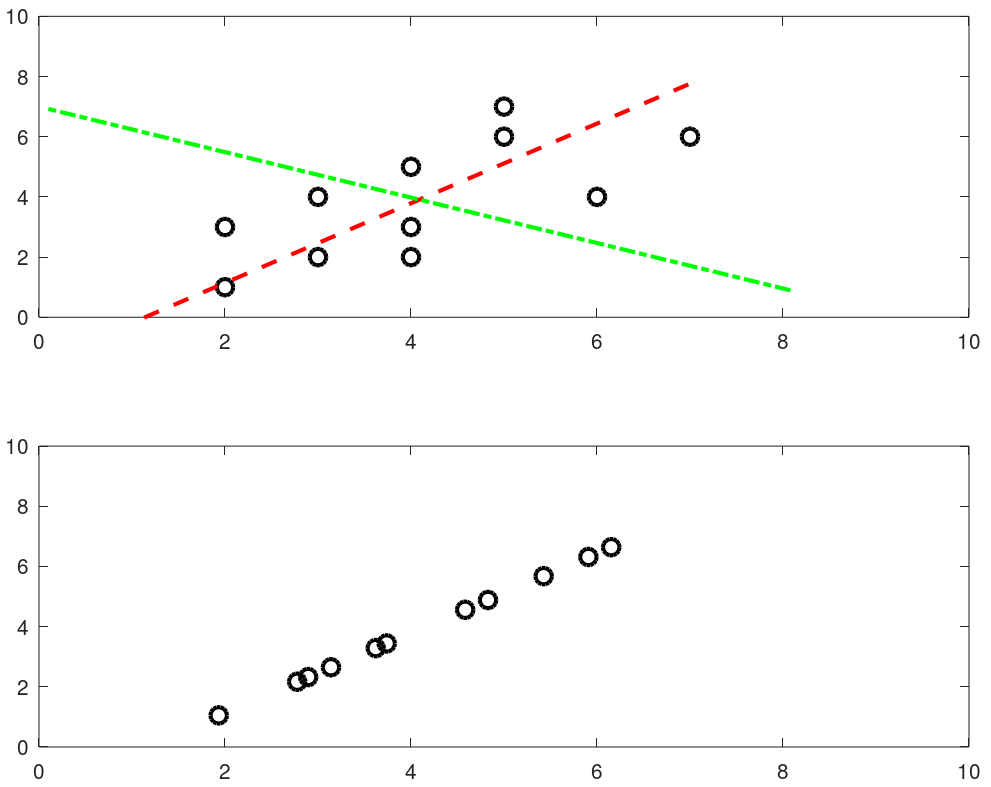

Principal Component Analysis - PCA in OCTAVE

% PCA

%PCA: Principal component analysis using OCTAVE - principal components similar

%to principal stress and strain in Solid Mechanics, represent the directions of

%the data that contains maximal amount of variance. In other words, these are

%the lines (in 2D) and planes in (3D) that capture most information of the data.

%Principal components are less interpretable and may not have any real meaning

%since they are constructed as linear combinations of the initial variables.

%

%Few references:

%https://www.bytefish.de/blog/pca_lda_with_gnu_octave/

%Video on YouTube by Andrew NG

%

clc; clf; hold off;

% STEP-1: Get the raw data, for demonstration sake random numbers are used

%Generate an artificial data set of n x m = iR x iC size

iR = 11; % Total number of rows or data items or training examples

iC = 2; % Total number of features or attributes or variables or dimensions

k = 2; % Number of principal components to be retained out of n-dimensions

X = [2 3; 3 4; 4 5; 5 6; 5 7; 2 1; 3 2; 4 2; 4 3; 6 4; 7 6];

Y = [ 1; 2; 1; 2; 1; 2; 2; 2; 1; 2; 2];

c1 = X(find(Y == 1), :);

c2 = X(find(Y == 2), :);

hold on;

subplot(211); plot(X(:, 1), X(:, 2), "ko", "markersize", 8, "linewidth", 2);

xlim([0 10]); ylim([0 10]);

%

% STEP-2: Mean normalization

% mean(X, 1): MEAN of columns - a row vector {1 x iC}

% mean(X, 2): MEAN of rows - a column vector of size {iR x 1}

% mean(X, n): MEAN of n-th dimension

mu = mean(X);

% Mean normalization and/or standardization

X1 = X - mu;

Xm = bsxfun(@minus, X, mu);

% Standardization

SD = std(X); %SD is a row vector - stores STD. DEV. of each column of [X]

W = X - mu / SD;

% STEP-3: Linear Algebra - Calculate eigen-vectors and eigen-values

% Method-1: SVD function

% Calculate eigenvectors and eigenvalues of the covariance matrix. Eigenvectors

% are unit vectors and orthogonal, therefore the norm is one and inner (scalar,

% dot) product is zero. Eigen-vectors are direction of principal components and

% eigen-values are value of variance associated with each of these components.

SIGMA = (1/(iC-1)) * X1 * X1'; % a [iR x iR] matrix

% SIGMA == cov(X')

% Compute singular value decomposition of SIGMA where SIGMA = U*S*V'

[U, S, V] = svd(SIGMA); % U is iR x iR matrix, sorted in descending order

% Calculate the data set in the new coordinate system.

Ur = U(:, 1:k);

format short G;

Z = Ur' * X1;

round(Z .* 1000) ./ 1000;

%

% Method-2: EIG function

% Covariance matrix is a symmetric square matrix having variance values on the

% diagonal and covariance values off the diagonal. If X is n x m then cov(X) is

% m x m matrix. It is actually the sign of the covariance that matters :

% if positive, the two variables increase or decrease together (correlated).

% if negative, One increases when the other decreases (inversely correlated).

% Compute right eigenvectors V and eigen-values [lambda]. Eigenvalues represent

% distribution of the variance among each of the eigenvectors. Eigen-vectors in

% OCTAVE are sorted ascending, so last column is the first principal component.

[V, lambda] = eig(cov(Xm)); %solve for (cov(Xm) - lambda x [I]) = 0

% Sort eigen-vectors in descending order

[lambda, i] = sort(diag(lambda), 'descend');

V = V(:, i);

D = diag(lambda);

%P = V' * X; % P == Z

round(V .* 1000) ./ 1000;

%

% STEP-4: Calculate data along principal axis

% Calculate the data set in the new coordinate system, project on PC1 = (V:,1)

x = Xm * V(:,1);

% Reconstruct it and invert mean normalization step

p = x * V(:,1)';

p = bsxfun(@plus, p, mu); % p = p + mu

% STEP-5: Plot new data along principal axis

%line ([0 1], [5 10], "linestyle", "-", "color", "b");

%This will plot a straight line between x1, y1 = [0, 5] and x2, y2 = [1, 10]

%args = {"color", "b", "marker", "s"};

%line([x1(:), x2(:)], [y1(:), y2(:)], args{:});

%This will plot two curves on same plot: x1 vs. y1 and x2 vs. y2

s = 5;

a1 = mu(1)-s*V(1,1); a2 = mu(1)+s*V(1,1);

b1 = mu(2)-s*V(2,1); b2 = mu(2)+s*V(2,1);

L1 = line([a1 a2], [b1 b2]);

a3 = mu(1)-s*V(1,2); a4 = mu(1)+s*V(1,2);

b3 = mu(2)-s*V(2,2); b4 = mu(2)+s*V(2,2);

L2 = line([a3 a4], [b3 b4]);

args ={'color', [1 0 0], "linestyle", "--", "linewidth", 2};

set(L1, args{:}); %[1 0 0] = R from [R G B]

args ={'color', [0 1 0], "linestyle", "-.", "linewidth", 2};

set(L2, args{:}); %[0 1 0] = G from [R G B]

subplot(212);

plot(p(:, 1), p(:, 2), "ko", "markersize", 8, "linewidth", 2);

xlim([0 10]); ylim([0 10]);

hold off;

The output from this script is shown below. The two dashed lines show 2 (= dimensions of the data set) principal components and the projection over main principal component (red line) is shown in the second plot.

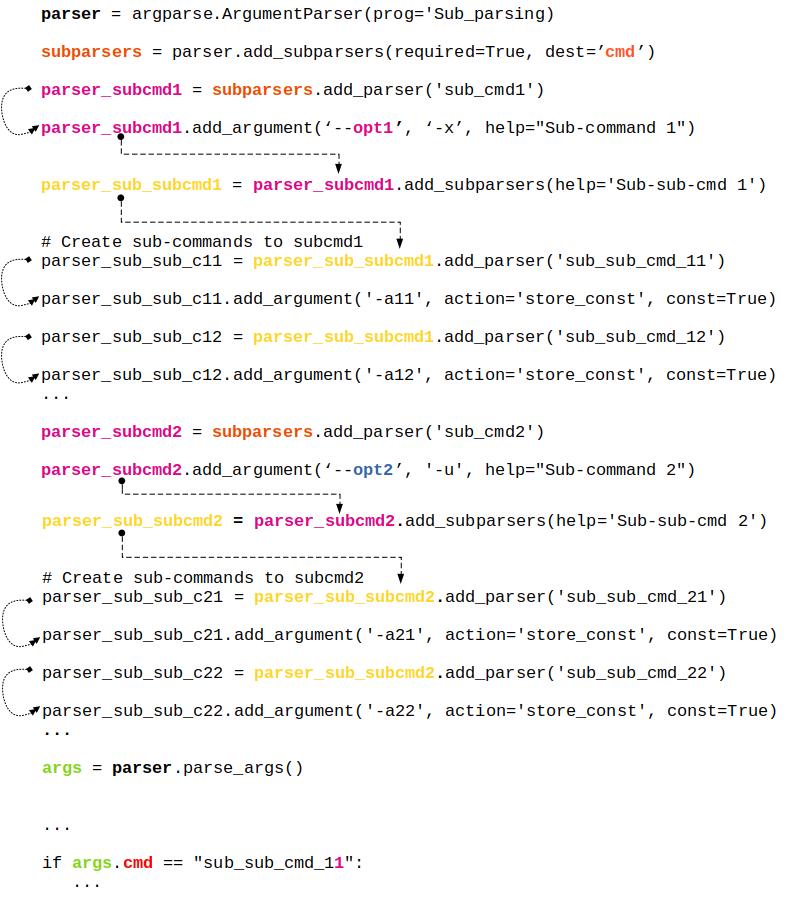

Python install / update

Install / uninstall: do not remove any older version of Python - it may uninstall Ubuntu Desktop as well. In case you unistalled a python which uninstalls Ubuntu desktop, Linux shall start in command line mode only. Use "sudo apt-get update" followed by "sudo apt-get install ubuntu-desktop" to get the desktop re-installed. It should restart with a graphical log-in prompt. If it still opens in command line mode: use 'reboot' command on the prompt. The .bash_profile shall need to be recreated especially for aliases like rm='rm -i', mv='mv -i' and cp='cp -i'.To call Python3 with just 'python' in Linux: sudo rm /usr/bin/python followed by sudo ln -s /usr/bin/python3.9 /usr/bin/python - gives error if link already exists. This way, if Python2.x is needed, it can be called explicitly with python2.x while 'python' defaults to python3 because of the symbolic link. ls -l /usr/bin/python* and ls -l /usr/local/bin/python*- get all installed versions in Linux. To make a specific version default, add in .bash_profile: alias python3='/usr/bin/python3.9'.

Install packages: sudo apt-get install python3-pip, python3 -m pip install matplotlib, pip install numpy, sudo apt-get install python3-opencv. Note that "pip install numpy" works in Linux but prints error message "Access Denied" in Windows. Use "python -m pip install numpy" in Windows terminal.OCTAVE vs. Python

You would have got a flavour of Python programming and OCTAVE script in examples provided earlier. This page does not cover about basic syntax of programming in any of the language. One thing unique in Python is the indentation. Most of the languages use braces or parentheses to define a block of code or loop and does not enforce any indentation style. Python uses indentation to define a block of statements and enforces user to follow any consistent style. For example, a tab or double spaces or triple spaces can be used for indentation but has to be only one method in any piece of code (file).

Following table gives comparison of most basic functionalities of any programming language.

| Usage | OCTAVE | Python |

| Case sensitive | Yes | Yes |

| Current working directory | pwd | import os; os.getcwd() |

| Change working directory | chdir F:\OF | import os; os.chdir("C:\\Users") |

| Clear screen | clc | import os; os.system('cls') |

| Convert number to string | num2str(123) | str(123) |

| End of statement | Semi-colon | Newline character |

| String concatenation | strcat('m = ', num2str(m), ' [kg]') | + operator: 'm = ' + str(m) + ' [kg]' |

| Expression list: tuple | - | x, y, z = 1, 2, 3 |

| Get data type | class(x) | type(x) |

| Floating points | double x | float x |

| Integers | single x | integer x, int(x) |

| User input | prompt("x = ") x = input(prompt) | print("x = ") x = input() |

| Floor of division | floor(x/y) | x // y |

| Power | x^y or x**y | x**Y |

| Remainder (modulo operator) | mod(x,y): remainder(x/y) | x%y: remainder(x/y) |

| Conditional operators | ==, <, >, != (~=), ≥, ≤ | ==, <, >, !=, ≥, ≤ |

| If Loop | if ( x == y ) x = x + 1; endif | if x == y: x = x + 1 |

| For Loop | for i=0:10 x = i * i; ... end | for i in range(1, 10): x = i * i |

| Arrays | x(5) 1-based | x[5] 0-based |

| File Embedding | File in same folder | from pyCodes import function or import pyCodes* as myFile |

| Defining a Function | function f(a, b) ... end | def f(a, b): ... |

| Anonymous (inline) Function | y = @(x) x^2; | y = lambda x : x**2 |

| Return a single random number between 0 ~ 1 | rand(1) | random.random() |

| Return a integer random number between 1 and N | randi(N) | random.randint(1,N) |

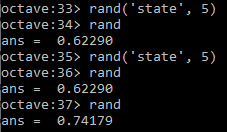

| Return a integer random number with seed | rand('state', 5) | random.seed(5) |

|  | |

| Return a single random number between a and b | randi([5, 13], 1) | random.random(5, 13) |

| Return a (float) random number between a and b | a + (b-a)*rand(1) | random.uniform(a, b) |

| Return a (float) random number array | rand(1, N) | numpy.random.rand(N) |

| Stop execution after a statement | return | sys.exit() |

To run a function (out of many stored in a module) from the command line: $python3 -c 'import moduleName; funcName()'. The parentheses at the end of funcName makes the function be called. In Windows shell, a double quote should be used instead of single. If funcName() takes arguments, to supply by the command line use sys.argv to the method or access it from the funcName() method. Alternatively, add following lines at the end of module.

if __name__ == "__main__": args = sys.argv # args[0] = current file (module name), args[1] = function name # args[2:] = function args : (*unpacked) globals()[args[1]](*args[2:])Here 'globals' pass the command line argument (name of the function) into locals which is a dictionary with a current local symbol table.

if __name__ == '__main__': moduleName()This is the way to make the function execute (run) when the file is run and not when the file (or module) is imported.

Many use of underscore: throwaway or implicit variable such as "for _ in range(5)", stores the result of the last executed expression (_ allows to call the last function/variable), double leading and trailing underscore such as __variable__ is reserved for special methods, ignoring values such as "_, thresh = cv2.threshold(img, 127, 255, 0)", grouping thousand separator for easy readability of long literals such as 5_000_000.0

Function Annotation: -> marks the (optional) return annotation of a function and annotations are dictionaries. function_name.__annotations__ prints the details such as argument names and type, return values and type...

def func(n: float) -> int: return int(n)print(func.__annotations__) gives output: "{'n': <class 'float'>, 'return': <class 'int'>}". Here the return annotation '->' infers that func() shall return an integer (though the function is not forced to return an integer). The colon separator in "n: float" specifies that 'n' should be of type float. It is like 'int' used while defining a method in (strongly typed language) Java.

public int func(float n) {

return n * n;

}

Lambda Functions, also known as anonymous functions as they do not have name. They can have any number of arguments but can only have one expression. These are good for one task that needs repetition. Lambda functions can be used inside other regular functions. In fact, this is their main advantage. f = lambda x: x**2 #Like creating a function f, y = f(5) #Execute the function. Some other built-in functions are

zip(a, b) is equivalent to y = [] for i in range(5): for j in range(3): if i == j: x = (i, j) y.append(x)

Ternary Operator

Python for and if on one line: find a list of items matching some criterion - [i for i in xlist if x % 2 == 1]. To find one item matching some criterion, x = next(i for i in xlist if x % 2 == 1). i = next((elm for elm in xlist if elm == 'two'), None) - i will be set to 'None' if there is no matching element. Ternary Operator: result = 'Even' if x % 2 == 0 else 'Odd'.

Tuple and Dictionary

Associative arrays used in other programming languages are called dictionaries in Python. Dictionaries are type of lists where keyrod and value pairs are stored separated by comma. E.g. dictList = {'streams': [{'index': 0, 'codec_name': 'vp9'}, {'index': 1, 'codec_type': 'audio'}], 'format': {'filename': 'in.webm', 'nb_streams': 2}} - this is a list of dictionaries named 'streams' and 'format'. The value of 'codec_type' that is 'audio' can be retrieved by codec = dictList['streams']['codec_name']. In other words, dictionaries are unordered set of key: value pairs, with the requirement that the keys are unique (within one dictionary)

From Python Documentation:- Pair of braces creates an empty dictionary: dictX = {}

- Placing a comma-separated list of key:value pairs within the braces adds initial key:value pairs to the dictionary

- keys() method of a dictionary object returns a list of all the keys used in the dictionary, in arbitrary order

- Apply the sorted() function to it keys() method to get an alphabetically sorted list: sortedKeys = sorted(dictName.keys())

- To check whether a single key is in the dictionary, use the 'in' keyword such as "x not in s" or "y in z"

- To loop through dictionaries, the key and corresponding value can be retrieved at the same time using the iteritems() method: for key, val in dictName.iteritems()

- The dict() constructor builds dictionaries directly from sequences of key-value pairs

- The .get() method retrieves the value associated with a key: keyValue = dictName.get(keyName). If the key exists, it returns the value else Boolean None is returned.

try:

value = dictName[key]

except KeyError:

print("Specified key is not present.\n")

pass

An underscore is used to ignore part of a tuple returned by Python commands: _, dirnames, filenames in os.walk(path) - here the underscore simply tells that the user is not going to use first part of the three-tuple returned by os.walk. However, dpath, dname, fname = os.walk() can be used to access three-tuples returned by os.walk.

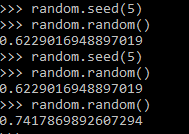

Arbitrary Positional Arguments

*args and **kwargs allow you to pass arbitrary (multiple) positional arguments and arbitrary (multiple) keyword arguments respectively to a function without declaring them beforehand. Note that keyword arguments are like dictionary with a 'key=value' pair where = is used instead of colon (:). The star (*) and double-stars (**) respectively at the start of these names are called asterisk operators or unpacking operators which return iterable objects as tuple. A tuple is similar to a list in that they both support slicing and iteration. Note that tuples are not mutable that is they cannot be changed. Tuples are specified as comma separated items inside parentheses like theTuple = (1, 2, 3) whereas lists are specified in brackets like theList = [1, 2, 3].

args[0] or "for arg in args" and kwargs[kwrd] or "for key, value in kwargs.items()" or "for kwval in kwargs.values()" can be used to access each members of args and kwargs lists respectively. "for key in kwargs" can be used to access 'key' names in kwargs list comprising of pairs of keywords and values.

How to check if *args[0] exists? Note that *args is a tuple (with zero, one or more elements) and it will result in a True if it contains at least one element. Thus, the presence of *args can be simply checked by "if args:" Similarly, if 'key1' in kwargs: can be used if key1 in **kwargs exists or not? len(args) ≡ args.__len__() and len(kwargs): find length of positional arguments. How to check if function is callable with given *args and **kwargs? While looping through a sequence, the position index and corresponding value can be retrieved at the same time using the enumerate() function: for idx, val in enumerate(listName)

Arrays

aRaY = [] - here aRaY refers to an empty list though this is an assignment, not a declaration. Python can refer aRaY to anything other than a list since Python is dynamically typed. The default built-in Python type is called a 'list' and not an array. It is an ordered container of arbitrary length that can hold a heterogeneous collection of objects (i.e. types do not matter). This should not be confused with the array module which offers a type closer to the C array type. However, the contents must be homogenous (all of the same type), but the length is still dynamic.This file contains some examples of array operations in NumPy.

arr = np.array( [ [0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0] ] )print(arr): output is

[[0 0 0 0] [0 1 1 0] [0 1 1 0] [0 0 0 0]]print(type(arr)) = <class 'numpy.ndarray'>

x = np.array([1.2, 2.3, 5.6]), x.astype(int) = array([1, 2, 6])

row_vector = array([[1, 3, 5]]) or np.r_['r', [1, 3, 5]] which has 1 row and 3 columns, col_vector = array([[2, 4, 6]]).T or np.r_['c', [2, 4, 6]] which has 3 rows and 1 column. Convert a row vector to column vector: col_vec = row_vec.reshape(row_vec.size, 1), or col_vec = row_vec.reshape(-1, 1) where -1 automatically finds the value of row_vec.size. Convert a column vector to row vector: row_vec = col_vec(1, -1). Examples:a = np.linspace(1, 5, num=5) > print(a) >> [1. 2. 3. 4. 5.] print(a.shape) >> (5,) print(a.reshape(-1, 1)) [[1.] [2.] [3.] [4.] [5.]] print((a.reshape(-1, 1)).shape) >> (5, 1) x = np.array([1,2,3,4,5]) > print(x) >> [1 2 3 4 5]. print(x.shape) >> (5,) x = np.array([[1,2,3,4,5]]) > print(x.shape) >> (1, 5) x = np.arange(1, 5, 1) > print(x) >> [1 2 3 4]. print(x.shape) >> (4,)2D operations

X = np.array([1, 2, 3]), Y = np.array([2, 4, 6]), X, Y = np.meshgrid(X, Y) Z = (X**2 + Y**2), print(Z) [[ 5 8 13] [17 20 25] [37 40 45]] i.e. Z = np.array([[5, 8, 13], [17, 20, 25], [37, 40, 45]])

Summary

Python interpreter is written in C language and that array library includes array of C language. A string is array of chars in C and hence an array cannot be used to store strings such as file names.

- Floating-point arithmetic always produces a floating-point result. Hence, modulo operator for integers and floating point numbers will yield different results.

- Python uses indentation to show block structure. Indent one level to show the beginning of a block. Outdent one level to show the end of a block. The convention is to use four spaces (and not the tab character even if it is set to 4 spaces) for each level of indentation. As an example, the following C-style code:

C Python if (x > 0) { if x: if (y > 0) { if y: z = x+y z = x+y } z = x*y z = x*y }Comments: Everything after "#" on a line is ignored. Block comments starts and ends with ''' in Python. - eye(N), ones(N) and zeros(N) creates a NxN identity matrix, NxN matrix having each element '1' and NxN matrix having each element '0' respectively.

- 'seed' number in random number generators is only a mean to generate same random number again and again. This is equivalent to rng(5) in MATLAB.

- linspace(start, end, num): num = number of points. If omitted, num = 100 in MATLAB/OCTAVE and num = 50 in numpy. Note that it is number of points which is equal to (1 + number of divisions). Hence, if you want 20 divisions, set num = 21. That is, increment Δ = (end - start) / (num - 1). Available in numpy, OCTAVE/MATLAB.

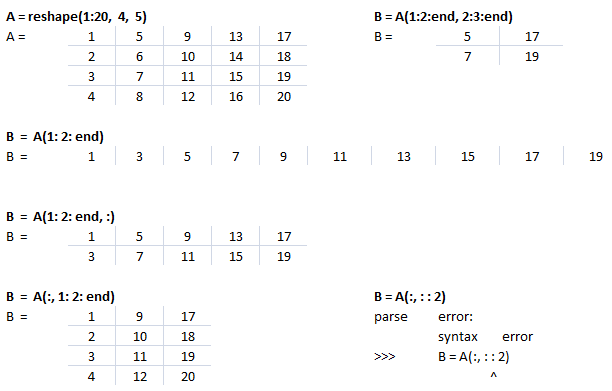

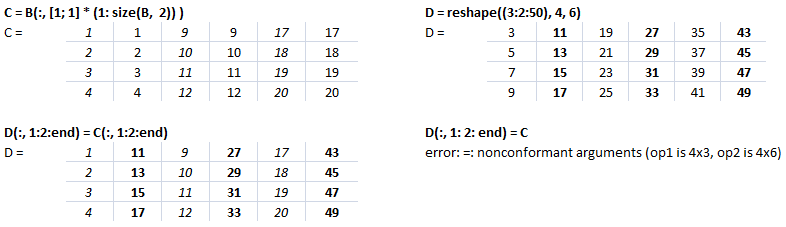

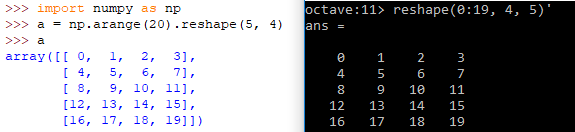

- linspace(1, 20, 20) = 1:20. And hence, reshape(1:20, 4, 5) is equivalent to reshape(linspace(1, 20, 20), 4, 5)

- Arrays: use space or commas to delimit columns, use semi-colon to delimit rows. In same ways, space can be used to stack arrays column-wise (hstack in numpy) e.g. C = [A B] and semi-colon can be used to stack arrays row-wise (vstack in numpy) e.g. C = [A; B].

- In numpy, if x is a 2D array then x[0,2] = x[0][2]. NumPy arrays can be indexed with other arrays. x[np.array([3, 5, 8])] refers to the third, fifth and eighth elements of array x. Negative values can also be used which work as they do with single indices or slices. x[np.array([-3, -5, -8])] refers to third, fifth and eighth elements from the end of the vector 'x'.

- Negative indexing to call element of an array or a matrix is not allowed in OCTAVE/MATLAB. In case negative counters of a loop is present e.g. j = -n:n, use i = 1:numel(j) to access x(i).

Conditional and Logical Indexing

u(A < 25) = 255 replaces the elements of matrix 'u' to 255 corresponding to elements of A which is < 25. If A = reshape(1:20, 4, 5) then B = A(A>15)' will yield B = [16 17 18 19 20]. B = A; B(A < 10) = 0 will replace all those elements of matrix A which are smaller than 10 with zeros.B = A < 9 will produce a matrix B with 0 and 1 where 1 corresponds to the elements in A which meets the criteria A(i, j) < 9. Similarly C = A < 5 | A > 15 combines two logical conditions.

Find all the rows where the elements in a column 3 is greater than 10. E.g. A = reshape(1:20, 4, 5). B = A(:, 3) > 10 finds out all the rows when values in column 3 is greater than 10. C = A(B:, :) results in the desired sub-matrix of the bigger matrix A.vectorization

This refers to operation on a set of data (array) without any loop. Excerpts from OCTAVE user manual: "Vectorization is a programming technique that uses vector operations instead of element-by-element loop-based operations. To a very good first approximation, the goal in vectorization is to write code that avoids loops and uses whole-array operations". This implicit element-by-element behaviour of operations is known as broadcasting.Summation of two matrices: C = A + B

for i = 1:n

for j = 1:m

c(i,j) = a(i,j) + b(i,j);

endfor

endfor

Similarly:

for i = 1:n-1 a(i) = b(i+1) - b(i); endforcan be simplified as a = b(2:n) - b(1:n-1)

If x = [a b c d], x .^2 = [a2 b2 c2 d2]

The vector method to avoid the two FOR loops in above approach is: C = A + B where the program (numPy or OCTAVE) delegates this operation to an underlying implementation to loop over all the elements of the matrices appropriately.

Slicing

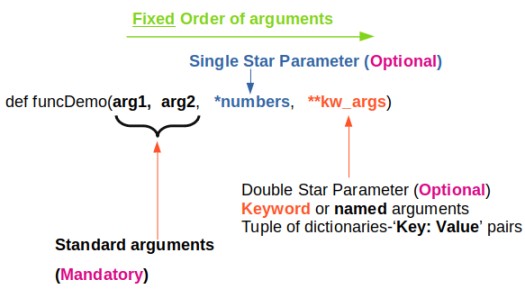

This refers to the method to reference or extract selected elements of a matrix or vector. Indices may be scalars, vectors, ranges, or the special operator ':', which may be used to select entire rows or columns. ':' is known as slicing object. The basic slicing syntax is "start: stop: step" where step = increment. Note that the 'stop' value is exclusive that is rows or columns will be included only up to ('stop' - 1). In NumPy (and not in OCTAVE), any of the three arguments (start, stop, step) can be omitted. Default value of 'step' = 1 and default value of 'start' is first row or column. In NumPy (not in OCTAVE) an ellipsis '...' can be used to represent one or more ':'. In other words, an Ellipsis object expands to zero or more full slice objects (':') so that the total number of dimensions in the slicing tuple matches the number of dimensions in the array. Thus, for A[8, 13, 21, 34], the slicing tuple A[3 :, ..., 8] is equivalent to A[3 :, :, :, 8] while the slicing tuple A[..., 13] is equivalent to A[:, :, :, 13]. Special slicing operation A[::-1] reverses the array A. Note that even though it is equivalent to A[len(A)-1: -1: -1], the later would produce an empty array.

Slicing to crop an image: img_cropped = img(h0: h0+dh, w0: w0+dw) can be used to crop an image (which is stored as an array in NumPy). This one line code crops an array by number of pixels w0, h0, dw and dh from left, top, right and bottom respectively. Similarly, the part of an array (and hence an image) can be replaced with another image in this one line of code: img_source(h0: h0 + img_ref.shape[0], w0: w0 + img_ref.shape[1]) = img_ref -> here the content of image named img_ref is replaced inside img_source with top-left corner placed at w0 and h0 in width and height directions respectively.Slicing against column: B = A(3:2:end, :) will will slice rows starting third row and considering every other row thereafter until the end of the rows is reached. In numpy, B = A[:, : : 2] will slice columns starting from first column and selecting every other column thereafter. Note that the option ': : 2' as slicing index is not available in OCTAVE.

Let's create a dummy matrix A = reshape(1:20, 4, 5) and do some slicing such as B = A(:, 1:2:end).

This text file contains example of Slicing in NumPy. The output for each statement has also been added for users to understand the effect of syntaxes used. There is a function defined to generate a sub-matrix of a 2D array where the remaining rows and columns are filled with 255. This can be used to crop a portion of image and filling the remaining pixels with white value, thus keeping the size of cropped image of size as the input image.

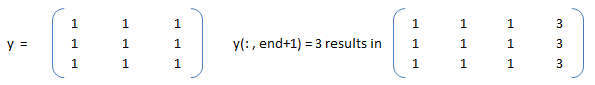

Arrays: Example syntax and comparison between OCTAVE and NumPy

| Usage | GNU OCTAVE | Python / NumPy |

| Definition | A = reshape(0:19, 4, 5)' | A = numpy.arange(20).reshape(5, 4) |

| Reshape example |  | |

| A(3) | Scalar - single element | - |

| A[3] | Not defined | Same as A[3, :]: 4th row of matrix/array |

| Special arrays | zeros(5, 8), ones(3,5,"int16") | np.zeros( (5, 8) ), np.ones( (3, 5), dtype = np.int16) |

| Create array from txt files | data = dlmread (fileName, ".", startRow, startCol) | np.genfromtxt(fileName, delimiter=",") |

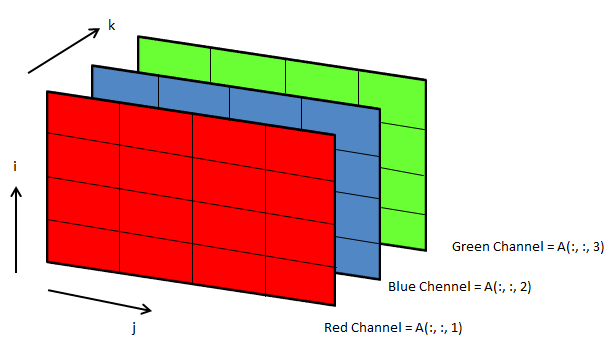

3D arrays: widely used in operations on images

Following OCTAVE script can be used to convert the background colour of an image from black to white.

clc; clear; clear all; [x, map, alpha] = imread ("Img.png"); [nR nC nZ] = size(x);

A = x(:, :, 1); B = x(:, :, 2); C = x(:, :, 3); i = 40; u = A; v = B; w = C;

u(A<i & B<i & C<i) = 255; v(A<i & B<i & C<i) = 255; w(A<i & B<i & C<i) = 255;

z = cat(3, u, v, w); imwrite(z, "newImg.png"); imshow(z);

File Operations in Python

- List files and folders: dirpath, subdirs, files = os.walk(root_folder) - os.walk returns a three-tuple (dirpath, dirnames, filenames) where dirpath is nothing but the top-level root folder specified, subdirs = sub-directories below root folder. Thus, "for dirpath, subdirs, files in os.walk(root_folder)" will create one outer loop (list or names of sub-folders) and an inner loop (list or names of files in each sub-folder).

- As per user doc: "Note that the names in the lists contain no path components. To get a full path (which begins with top) to a file or directory in dirpath, do os.path.join(dir_path, file_name). Whether or not the lists are sorted depends on the file system."

- Sort a list: files.sort(), to list them in numeric order: sorted(files, key=int). os.walk() yields in each step what it will do in the next step(s).

- Find number of folders: n_dirs = sum([ len(dirs) for root, dirs, files in os.walk(root_folder) ])

- Number of files: n_files = sum([ len(files) for root, dirs, files in os.walk(root_folder) ])

- Check if a file exists: os.path.isfile(fileName)

- Check if a folder exists: os.path.isdir(str(dirPath))

- Get file extension: f_extn = fileName.partition('.')[-1]

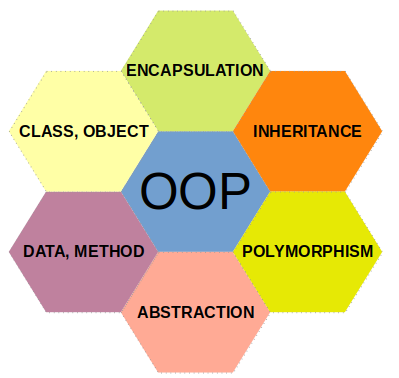

Procedural or Functional Programming vs. Object Oriented Programming - Functional programs tend to be a bit easier to follow than OOP which has intricate class hierarchies, dependencies and interactions. From learn.microsoft.com titled getting-started-with-vba-in-office: "Developers organize programming objects in a hierarchy, and that hierarchy is called the object model of the application. The definition of an object is called a class, so you might see these two terms used interchangeably. Technically, a class is the description or template that is used to create, or instantiate, an object. Once an object exists, you can manipulate it by setting its properties and calling its methods. If you think of the object as a noun, the properties are the adjectives that describe the noun and the methods are the verbs that animate the noun. Changing a property changes some quality of appearance or behavior of the object. Calling one of the object methods causes the object to perform some action."

A related concept is namespace which is a way of encapsulating items. Folders are namespace for files and other folders.

As a convention, an underscore _ at the beginning of a variable name denotes private variable in Python. Note that it is a convention as the concept of "private variables" does not exist in Python.

Class definition like function 'def' statement must be executed before used.#!/usr/bin/env python3

import math

class doMaths(): # definition of a new class

py = 3.1456 # can be accessed as doMaths.py

# Pass on arguments to a class at the time of its creation using

# __init__ function.

def __init__(self, a, b):

# Here 'self' is used to access the current instance of class, need

# not be named 'self' but has to be the first parameter of function

# Define a unique name to the arguments passed to __init__()

self.firstNum = a

self.secondNum = b

self.sqr = a*a + b* b

self.srt = math.sqrt(a*a + b*b)

print(self.sqr)

def evnNum(self, n):

if n % 2 == 0:

print(n, " is an even number \n")

else:

print(n, " is an odd number \n")

# Create an INSTANCE of the class doMaths: called Instantiate an object

xMath = doMaths(5, 8) # Output = 89

print(xMath.firstNum) # Output = 5

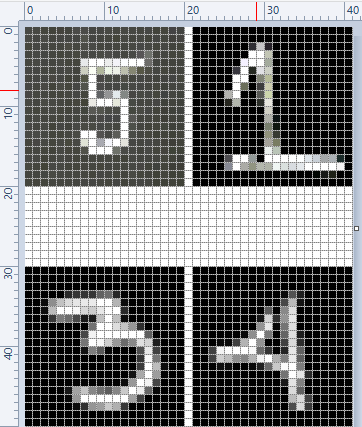

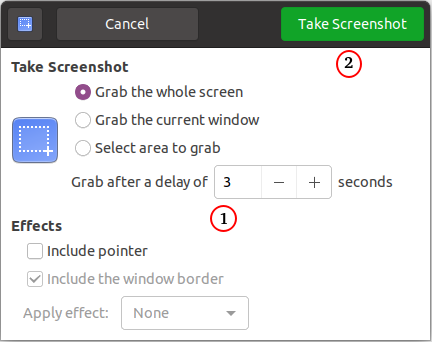

print(xMath.sqr) # Output = 89