- CFD, Fluid Flow, FEA, Heat/Mass Transfer

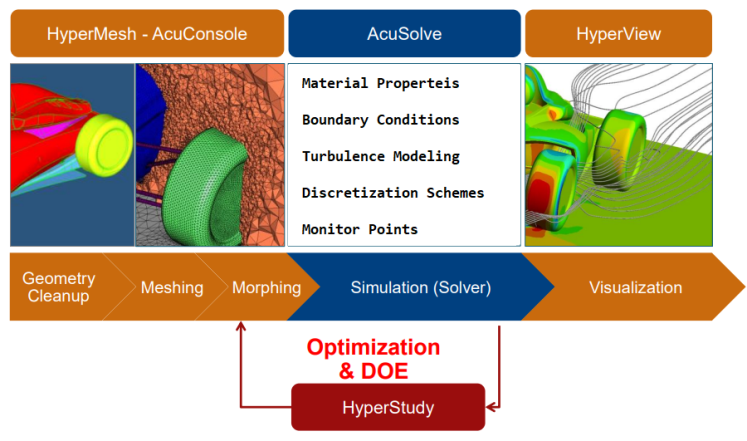

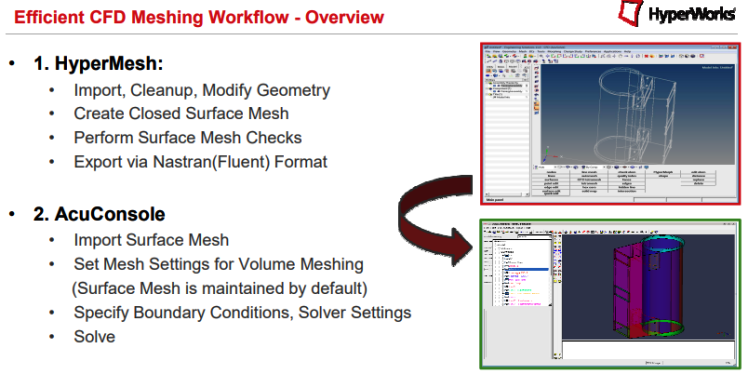

SOLVER SETTING FOR CFD SIMULATIONS

Solver Settings

Type of Solvers and Solution Control Parameters

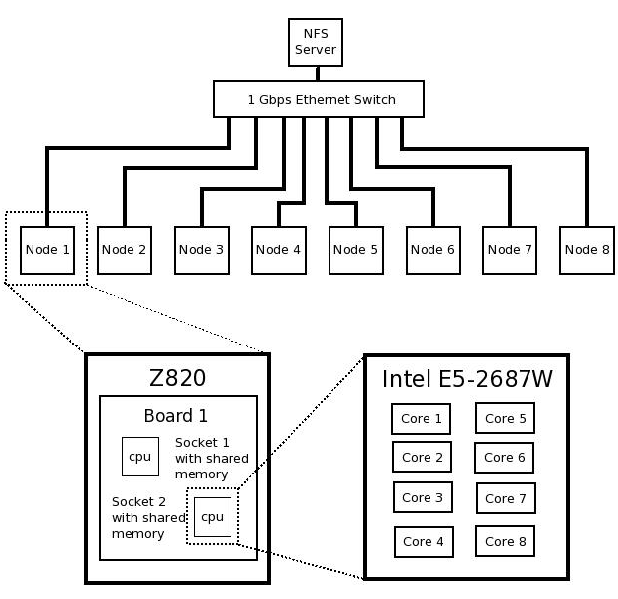

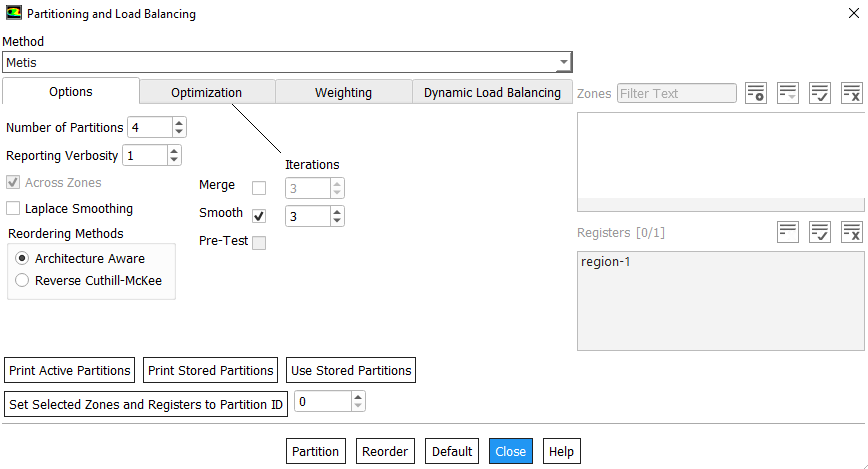

This section deals with solution controls for solvers including topics like CFL Number, Time-step for Transient Simulations, Psuedo-time Marching, Parallel Computing, Nodes and Cluster, HPC - High Performance Computing, Threading, Partitioning, MPI - Message Passing Interface and Scalability.

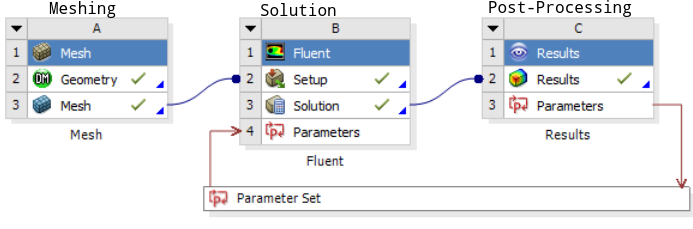

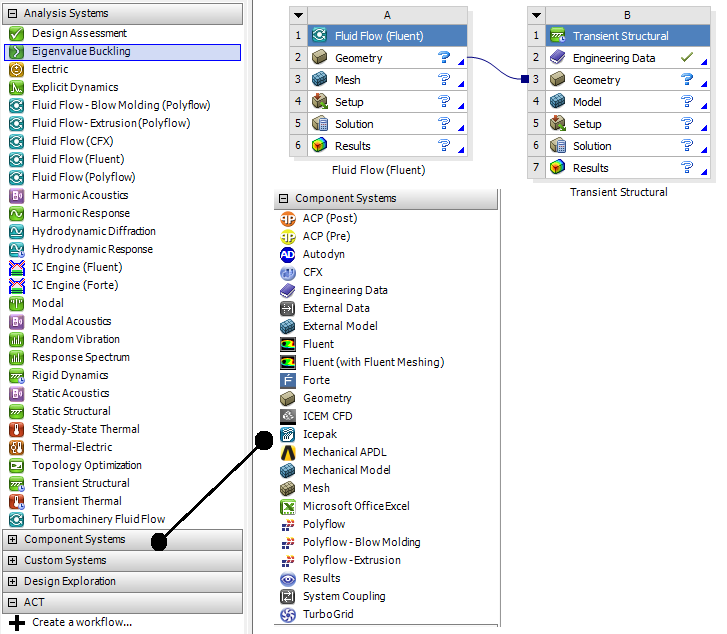

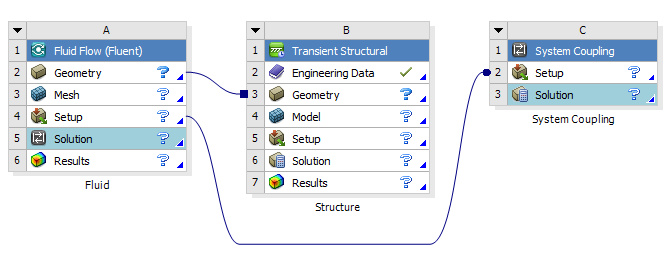

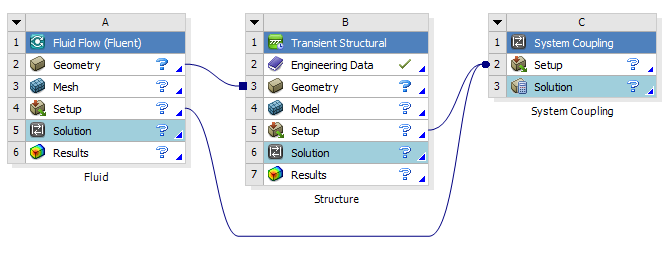

Table of Contents: Solver Settings for Multi-Phase Flows | Porous Domains | Fluid-Structure Interaction in ANSYS WB | Parametric Study in FLUENT | Type of Particle Injections |-| Material Properties |*| TUI Commands for Particle Injection | Transient Table as Boundary Condition in FLUENT | Parallel Processing | Mass Fraction to Volume Fraction | Solver Setting for Transient Simulations | Troubleshooting Convergence in FLUENT | Convergence History | Mark Bad Cells | Non-Newtonian Fluids |:| Material Properties as Tabulated Data

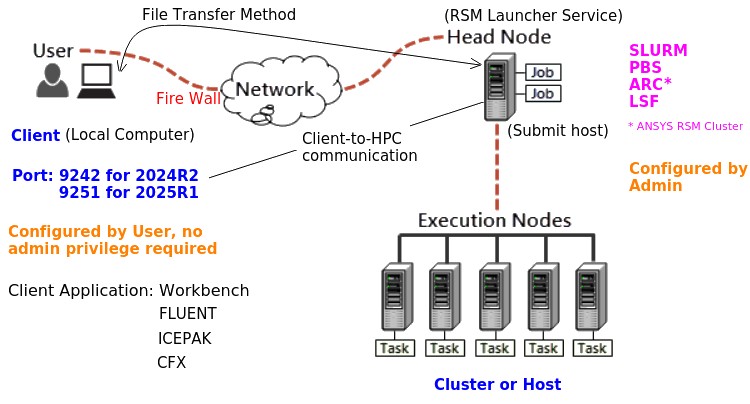

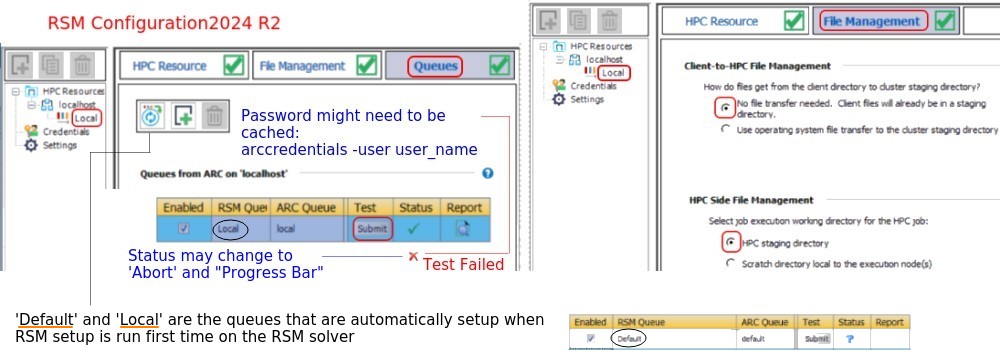

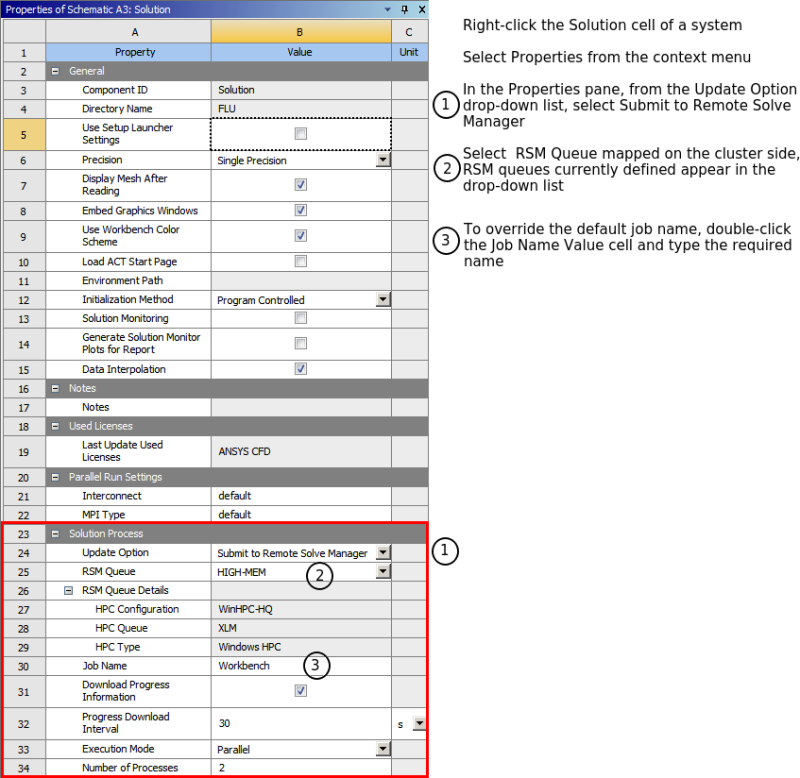

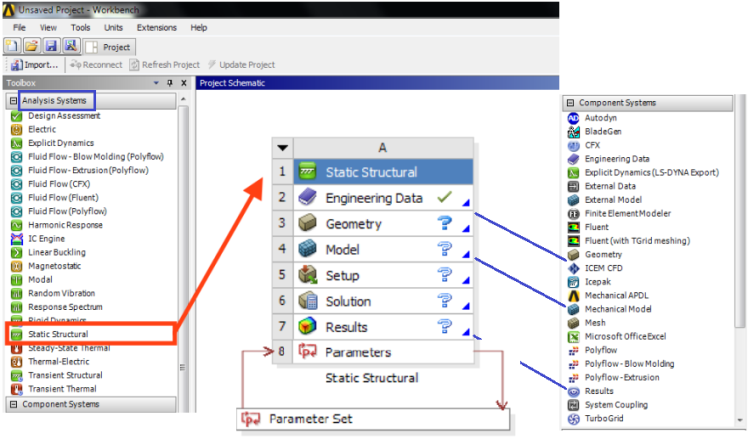

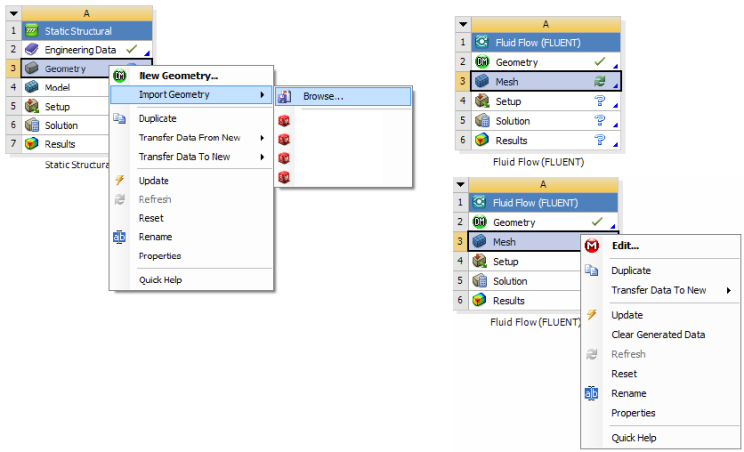

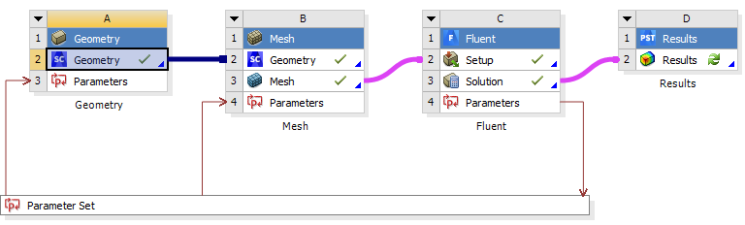

Interpolation of Results | Animation in ANSYS Fluent | Multiple Runs in a Sequence: STAR-CCM+ and CFX | Material Definition in Text File | Single Precision vs. Double Precision |:| SPH: Smoothed-Particle Hydrodynamics |=| Dashboard for Utilization of Clusters |-| Ansys Remote Solve Manager ]=[ Checkpoint - Save Intermediate Results ]=[ ANSYS Workbench [=] Parametric DOE and Reduced Order Modeling [*] Starting FLUENT in batch mode [\/] Update Design Points using Script

Any program does only one thing: "Solves exactly what one tells it to solve and how to solve, nothing else!"

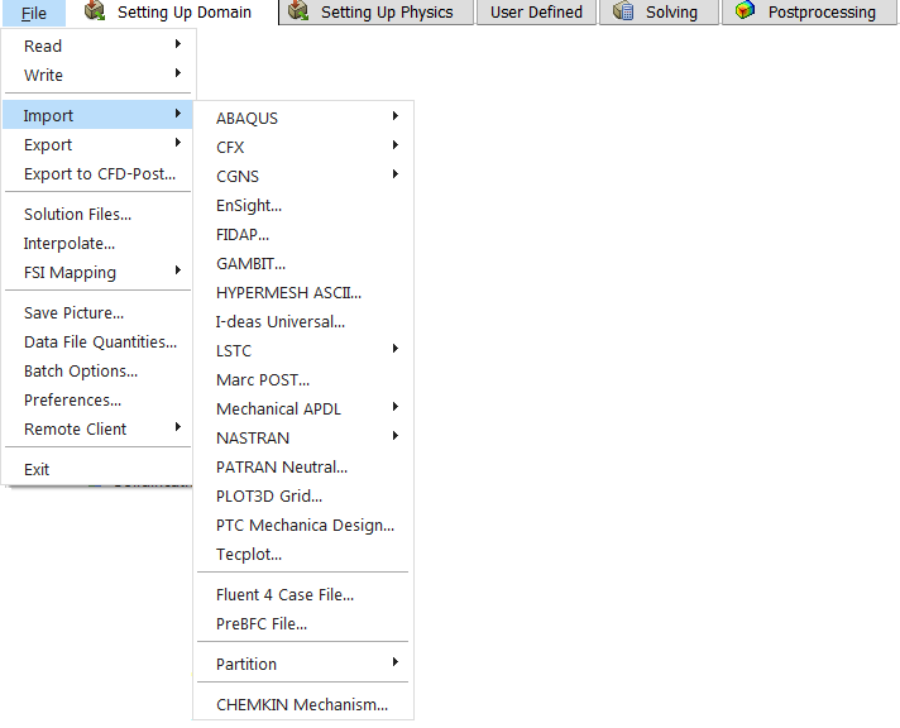

Before starting a Simulation Run

It is a waste of time and resources when you find that some settings were not correct after runs were complete. Hence, a 2-tier check is mandatory for First Time Right solution. The final check should be made on the summary report generated by the software. In ANSYS FLUENT, a simple text file can be printed using TUI report/summary or file/write-settings. For STAR-CCM+ use the feature File > Summary Report > To File... For scripts to further summarize the information, refer here. In CFX-Pre, there is option to write CCL files only for select boundaries.Before you proceed to set solver controls

Check mesh quality for orthogonality (must be ≥ 0.10) and aspect ratio (must be ≤ 50). In case these limits are crossed, go back to the mesh generator and bring the mesh quality in the limit. In ANSYS FLUENT, you need to set verbosity level to get desired mesh quality information.

From user guide: /mesh/check-verbosity - "Set the level of details that will be added to the mesh check report generated by mesh/check. A value of 0 (the default) notes when checks are being performed, but does not list them individually. A value of 1 lists the individual checks as they are performed. A value of 2 lists the individual checks as they are performed, and provides additional details (for example, the location of the problem, the affected cells). The check-verbosity text command can also be used to set the level of detail displayed in the mesh quality report generated by mesh/quality. A value of 0 (the default) or 1 lists the minimum orthogonal quality and the maximum aspect ratio. A value of 2 adds information about the zones that contain the cells with the lowest quality, and additional metrics such as the maximum cell squish index and the minimum expansion ratio."

Solver setting

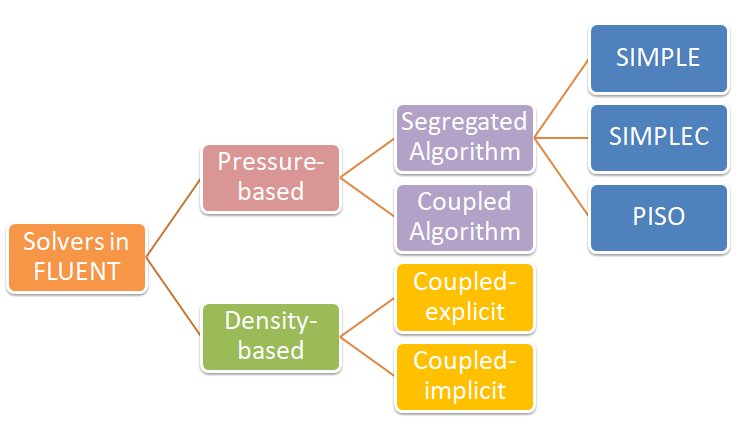

This activity of a CFD simulation process involves selection of solver type (pressure-based or density-based, stead-state or transient), selection of [turbulence model, spatial discretization scheme (upwind, central difference), temporal discretization scheme (forward Euler, Backward Euler, Crank-Nicolson scheme, explicit leapfrog method), relaxation factor, PV-coupling method, transient time-step for solver (outer loop iteration), number of inner loop iterations for transient simulations, intermediate output data back-up frequency], gravity ON/OFF, creation of monitor points and monitor planes... The purpose of all these settings is to get a numerical scheme which is consistent, stable and convergent. Here stability refers to the property when errors caused by small perturbations in numerical method remains bound.

Note that the field variables solved during CFD simulation are velocity vector, pressure, temperature (enthalpy). The density at each cell is calculated from equation of state (ideal gas law or real gas laws like Redlich-Kwong and IAPWS equation of state for steam). Note that the "compressible flows" and "compressible gas" are not the same concepts. Compressible flows are characterized by Mach number of the flow whereas compressible behaviour of fluid refers to equation of state such as ideal gas law, essential change in density due to change in pressure and temperature.

Checklist of Solver Settings including Boundary Conditions

| No. | Checkpoint | Record (Y / N / NA) |

| 01 | Has the basic checks been made: scale of mesh, quality, default interfaces settings (CFX may create unwanted interfaces)? Check mesh statistics and compare file size with some reference case. | |

| 02 | Has the density, viscosity and thermal conductivity of fluid been correctly assigned as per expected variation in operating temperature and pressure? Constant vs. function of temperature. | |

| 03 | Has the auto-save frequency and file name correctly defined? For runs on clusters, specify only file name without full path and applies to all other input files. | |

| 04 | For transient simulations, have the specific heat capacity and density of solids been correctly assigned? | |

| 05 | Has the relaxation factors for k, ε and turbulent viscosity been reduced to value lower than 1.0 say 0.25 or 0.50? | |

| 06 | Has the convergence criteria been set to low value such as 1e-05 or lesser? Run may stop early if set to higher number such as 1e-3. | |

| 07 | Has the discretization schemes set to first order for initial 500 ~ 1000 iterations? Gradually move to second order. | |

| 08 | Has the monitor points been created for global mass imbalance and some quantitative estimates such as Δp, mass flow rate and mean velocity at inlet or outlet? | |

| 09 | Is a monitor point created to calculate running average of last 100 iterations? This helps when there are fluctuations in residuals and monitor points. | |

| 10 | Has a monitor for heat transfer through all walls been created? Do not include inlets and outlets. | |

| 11 | Have the interfaces of porous and fluid domains been changed to type 'internal'? | |

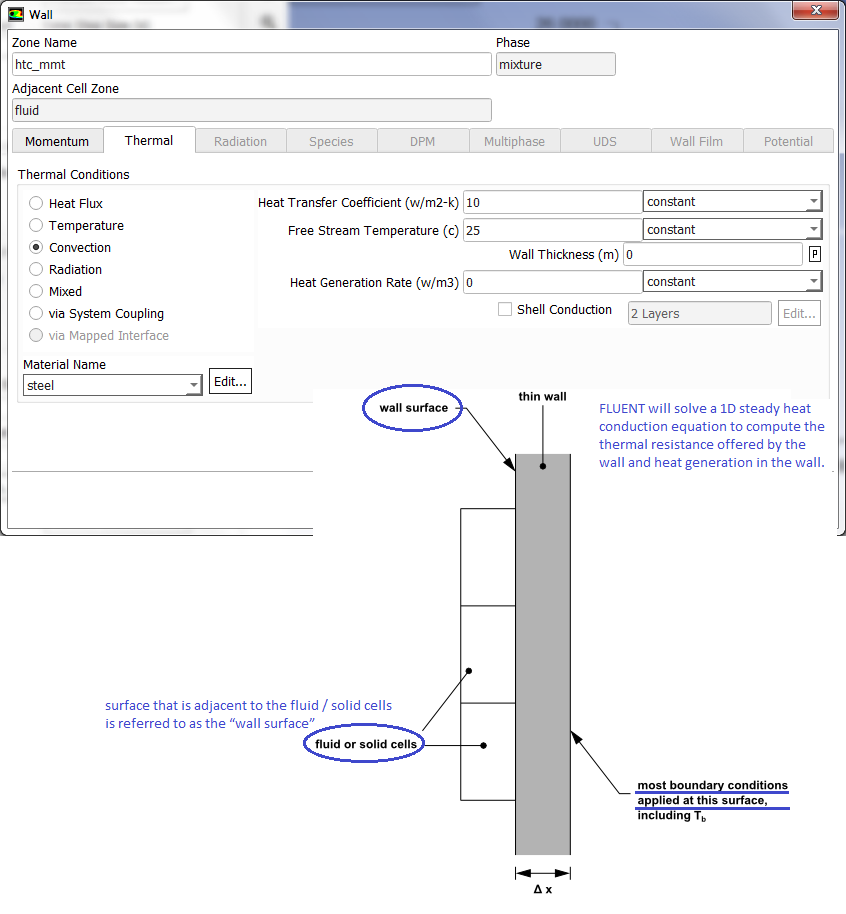

| 12 | Have the shadow walls checked and changed to coupled? Changing them to type Heat Flux or Temperature will decouple the two walls. | |

| 13 | Have the roughness been applied for applicable walls? Check for direction of rotating domains and wall rotation if applicable. | |

| 14 | Has the emissivity been applied to correct wall (part of solid zone) of a shadow wall pair? Specially important for FLUENT where a shadow wall may be part of fluid domain. | |

| 15 | In FLUENT, have contour plots been created before making the runs? This helps avoid repeating the process while setting up different cases with same geometry. | |

| 16 | For parametric analyses, was a naming convention developed and the set-up file named consistently? While naming set-up, include few parameters, geometrical as well as boundary / solver data. |

Notes:

- Segregated solvers are fast but less stable than coupled solvers. Segregated solvers is more likely to diverge even with low relaxation factors. Sometimes, the residuals (especially continuity and momentum) may not drop below certain value with segregated solver. Hence, change the P-V coupling to coupled solver and the residual levels drop significantly. Note that this may or may not result in significant improvement of field values (u, v, w and p).

- For multiple inlets and multiple outlets, it is better to use coupled (pseudo transient) solvers.

- Do not reduce under-relaxation for energy less than 0.95, convergence of energy equation slows down significantly.

- FLUENT creates shadow zones with extension :external for all walls on which shell conduction is enabled. This is available only for post-processing operations.

- The TUI option to set under-relaxation factor is different for coupled (solve set pseudo-relaxation-factor) and segregated (solve set relaxation-factor) P-V-coupling in FLUENT.

- Pressure jump and fan boundary conditions can be used only for small values say 100 ~ 500 [Pa]. It cannot be used to model large pressure head such as 10 [kPa] with ideal gas.

- Switch OFF the default interface creation option in CFX. It will merge zero thickness walls into interior if fluid zones exists on the two sides.

- Refer to this page to get automation scripts and TUI commands.

- If you get high number of 'incomplete' trajectories of particles in DPM even with very high number of steps, the reason could be 'rebound' settings at the outlets.

Excerpts from user manuals of commercial tools

- Smaller physical time-steps are more robust than larger ones.

- An 'isothermal' or cold-flow simulation is more robust than modeling heat transfer. The Thermal Energy model is more robust than the Total Energy Model.

- Velocity or mass specified boundary conditions are more robust than pressure specified boundary conditions. Static pressure boundary is more robust than a total pressure boundary.

- If the characteristic time scale is not simply the advection time of the problem, there may be transient effects holding up convergence. Heat transfer or combustion processes may take a long time to convect through the domain or settle out. There may also be vortices caused by the initial guess, which take longer to move through the entire solution domain. In these cases, a larger time step may be needed to push things through initially, followed by a smaller time step to ensure convergence on the small time scale physics. If the large time step results in solver instability, then a small time scale should be used and more iterations may be required.

- Sometimes the levels of turbulence in the domain can affect convergence. If the level of turbulence is non-physically too low, then the flow might be "too thin" and transient flow effects may be dominating. Conversely if the level of turbulence is non-physically too high then the flow might be "too thick" and cause unrealistic pressure changes in the domain. It is wise to look at the Eddy Viscosity and compare it to the dynamic (molecular) viscosity. Typically the Eddy Viscosity is of the order of 1000 times the dynamic viscosity, for a fully turbulent flow.

- The 2nd Order High Resolution advection scheme has the desirable property of giving 2nd order accurate gradient resolution while keeping solution variables physically bounded. However, may cause convergence problems for some cases due to the non-linearity of the Beta value. If you are running High Res and are having convergence difficulty, try reducing your time step. If you still have problems converging, try switching to a Specified Blend Factor of 0.75 and gradually increasing the Blend Factor to as close to 1.0 as possible.

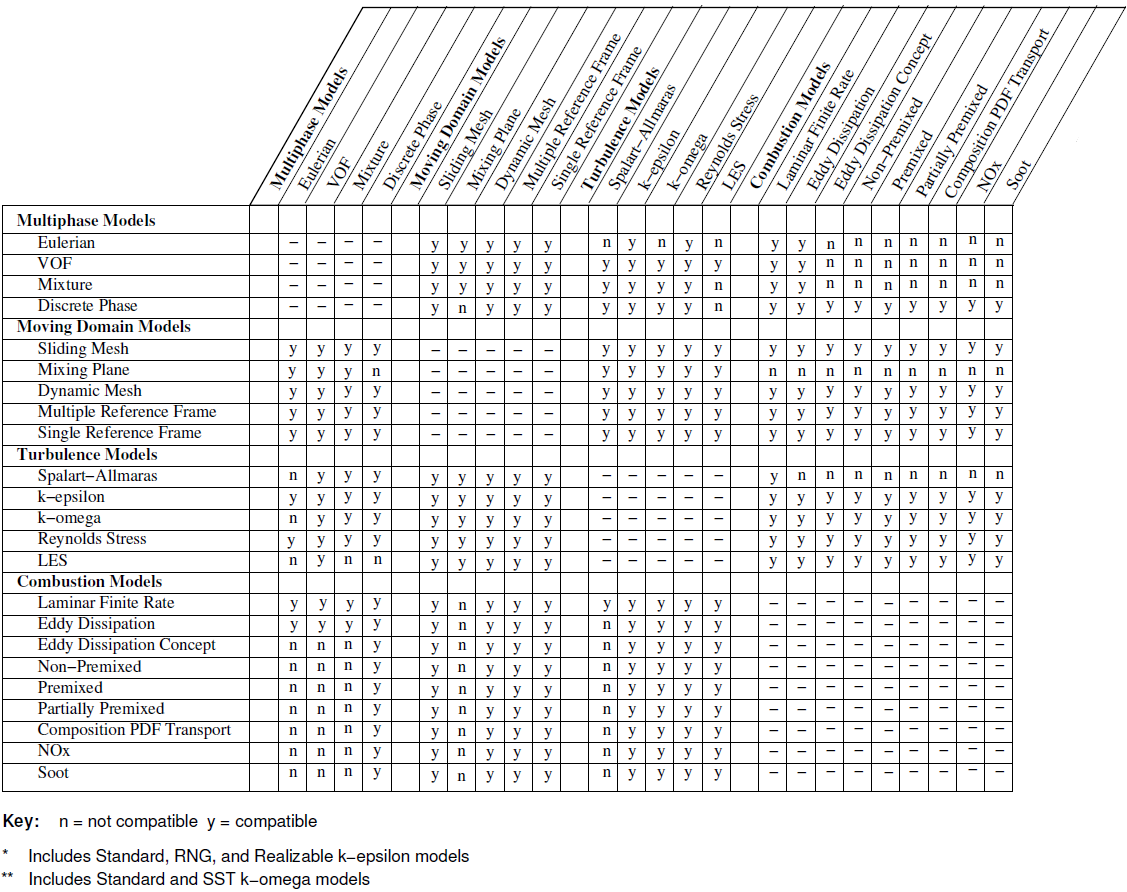

Compatibility of several ANSYS FLUENT model with underlying physics and turbulence model are tabulate below (reference ANSYS FLUENT User's Guide)

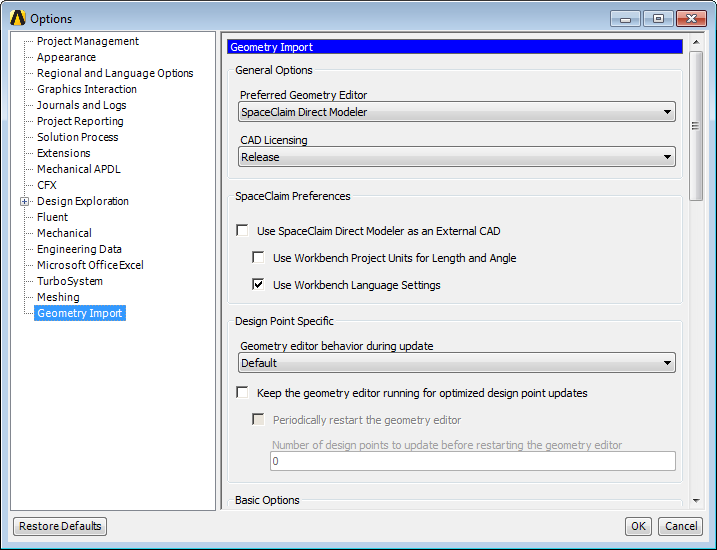

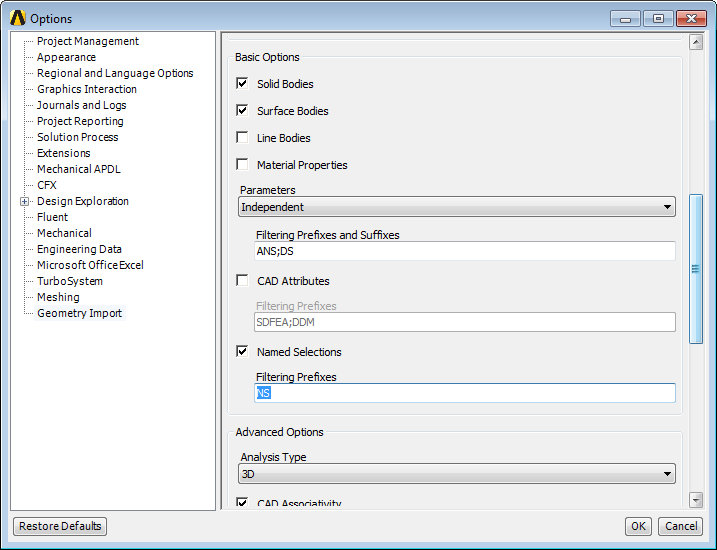

Default Settings

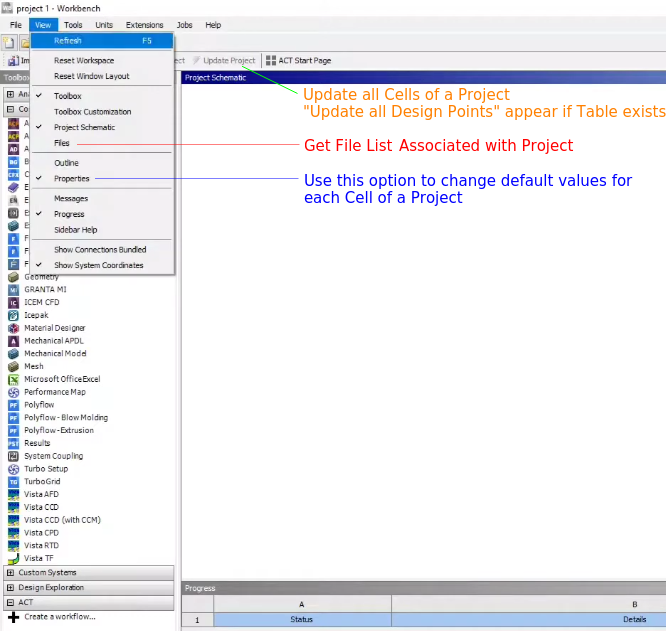

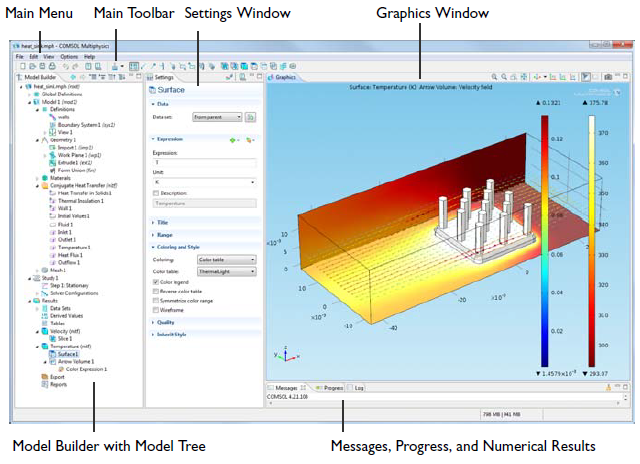

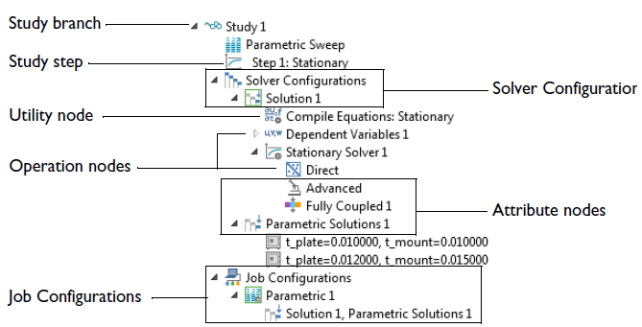

The GUI of solvers at start-up comprises of layout (of various windows), default value of material properties, number of contour bands, number of decimal places, a specific license configuration, parallel configuration (such as n-2 cores of the machine), working directory, solution backup interval, units, default view of graphics windows... Most of the programs have a text file stored which can be edited to change the default behaviour. For example: STAR-CCM+ uses a folder C:\Users \UserName \AppData \Local \CD-adapco \STAR-CCM+ <version> - deleting this folder shall reset both the GUI and other custom settings in Simcenter STAR-CCM+. Sometimes, GUI can be used to reset the GUI such as in STAR-CCM+ use: Tools > Reset Options > Window Layouts. To reset the GUI settings using a command prompt, type "starccm+.exe -reset".

Similarly, When FLUENT opens it auto executes the .fluent file in the "home directory" of Linux users, C:\ directory on Windows machines. A .fluent file can contain any number of scheme file names to load. Unless full path is specified for each scheme file in .fluent file, FLUENT tries to locate the scheme files from the working directory. Some users create a SCHEME journal file as per their needs and manually run it in TUI: file/read-journal after FLUENT session has opened, or using typically just 5 button clicks in the GUI.

To change default values in ANSYS CFD-Post, for example changing the Default Number of Contours from 11 to 16, or changing the Default Range choice from Global to Local: the file <ANSYS Inc>/CFD-Postetc/CFXPostRules.ccl needs to be edited. E.g. %3.0f can be used instead of %10.3e to change as Fixed and 0 decimal place.

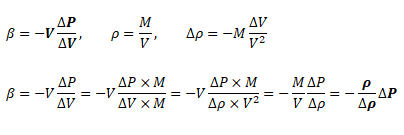

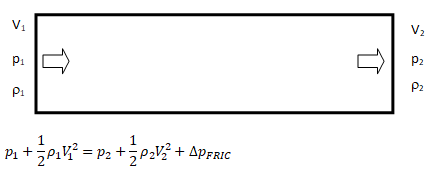

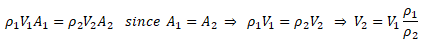

Compressibility of Fluids

One of the key consideration is the compressible or incompressible behaviour of the fluid. For liquids under lower pressure conditions (except cases such as hydraulics, deep bore drilling in oil exploration...), they can be assumed incompressible without loss of any accuracy. The compressibility chek for liquids need to be decided based on bulk modulus, operating pressures and coefficient of volume expansion.. The later varies between 0.001 ~ 0.010 [K-1]. The bulk modulus is defined as β = -V × ΔP/ΔV where negative sign refers to the fact that volume decreases as pressure increases. Thus, in terms of density, β = -ρ/Δρ × ΔP. β for water is 2.2 [GPa]. Hence, at pressure of 100 [MPa], the change in water density as compared to density at 1 [atm] would be 1000 [kg/m3] × 0.1 [GPa] / 2.2 [GPa] = 45.5 [kg/m3] which is 4.55% of density of water at 1 [atm].

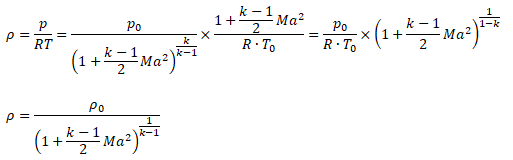

While the change in density of liquids is more pronounced with change in temperature, the situation is different in cases of gases where density is a strong function of both temperature and pressure changes. Note that the density is calculated based on static conditions (static temperature and static pressure) and not on total conditions (total temperature and total pressure). On the other hand, the stagnation conditions are the true measure of pressure energy and internal energy. There are two modes of reduction in stagnation (or total) pressure: the first mode is due to conversion of pressure energy into velocity (kinetic) energy and the second mode is loss of pressure energy due to wall friction and minor losses (sudden expansion and contraction). The general equations to estimate stagnation and static conditions for all values of flow velocities are described below.

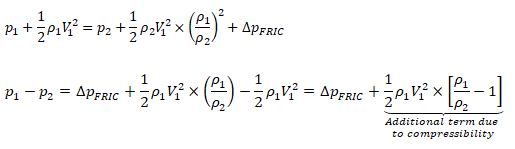

The operating pressure is used to specify the mean pressure in the system. Though it can be adjusted to convert the gauge pressure into absolute pressure. That is, the operating pressure can be set to zero to get the pressure field directly in absolute values. This is the recommended method for cases involving phase change such as simulation of cavitation in pumps. Note that cavitation occurs when local absolute static pressure in the flow field falls below saturation pressure at given temperature. However, once the operating pressure is set to 0 [Pa], the boundary conditions should also be specified in absolute values, e.g. 1,01,325 [Pa] instead of 0 [Pa].

ANSYS FLUENT provides an option of incompressible-ideal-gas available in ANSYS FLUENT makes the density a function of temperature only. This option does not take into account change in density due to variation of pressure and hence should be used when temperature variations are large but pressure variations are small. How to solve isothermal flows with significant variation in pressure? One option is to use Ideal Gas and turn off the energy equation. Thus, temperature shall remain constant and pressure field is anyway solved by momentum equation. Another option is to use an UDF to define density as a function of pressure and constant temperature.

Near atmospheric pressure, and in non-vacuum systems, the continuum theory (called macroscopic in nature) accurately describes the properties of gases when collisions between gas molecules determines the properties of a gas. Under vacuum conditions, gas molecules become so sparse that inter-molecular collisions no longer dominates, and the collisions between gas molecules and the chamber walls become determining factor affecting the gas properties. Air which contains 78% nitrogen, the Knudsen number based on mean free path of nitrogen at 1,000 [Pa] pressure is approximately 0.00034 [m] / 0.025 [m] = 0.014. Thus, the continuum hypothesis shall not hold true for air if pressure reduces close to 1,000 [Pa] which is approximately 100 times less than atmospheric pressure.

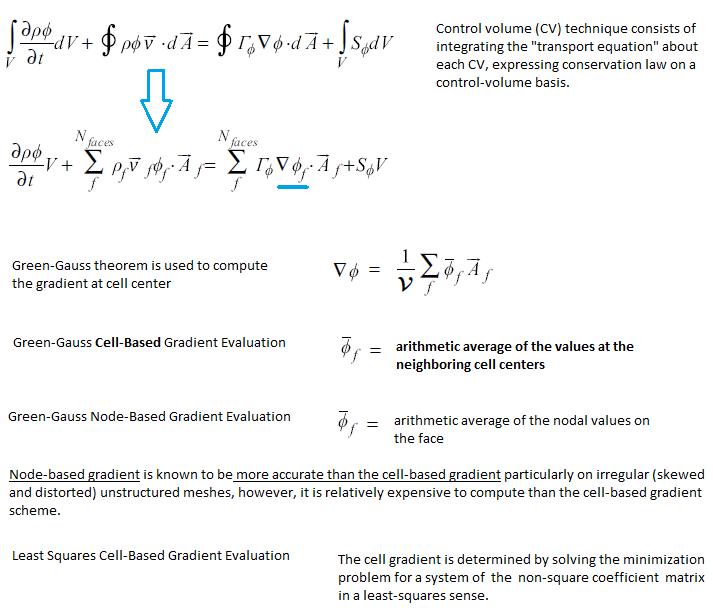

The solver-setting process encompasses following aspects of numerical solutions.

- Discretization scheme for momentum, pressure, energy and turbulence parameters: The PRESTO (Pressure Staggering Option) is recommended for pressure because in flows with high swirl numbers.

- PV-Coupling such as SIMPLE, SIMPLEC, SIMPLER, PISO

- Gradient schemes: Green-Gauss node based or Green-Gauss cell-based.

On irregular (skewed and distorted) unstructured meshes, the accuracy of the least-squares gradient method is comparable to that of the node-based gradient (and both are much more superior compared to the cell-based gradient).

- Conservation target and residual levels for convergence criteria. In addition to the "residual norm", it is strongly recommended to set "mass-conservation" or "energy-conservation" as convergence check.

- Fluid time-scales

- Solid time-scales (in case of conjugate heat transfer or pure conduction problems). The recommended practice is to have solid time-scale set to an order of magnitude (10 times) higher than fluid time-scale.

- One should ensure that the solution is mesh-independent and use mesh adaption to modify the mesh or create additional meshes for the mesh-independence study. A mesh-sensitive result confirms presence of "False Diffusion".

- The node-based averaging scheme is known to be more accurate than the default cell-based scheme for unstructured meshes, most notably for triangular and tetrahedral meshes.

- Note that for coupled solvers which solves pseudo-transient equations even for steady state problems (such as CFX), relaxation factors are not required to be set. The solver applies a false time step as the convergence process is iterated towards final solution.

- QUICK or Geo-Reconstruct scheme for the volume fraction in FLUENT

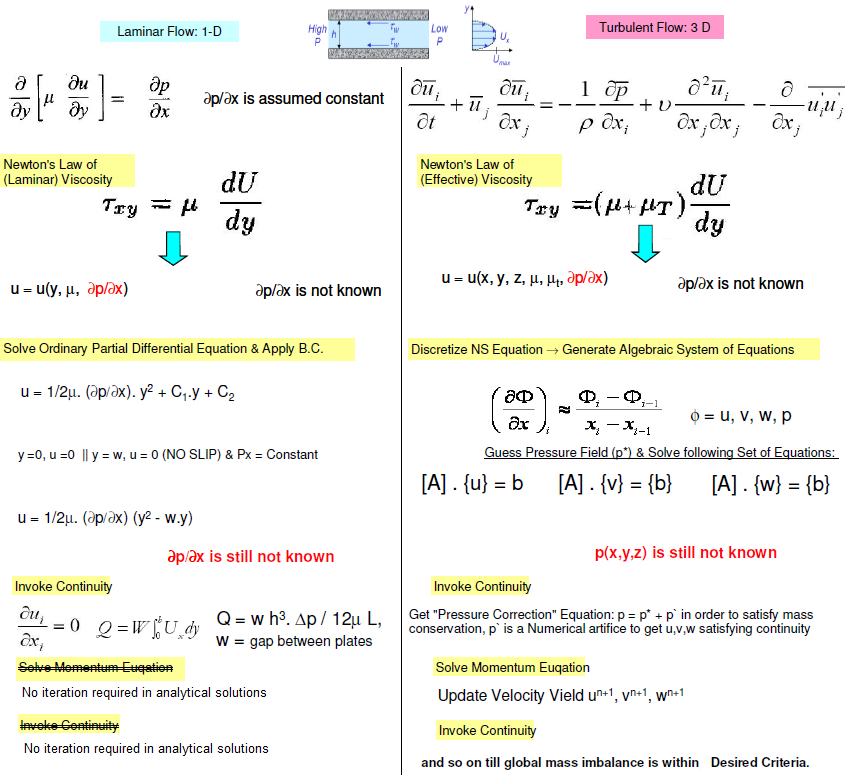

SIMPLE Algorithm: Analogy between analytical solution and numerical simulation

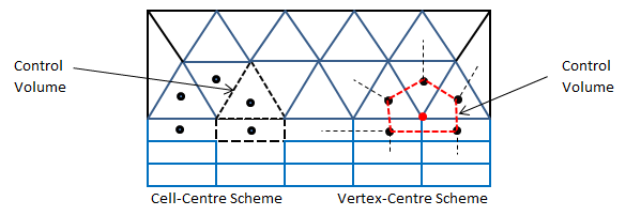

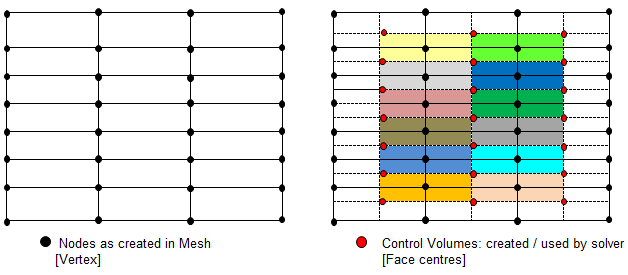

Differences between collocated (non-staggered) and staggered grid layout

- For collocated solvers (such as ANSYS CFX), control volumes are identical for all transport equations, continuity as well as momentum.

- CFX is a node-based solver and constructs control volumes around the nodes from element sectors, thus the number of control volumes is equal to the number of nodes and not the number of elements. The input of CFX may be tetrahedron, prism and hexhedron in physical form, the solver internally generates a polyhedral mesh. In a cell centered code, such as FLUENT or STAR-CD / STAR-CCM+, number of elements are the same as the number of control volumes (as the control volumes are same as physical elements) so these are often used interchangeably.

- Node-based Solvers: All field (or unknown or solution) variables as well as fluid properties are stored at the nodes (the vertices on the mesh).

- The distinction between collocated and staggered approach is nicely explained in section 4.6 by S. V. Patankar in his book "Numerical Heat Transfer and Fluid Flow" and section 7.2 in the book Computational Methods for Fluid Dynamics by J. H. Ferziger and M. Peric.

- For staggered (cell-based) solvers like ANSYS FLUENT, values of scalar field variables as well as material properties are stored as cell centres.

- Due to the difference in the way field variables are stored, the simulation with same mesh, material properties and boundary conditions, the y+ value reported in ANSYS CFX will be approximately twice that reported in FLUENT.

Compress Solution Files

The file size of set-up files generated during CFD simulations is relatively large depending upon the number of cells and physics solved. Large files take time to upload/download and even compression-decompression is time consuming. However, compression is only feasible option to minimize issues related to data transfer. On Windows: while creating/splitting zip files using 7zip, there is option to choose the number of threads for parallel processing which can be used to ZIP the file or folder quickly. However, UNZIP operation inherently runs in serial mode. In Linux: "tar -cvzf zippedFolder.tar.gz f1.pdf f2.docx f3.msh dirReports" can be used to compress/tar files and folders together. However, it works in serial mode only. Also, a double precision solver shall produce result files significantly bigger than single precision solver.Single Precision vs. Double Precision

If you try to calculate 21024 in LibreOffice Calc, you will get #Num! which is an indication that the number cannot be stored. 2^1023.99999999999 = 1.79769313485112E+308, if you try 2^1023.999999999994 then the values in the formula shall get changed to 2^1023.99999999999 and calculated value shall get changed to 1.79769313485481E+308. If you try to increase the number of significant digits, maximum you can go up to 1.79769313485481000000E+308. Why is it so? Why LibreOffice Calc is not able to calculate 2^1024? Why it is not able to store more than 14 digits after decimal place? It shall store 9.99999999999999 and any additional digit after decimal shall get truncated or rounded-off. Attempt to type 9.999999999999991 gets truncated to 9.99999999999999, attempt to type 9.999999999999992 gets rounded-off to 10. All these computations are being tried out on a Laptop with 64-bit AMD processor and Ubuntu OS.Few basics before learning how computers store numbers. Bit = 0 or 1, Nibble = 4 bits, Byte = 8 bits, 1 Word = 4 bytes, usigned = positive numbers, left most bit of a byte is called most significant bit and the right most as least significant bit, halfword or double byte contains 16 bits. MSB (Most Significant Bit) is used to indicate if the number is negative or positive: MSB = '1' means '-' and MSB = '0' means '+'. Each character (in ASCII table) requires 8 bits (1 byte) of storage. However, the number 1.23E-4 does not require 7 characters x 1 byte/character = 7 bytes or 28 bits to get stored digitally. So how does a decimal number gets stored digitally?

The paper "On floating point precision in computational fluid dynamics using OpenFOAM" by F. Brogi et. al. gives a real life example of impact of single precision solver on accuracy, convergence and computation time. Other references: amd.com/ ... /single-precision-vs-double-precision-main-differences.html and mathworks.com/ ... /floating-point-numbers.html

| Description | Single-Precision | Double-Precision |

| Overview: x= (−1)s.(1+f).2e | Uses 32 bits of memory to represent a numerical value, with one of the bits representing the sign of mantissa | Uses 64 bits of memory to represent a numerical value, with one of the bits representing the sign of mantissa |

| Base, s: | 0 for positive number, 1 for negative number | |

| Biased exponent, e: | 8 bits used for exponent | 11 bits used for exponent |

| *Fraction or Mantissa, f: precision bits of numbers | Uses 23 bits for mantissa (to represent fractional part) | Uses 52 bits for mantissa (to represent fractional part) |

| Definition in programming languages | Float, Single, int32, uint32 | Real, Double, int64, uint64 |

| Minimum** | -2127 ≈ -3.4E-038 | -21023 ≈ -2.225e-308 |

| Maximum** | 3.40E-38 | 1.798E+308 |

Tips: In case the solver set-up is too big to get accommodated in available RAM size, try switching to single precision version of the solver, at least for the initial run till a machine with higher RAM can be arranged. The file size with single precision version shall be approx. half of that of double precision version. As per Microsoft "Data type summary":

| Data type | Storage size | Range |

| Boolean | 2 bytes | True or False |

| Byte | 1 byte | 0 to 255 |

| Decimal | 14 bytes | +/- 79,228,162,514,264,337,593,543,950,335 with no decimal point +/- 7.9228162514264337593543950335 with 28 places to the right of the decimal. Smallest non-zero number is +/- 0.0000000000000000000000000001 |

| Double* | 8 bytes | -1.79769313486231E308 to -4.94065645841247E-324 for negative values. 4.94065645841247E-324 to 1.79769313486232E308 for positive values |

| Integer | 2 bytes | -32,768 to 32,767 |

| Long integer on 32-bit systems | 4 bytes | -2,147,483,648 to 2,147,483,647 |

| Long integer on 64-bit systems | 8 bytes | -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807 |

| Single* | 4 bytes | -3.402823E38 to -1.401298E-45 for negative values. 1.401298E-45 to 3.402823E38 for positive values |

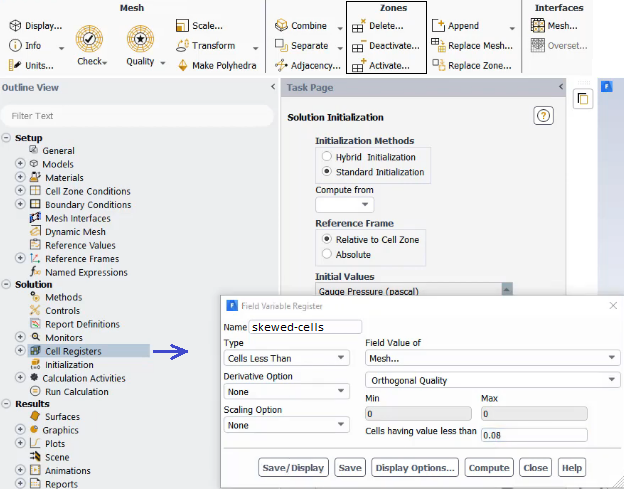

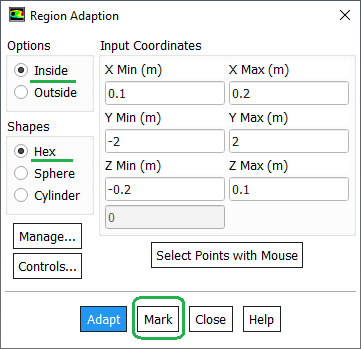

Mark Bad Cells

Separating cells by Mark (Adaptation Register) /mesh/modify-zones/slit-face-zone 3 5 (): slit the faces of wall zones 3 and 5, either delete or deactivate the separated cell zone.To mark and display bad cells, use "Cell Register" from model structure tree and then select 'Mesh' under "Field Value of" drop-down. This option is available only when fields are initialized.

Alternatively, use Adapt Iso-value Mesh Select the property you want to adapt (e.g. Skewness or Orthogonal Quality in this case) Click 'Compute" to get the minimum and maximum value of the property in the domain Set the minimum and maximum bounds to select cells between these bounds. For example, you can set skewness with min 0.95 and max 1.0 to select all cells with a skewness bigger than 0.90 Click Adapt to remesh them.

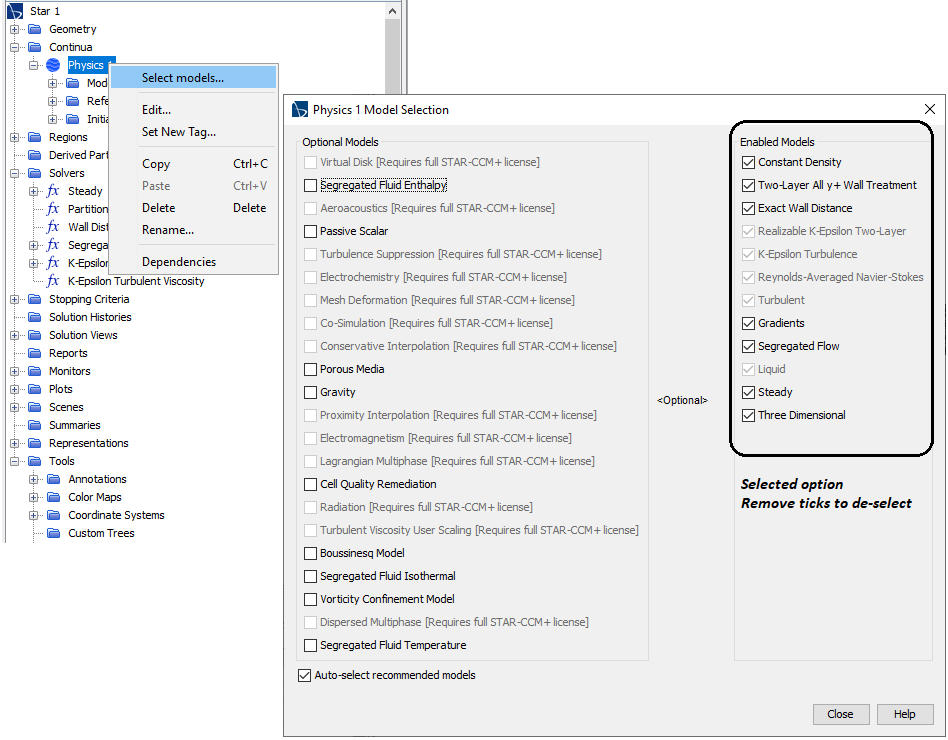

Solver Setting: Physics

The flow regime and classification of laminar, transition and turbulent zones are unique to every flow. One of the most widely used flow condition is flow over a cylinder in cross flow. The following summary is an interesting description of how the flow evolve:- Re ≤ 5, flow is laminar throughout and no vortices are present downstream of the body.

- 5 < Re < 40, a vortex pair can be observed in the wake region though flow is still laminar in the boundary layer.

- 40 ≤ Re ≤ 200, a von Karman vortex street is formed as where pairs of counter-rotating vortices are shed downstream of the body. The vortex shedding frequency is given by a non-dimensional Strouhal number: St = f × D / U.

- 200 ≤ Re ≤ 300, the flow behind a cylinder undergoes transition to a turbulent wake.

- 300 ≤ Re ≤ 3×105, the sub-critical Reynolds number regime wake is turbulent while the boundary layer is still laminar.

- Re > 3×105, a transition from laminar to turbulent boundary layer separation occurs.

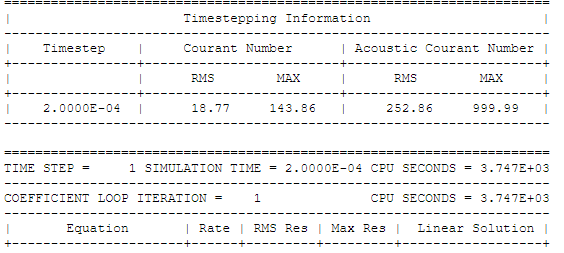

Solver Setting for Transient Simulation: CFL Number

Many a time, it is difficult to decide whether the physical behaviour of system is transient, quasi-transient and steady state. Following example can be used to check if solution should be solved as steady state problem or a transient simulation.

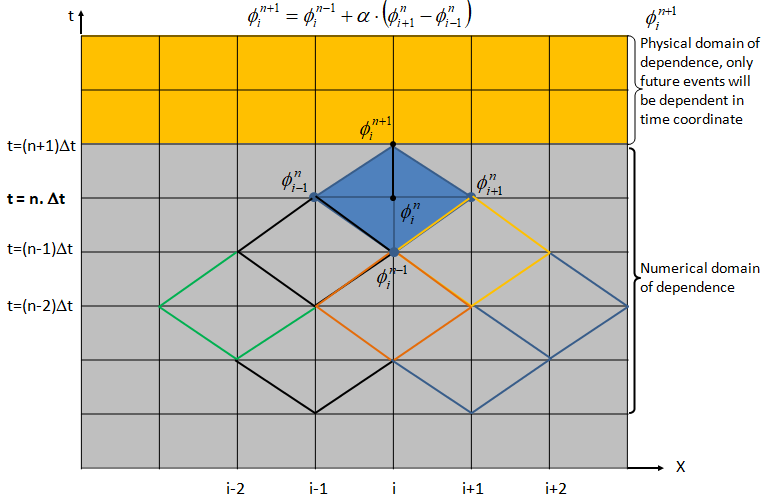

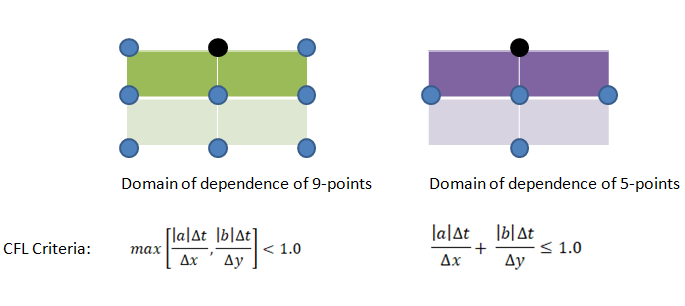

In an explicit Eulerian method, the Courant number cannot be > 1 otherwise it will lead a condition where information is propagating through more than one grid cell at each time step. This is explained in the physical domain of dependence and numerical domain of dependence in following diagram. For a stable solution, numerical domain of dependence must cover the physical (real) domain of dependence.

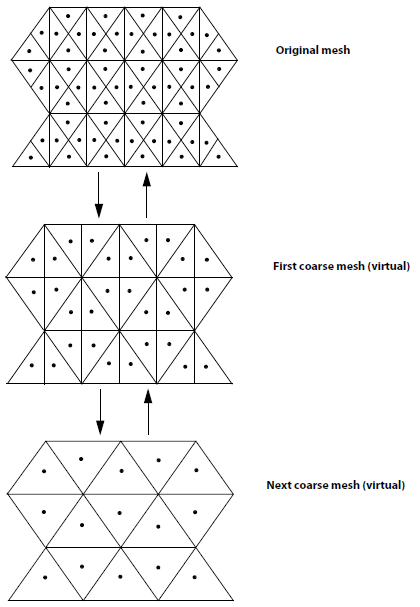

Thus, Courant number can be reduced either by reducing the time step or by increasing the mesh size (i.e. coarsening of the mesh). The later option may appear counter-intuitive though mathematically that remains a fact. It is difficult for most of the simulation engineers to believe the fact that coarsening the mesh help them achieve a better convergence.

- The CFL number scales the time-step sizes that are used for the time-marching scheme of the flow solver.

- A higher value leads to faster convergence but can lead to divergence and unstable simulations. The (stiff) CFL condition can be relaxed or softened by using implicit Euler such as Crank–Nicolson which is unconditionally stable.

- The inverse of this is also true where choosing a smaller value in an unstable simulation improves convergence.

- ANSYS FLUENT uses implicit (first order and second order) formulation for pressure-based solver. As per the user guide: "It is best to select the Coupled pressure-velocity coupling scheme if you are using large time steps to solve your transient flow, or if you have a poor quality mesh."

- In general, the solution at the end of (inner iterations in) current time-step is used as initial value for iterations at next time-step. ANSYS FLUENT provides option to extrapolate the solution variable values for the next time step using a Taylor series expansion. This can improve the convergence of the transient calculations by enabling and the absolute residual levels are lowered during inner iterations.

To verify that your choice for time-step was proper, check contours of the Courant number within the domain. In FLUENT, select Cell Courant Number under Velocity... For a stable and accurate calculation, the Courant number should not exceed a value of 20-40 in most sensitive transient regions of the domain.

Note that CFX reports two types of Courant number:

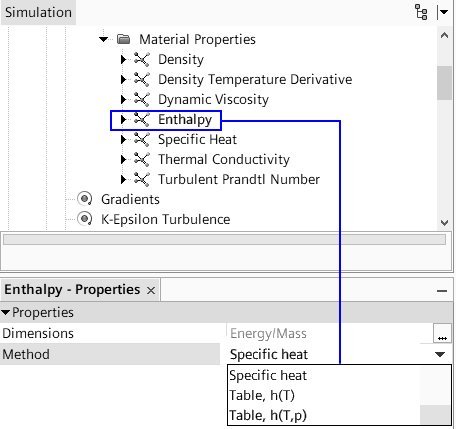

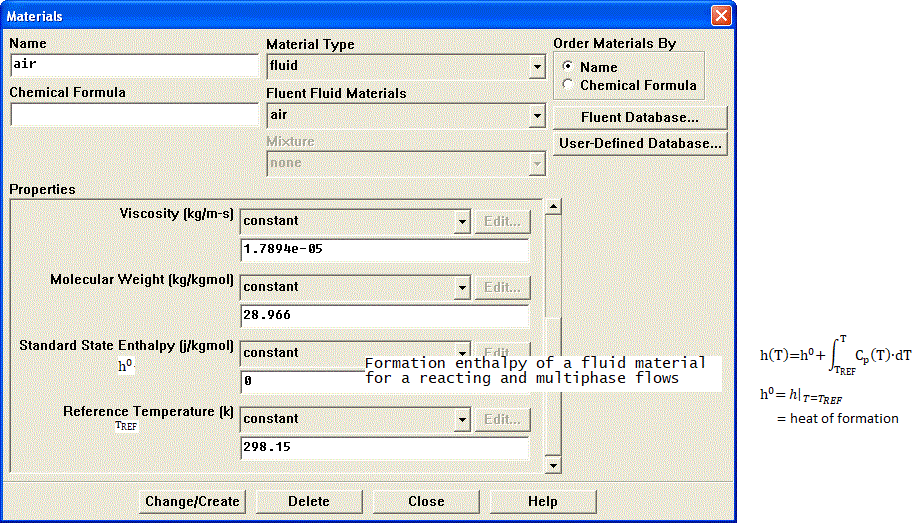

Material Properties

Sometime underlying physics and type of solver chosen governs the material definition. For example, a density based solver requires density to be function of pressure and temperature. Similarly, simulation of Joule-Thomson phenomena (cooling effect due to throttling) requires material properties to be modeled as real-gas. An ideal gas will never cause this effect. The simulation related to steams required careful understanding of state of steam: wet-steam vs. dry steam. An incompressible flow may required only density and viscosity of a fluid whereas phase change and/or reacting mixture phenomena will require definition of reference enthalpy and entropy.Similarly, air can be treated as 4 different ways depending upon application such as:

| Air for common applications | Air in HVAC and Psychrometrics | Air in Hydrocarbon Fuel Combustion | Air in Pneumatic and High Pressure applications |

| Ideal gas with molecular weight = 28.96 [g/mole] | Mixture of gases: Dry air + Water vapour | Mixture of gases: Oxygen, Nitrogen, Water Vapour, Argon | Real Gas with behaviour different from ideal gases |

In some applications such as fans, there are significant reduction in pressure values (such as trailing edge and flow separation regions) as well as significant increase in pressure values (near leading edge). Hence, flow simulations dealing with gas and turbo-machine should use ideal gas law as final settings. Constant density can be used initially to get a convergence. The option of incompressible-ideal-gas available in ANSYS FLUENT makes the density a function of temperature only. This option also does not take into account change in density due to variation of pressure near the blade tip and separation regions.

To get a better accuracy, temperature dependent properties should be used. Some correlations available for gases are tabulated below. The Sutherland coefficients for some common gases are as follows. μ = μ0 * (273+C)/(T+C)*(T/273)1.5 where C is Sutherland coefficient in [K], T is fluid temperature in [K] and μ is viscosity in [Pa.s]. Reference: Handbook of Hydraulic Resistance by I. E. Idelchik.

| Fluid | μ0 [Pa.s] | C [K] |

| Air | 17.12 | 111 |

| N2 | 16.60 | 104 |

| O2 | 19.20 | 125 |

| CO2 | 13.80 | 254 |

| H2O-g | 8.93 | 961 |

| H2 | 8.40 | 71 |

The variation in dynamic viscosity of air in the temperature range 0 to 100 [°C] is of the order of 25% which is a significant amount if the wall friction contributes more to the pressure drop in the system. Similarly, a 5% reduction in local pressure shall cause 5% reduction in density and hence the velocity or volume flow rate has to increase by 5% to ensure the mass balance.

Specific Heat Capacity - Reference: B. G. Kyle, Chemical and Process Thermodynamics - Cp [kJ/kmol-K] ≡ [J/mol-K] = a + b.T + c.T2 + d.T3 where T is in [K]. For air, the coefficients are: a = 28.11 [kJ/kmol-K], b = 1.967E-03 [kJ/kmol-K2], c = 4.802E-06 [kJ/kmol-K3], d = -1.966E-09 [kJ/kmol-K4]. Taking molecular weight of air to be 28.96 [kg/kmol], the coefficients to calculate Cp in [J/kg-K] are: a = 970.65 [J/kg-K], b = 0.06792 [J/kg-K2], c = 1658E-04 [J/kg-K3], d = -6.788E-08 [J/kg-K4]. As you can notice, there is ~1% variation in specific heat capacity of air over the range of 100 [°C] and hence it can be assumed a constant value in calculations and simulations. Also note that for steady state simulations, the specific heat capacity and even density is not needed for solids. This can be inferred from the conduction equation for steady state conditions where only thermal conductivity appears as coefficient.

Thermal Conductivity - Reference: B. G. Kyle, Chemical and Process Thermodynamics - k [W/m-K] = a + b.T + c.T2 + d.T3 where T is in [K]. a = -3.933E-04 [W/m-K], b = 1.018E-04 [W/m-K2], c = -4.857E-08 [W/m-K3], d = 1.521E-11 [W/m-K4]. There is 25% increase in thermal conductivity or air in the temperature range 15 ~ 100 [°C] and hence a constant thermal conductivity may yield lower heat transfer rate.

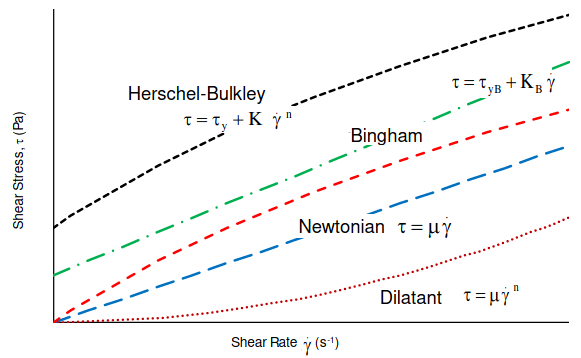

Non-Newtonian Fluids: Most of the fluids behave such that shear stress is linear function of strain rate with zero initial threshold value of shear stress. However, there are some material where shear stress is non-linear function of strain rate with non-zero initial threshold value of shear stress (yield point) before which flow cannot start. Various type of such material also known as Bingham plastic and Herschel-Bulkley are described below.

Use material properties as tabulated data function of p and T: In STAR-CCM+ use "Physics Simulation > Materials > Setting Material Properties Methods > Using Table (T, P)". In ANSYS FLUENT, File > Table File Manager... > Read... In ANSYS FLUENT V24, an expression for density cannot be defined in terms of both the Absolute Pressure and Static Temperature. Error reported is: "Density expression cannot be a function of both Absolute Pressure and Static Temperature".

import numpy as np

def bilinear_interpolation(grid, x_var, y_var, x, y):

'''

Args:

grid (np.array): A 2D array representing the data (dependent variable)

x_var (np.array): 1D array of x - independent variable 1

y_var (np.array): 1D array of y - independent variable 2

x (float): The value of x to interpolate at

y (float): The value of y to interpolate at

Returns: The interpolated value at (x, y).

'''

# Find the grid indices surrounding the point

x_idx = np.searchsorted(x_var, x, side="right") - 1

y_idx = np.searchsorted(y_var, y, side="right") - 1

#Check if interpolation points falls inside data grid

if x_idx<0 or y_idx<0 or x_idx >= len(x_var)-1 or y_idx >= len(y_var)-1:

return np.nan

x1, x2 = x_var[x_idx], x_var[x_idx + 1]

y1, y2 = y_var[y_idx], y_var[y_idx + 1]

# Get the values of the four corners

q11 = grid[y_idx, x_idx]

q12 = grid[y_idx, x_idx + 1]

q21 = grid[y_idx + 1, x_idx]

q22 = grid[y_idx + 1, x_idx + 1]

# Check for division by 0, perform the interpolation

if x2 == x1 or y2 == y1:

return q11

x_weight = (x - x1) / (x2 - x1)

y_weight = (y - y1) / (y2 - y1)

interpolated_value = (

q11 * (1 - x_weight) * (1 - y_weight) +

q12 * x_weight * (1 - y_weight) +

q21 * (1 - x_weight) * y_weight +

q22 * x_weight * y_weight

)

return interpolated_value

grid = np.array([[1, 2, 4], [4, 5, 10], [7, 8, 12]])

x_c = np.array([0, 5, 9])

y_c = np.array([0, 2, 4])

x_interp = 2.5

y_interp = 1.0

interp_value = bilinear_interpolation(grid, x_c, y_c, x_interp, y_interp)

print(f"Interpolated value at ({x_interp}, {y_interp}): {interp_value}")

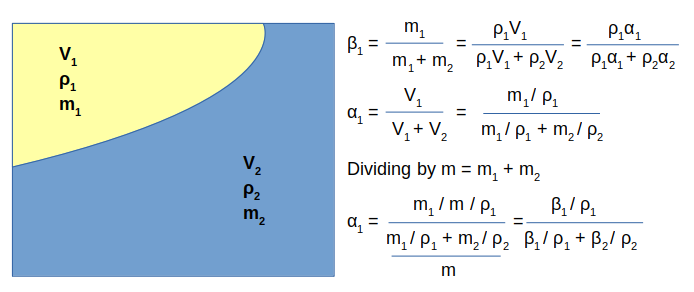

Mass Fraction to Volume (or Mole) Fraction: ensure that the sum of mass fractions add to 1.0, no checks are performed in case they do not. Typically method to check the correctness of following method is to specify same value of mass fractions (0.5 or 50%) and density for both phases, the calculated volume fractions should be equal to 0.50 (50%) for both phases.

| First phase mass fraction [%] and density [kg/m3] | β1: | % | ρ1: | |

| Second phase mass fraction [%] and density [kg/m3] | β2: | % | ρ2: | |

| Volume fraction of the two phases | α1: | % | α2: | % |

Formula to convert mass fraction to volume fraction and vice versa:

Volume Fraction to Mass Fraction: ensure that the sum of volume fractions add to 1.0, no checks are performed in case they do not.

| First phase volume fraction [%] and density [kg/m3] | α1: | % | ρ1: | |

| Second phase mass fraction [%] and density [kg/m3] | α2: | % | ρ2: | |

| Mass fraction of the two phases | β1: | % | β2: | % |

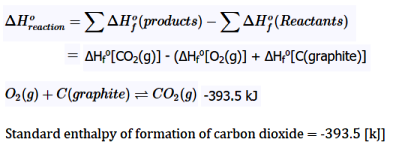

The value of ΔH0 will be negative if the process is endothermic (that is the reaction for material formation requires external heat energy) or positive if the process is exothermic (if heat is released during formation or reaction).

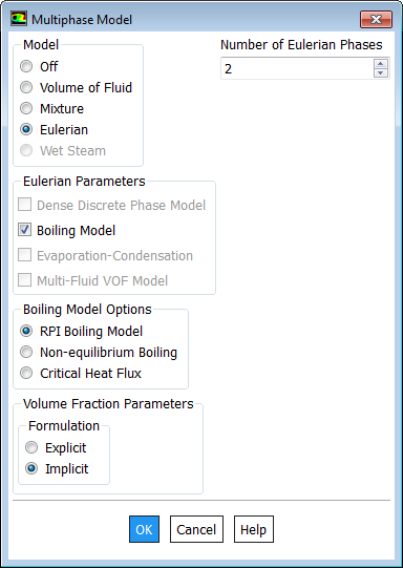

In situations with phase change such as forced convection subcooled nucleate boiling, the standard state enthalpies of vapor and liquid phase are set such that their difference equals to latent heat of vapourization = 2.992E+07 [J/kmole]. The unit of standard state enthalpy is [kJ/kmol] and usually the latent heat value is available in [kJ/kg]. The latest heat value in [kJ/mole] should be multiplied by molecular wight of the liquid. For correct specification of latent heat, the reference temperature should be set to 298.15 [K] or 25 [°C]. Note that it is a positive value (in case of boiling simulation) as compared to standard state enthalpies of species such as CO2 and CH4 (which are negative by sign convection) used in species transport and combustion modeling.

In phase change phenomena (evaporation and/or condensation), the difference between actual temperature of the phase and saturation conditions are described as degree of sub-cooling (liquid) and degree of super-heating (gas). If the inlet temperature of liquid is T = 350 [K] and the saturation temperature TSAT = 373 [K], liquid state is terms as sub-cooled and with the degree of sub-cooling = 373 - 350 = 23 [K]. In a nucleate boiling, when the wall temperature rises above the liquid saturation temperature, steam bubbles will get formed and migrate away from the wall. Since the bulk flow (liquid far away from the wall) is sub-cooled, these bubbles condense near the center of the pipe.

Wet Steam: The steam with droplets of water is known as wet steam and it characterized by dryness fraction. Most of the boilers do not generate dry steam as steam bubbles breaking through the heated surface will pull tiny water droplets along with it. In general, when a substance exists partly as liquid and partly as vapour (gas) phase, its quality is defined as the ratio of the mass of vapor to the total mass. On the hand, mass fraction of the condensed phase is known as wetness factor.

Dry Steam: Saturated or superheated steam are known as dry steam.

For water, the triple point T = 0.01 [°C] and P = 0.6113 [kPa], is selected as the reference state where the internal energy, enthalpy and entropy of saturated liquid are assigned a zero value. Similarly, saturated refrigerant R-134a is specified enthalpy of 0 [J/kg] at temperature -40 [°C] and pressure of 51.25 [kPa]. Thus, standard state enthalpy of liquid water is 0 and reference temperature is 273.15 [K]. The standard state enthalpy of steam is heat of vaporization at T = 0.01 [°C] and P = 0.6113 [kPa] and equals 2501 [kJ/kg]. The molecular weight of water is 18.0153 [kg/kmole] and hence the standard state enthalpy of steam is (2501 × 18.0153) [kJ/kmole]. The saturation pressure of water as per ASHRAE: p(T in [K]) in [Pa] = exp[c1 / T + c2 + c3 * T + c4 * T2 + c5 * T3 + c6 * Ln(T)] where c1 = -5800.22, c2 = +1.3914993E+00, c3 = -4.8640239E−02, c4 = +4.1764768E−05, c5 = −1.4452093E−08, c6 = +6.54597.Porous Domains

Flow geometry such as heat exchangers with closely spaced fins, honeycomb flow passages in a catalytic converters, screens or perforated plates used as protection cover at the from of a tractor engine ... are too complicated to model as it is. They are simplified with equivalent performance characteristic, knowns as Δp-Q curve. These curves are either generated using empirical correlations from textbooks or using a CFD simulation for small, periodic / symmetric flow arrangement. The simplified computational domain is known as "porous zone" in case it is represented as a 3D volume or pressure or porous jump in case it is represented as a plane of zero thickness. In a similar fashion, the performance data of a fan can be specified including the swirl component.All the porous media formulation take the form: Δp = -L × (A.v + B.v2) where v is the 'superficial' flow velocity and negative sign refers to the fact that pressure decreases along the flow direction. The 'superficial velocity' is calculated assuming there is no blockage of the flow. L is the thickness of the porous domain in the direction of the flow. Here, A and B are coefficients of viscous and inertial resistances.

In FLUENT, the equation used is: Δp/L = -(μ/α.v + C2.ρ/2.v2) where α is known as 'permeability' and μ is the dynamic viscosity of fluid flowing through the porous domain. This (permeability) is a measure of flow resistance and has unit of [m2]. Other unit of measurement is the darcy [1 darcy = 0.987 μm2], named after the French scientist who discovered the phenomenon.

STAR-CCM+ uses the expression Δp/L = -(Pv.v + Pi.v2) for a porous domain.

The pressure drop is usually specified as Δp = ζ/2·ρ·v2 where ζ is 'equivalent loss coefficient' and is dimensionless. Darcy expressed the pressure gradient in the porous media as v = -[K/μ]·dP/dL where 'K' is the permeability and 'v' is the superficial velocity or the apparent velocity determined by dividing the flow rate by the cross-sectional area across which fluid is flowing.Steps to find out viscous and inertial resistances:

- Calculate the pressure drop vs. flow velocity data [Δp-v] from empirical correlations or wind-tunnel test or simplified CFD simulations.

- Divide the pressure drop value with thickness of the porous domain. Let's name it as [Δp'-v curve].

- Calculate the quadratic polynomial curve fit coefficients [A, B] from the curve Δp'-v. Ensure that the intercept to the y-axis is zero.

- In STAR-CCM+ these coefficients 'A' and 'B' can be directly used as Pv and Pi which are viscous and inertial resistance coefficients respectively.

- Divide 'A' by dynamics viscosity of the fluid to get inverse of permeability that is 1/α [m-2] to be used in ANSYS FLUENT as "viscous resistance coefficient" [m-1].

- Divide 'B' by [0.5ρ] where ρ is the density of fluid, to get C2 to be used in ANSYS FLUENT as "inertial resistance coefficient".

- The method needs to be repeated for the other 3 directions. If the flow is primarily one-directional, the resistances in other two directions need to be set to a very high value, typically 3 order of magnitude higher.

In case porous domain is not aligned to any coordinate direction, the direction of unit vector along the flow and across the flow directions can be estimated from following Javascript program. Note that empty field is considered as 0.0. There is no check if a text value is specified in the input fields and the calculator will result in an error.

| First point - X1: | |

| First point - Y1: | |

| First point - Z1: | |

| Second point - X2: | |

| Second point - Y2: | |

| Second point - Z2: | |

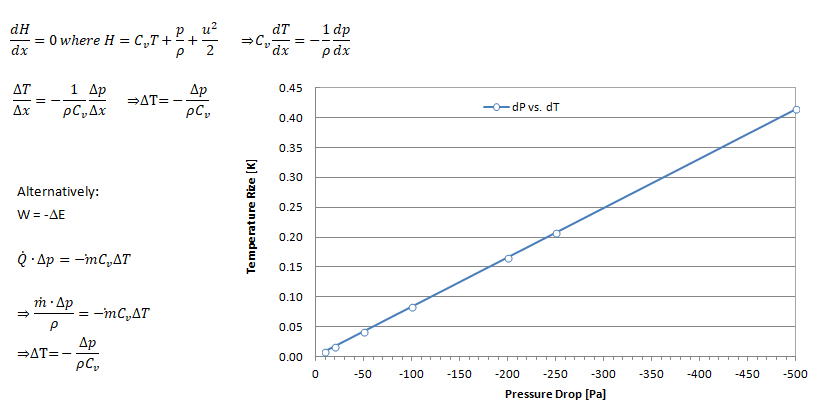

Temperature Drop across a Porous Domain

If flow through the porous domain is assumed (a) incompressible, one-dimension, steady state and (b) pressure drop varies linearly with the flow direction x:

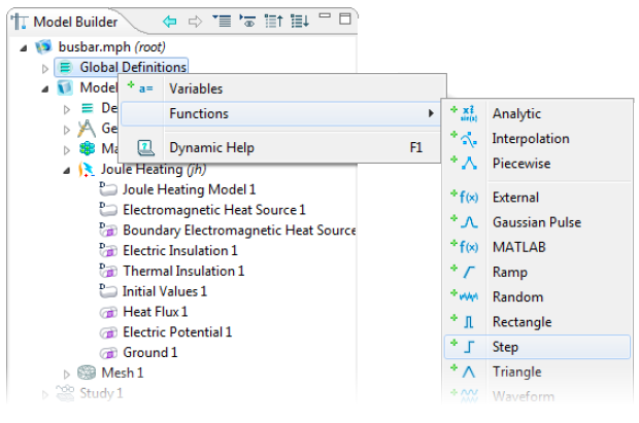

Named Expressions

The newer versions of program FLUENT and STAR-CCM+ have option to use named expression and field functions for customized boundary conditions. This eliminate need to write an UDF (User Defined Function). For example, following 5 expressions can be used to define inlet mass flow rate (kg/s) as function of static pressure (Pa) at outlet where mass flow rate is a parabolic function of pressure mdot [kg/s] = C0 [kg/s] + C1 [m.s] × pr + C2 [m^2 s^3 kg^-1] × pr2. Here pr = MassFlowAve(StaticPressure, ['Outlet']).Radiation Modeling

The selection of Radiation Model is based on optical thickness = a x L where L is typical length scale and a absorption coefficient. OpenFOAM provides three radiation models: P1 model, fvDOM (finite volume discrete ordinates model) and viewFactor model.If a x L >> 1 then P1 model. If a × L < 1, use of fvDOM is recommended. Since fvDOM also captures the large optical length scales, it is the most accurate model though it is computationally more intensive since it solves the transport equation for each direction. fvDOM can handle non gray surfaces (dependence of radiation on the the solid angle is included). viewFactor method is recommended if non-participating mediums are present (spacecraft, solar radiation).

ANSYS FLUENT offer following radiation models: Rosseland, P1, Discrete Transfer (DTRM), Surface to Surface (S2S) and Discrete Ordinates (DO). P1, DO, or Rosseland radiation model in ANSYS FLUENT can be used in cases of participating media (where the fluid participated in radiative heat transfer, example exhaust smoke). It is required to define both the absorption and scattering coefficients of the fluid. When using the Rosseland model or the P1 model, the refractive index for the fluid material only should be defined.

TUI for Radiation Setting: /define/models/radiation discrete-ordinate? , , , , where comma is used to accept default values. The 4 inputs needed are (1)Number of θ divisions (2)Number of φ division (3)Number of θ pixels and (4)Number of φ pixels.

The TUI to define emissivities for the walls is: /define/b-c/wall <wall name> , , , , , , , , , 0.40 , , , , where the emissivity is 0.40 and comma is used to accept default values. This TUI command sets the wall as OPAQUE. Also note that the TUI operation does not accept multiple wall zones and hence the setting has to be provided using a loop on multiple face zones of type wall.Solid Cell Zones Conditions for the DO Model: With the DO model, you can specify whether or not you want to solve for radiation in each cell zone in the domain. By default, the DO equations are solved in all fluid zones, but not in any solid zones. If you want to model semi-transparent media, for example, you can enable radiation in the solid zone(s). To do so, enable the Participates In Radiation option in the Solid dialogue box

Initial Condition

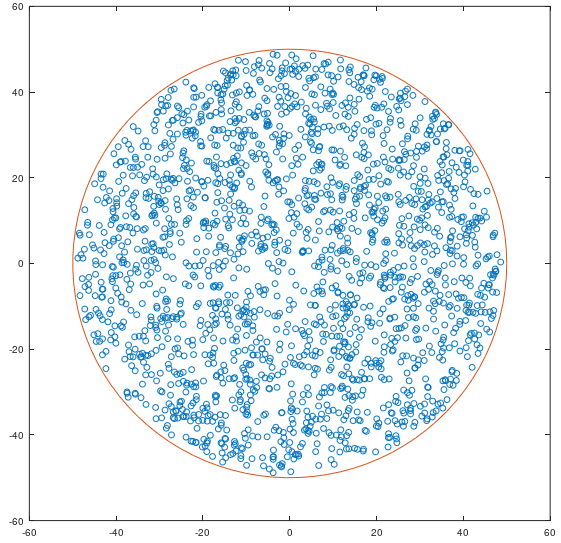

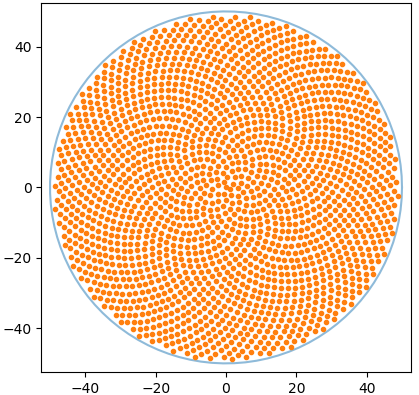

The field variables (u, v, w, p, T, k , ε...) need to be specified certain starting value. It can all be set to 'ZERO' in their respective units or some other physically realistic values. In CFD simulation process, it is called 'initialization' which can be domain (volume specific) or global (applicable to entire computational nodes). For steady-state simulation, the initial condition is a 'wise guess' of the converged steady-state solution. In transient simulations, the initial condition provides the (actual) spatial distribution at t = 0 and hence temporal starting point for the simulation. Thus, for transient simulations, the intermediate result will be strongly influenced the initial condition.In case of multi-phase flow, an initial distribution of volume fraction of the constituents is important. The method to set initial conditions in ANSYS FLUENT is called patching which requires regions to be marked as shown below. In OpenFOAM setFields (requires setFieldsDict) utility is used to initialize field variables in various zones.

Volumetric Heat Sources

ANSYS FLUENT expects heat sources in terms of heat density that is [W/m3] whereas STAR-CCM+ can take directly the heat generation rate [W] for a given volume. In order to check that the total applied heat generated rate is equal to the expected value, initialize the flow field and then calculate heat transfer rate from "Reports -> Fluxes" option. ANSYS FLUENT shall print the total "User Source" that is the total heat generation rate defined by all defined volumetric heat sources.A sample SCHEME script to apply volumetric heat source of 500 [W/m3] to a zone named htr-ss and material defined as steel is as follows: define b-c solid htr-ss yes steel y 1 y 500 n no n 0 n 0 n 0 n 0 n 0 n 0 n no n. The energy should be turned ON and material name should be present before this script is run in TUI. This script is not applicable for rotating reference frames.

Many times, material properties and volumetric heat source are function of pressure and temperature. They might be available in tabular format and a curve fit may not be possible to get a good fit. A user code in STAR-CCM+ is available at "community.sw.siemens.com/.../User-Coding-for-2-dimensional-T-p-Table-interpolation-Linux-OS" which can be adapted for ANSYS FLUENT as well.Set Solution Limits

In ANSYS FLUENT, limiting values for pressure, static temperature, and turbulence quantities can be set to keep the absolute pressure or the static temperature from becoming 0, negative, or excessively large during the calculation. However, there are no static temperature and absolute pressure solution limits for incompressible flows such as those with constant density gases.Define Monitor Points

It is not known in advance how many iterations a particular set-up would require to get fully converged. Using a default high number of iterations say 5000 iterations may end in unnecessary use of computational power. It is strongly advised to use a monitor point such as inlet pressure (when mass flow rate or velocity inlet boundary condition is used) as convergence criteria instead of using only the equation residuals. ANSYS FLUENT has option to specify number of iterations to be used to create a report definition. This can be used in following ways to define convergence criteria.Option-1: Create report definition say rep_200 to compute average inlet static pressure over last 200 iterations. Create another report definition rep_010 to average inlet static pressure over last 10 iterations. Use the third report definition rep_dP = abs(rep_200 - rep_010). The solution can be marked converged if rep_dP ≤ 0.1 [Pa]

Option-2: Create report definition say rep_002 to compute average inlet static pressure over last 2 iterations. Create another report definition rep_001 to get inlet static pressure at last iteration. Use the third report definition dp_frac = abs(rep_002 - rep_001) / rep_001 * 100. The solution can be marked converged if dp_frac ≤ 0.1%

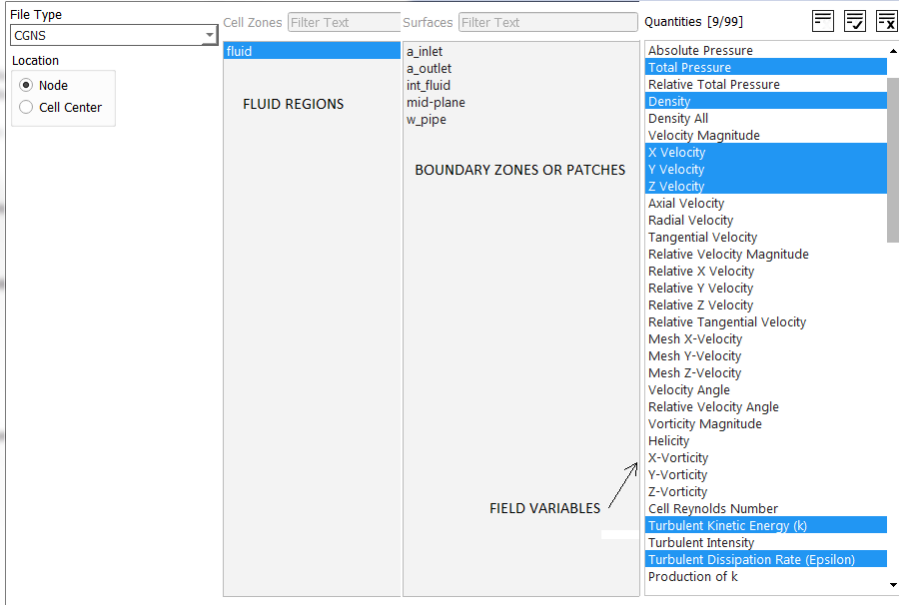

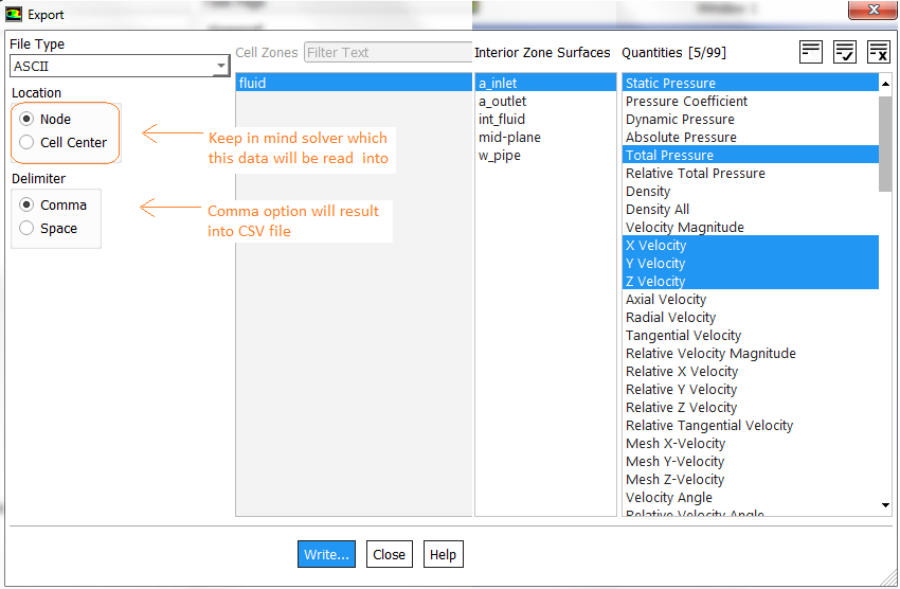

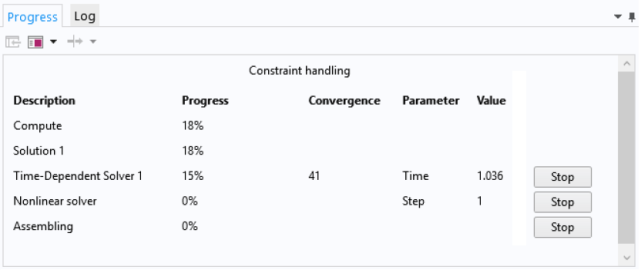

Convergence History

The residuals of continuity, momentum (u, v and w), energy (T), k and ε is used to check the convergence of iterative solution process. Convergence refers to the situation where the calculated value is assumed to tend to correct inversion of equation [A]{x}={b}. To find the cell(s) related to maximum residual, use the TUI: solve/set/expert yes yes no. Here the answers yes, no and not are responses to the questions "Save cell residuals for post-processing? [no]", Keep temporary solver memory from being freed? [no]" and "Allow selection of all applicable discretization schemes? [no]" respectively. To store the residuals in *.dat file, adjust the data file quantities using "File > Data File Quantities" option. This option is available (as on V24R1) in Legacy format only and both cas.gz / dat.gz files should be loaded. The Data File Quantities gets active after script is run in TUI console. If run is already completed, make one iteration so that the specific data fields are created.

Sometimes k (designated as Tke in STAR CCM+) and and ε (referred to as Tdr in STAR CCM+) may be as high as few thousand and the results are reasonable. The scale can be reduced by turning off the normalization for k and ε. Normalization takes the very first iteration's values to normalize all subsequent residuals. When solution already start with a good solution or guess value for k and ε, the first value is already very low, and residuals may not reduce further. It then sometimes can happen that the residual increases instead of decreasing even though the absolute (without normalization) value might still be very low.

In ANSYS FLUENT, the denominator to calculate the scaled residual for the continuity equation of a pressure-based solver is the largest absolute value of the continuity residual in the first 5 iterations. When iteration starts with a good solution, the largest residual of first 5 iterations may be low. Hence in subsequent iterations, significant reduction in order of magnitude of the residuals may not be observed even though the convergence can be deemed to be of good accuracy. Note 'scaling' and 'normalization' of residuals are different and interpretation should be different as well.

Under Relaxation Factors (URF): As per ANSYS documentation and training material, Pressure and Momentum URFs MUST sum to 1.00 and flow stability is best with smaller pressure URF (0.03 is not uncommon).Thin Walls

Natural Convection (Buoyancy-driven Flows)

Boussinesq approximation: ρEFF = 1 - β(TWALL - TREF). Here: ρEFF= effective driving density β = coefficient of thermal expansion (equal to 1/T for ideal gas, T in [K]). Boussinesq approximation is only valid for β × (TWALL - TREF) << 1.0 say β × (TWALL - TREF) < 0.10. In other words, the temperature difference should be < 30 [K] for reference temperature of 300 [K].- Use Boussinesq approximation to start the simulation. Specify correct density and expansion factor of the fluid

- Initialize the domain with small velocity say 0.02 or 0.05 [m/s] in the direction opposite to the gravity

- Alternatively, solve a force convection flow with small velocity is expected inlet(s) and then switch on to natural convection mode

- Turn-on radiation once flow and temperature fields have stabilized

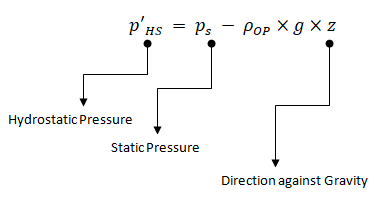

As per FLUENT User's Manual: "The boundary pressures that you input at pressure inlet and outlet boundaries are the redefined pressures as given by equation above. In general you should enter equal pressures, p'HS at the inlet and exit boundaries of your ANSYS FLUENT model if there are no externally-imposed pressure gradients. For example, if you are solving a natural-convection problem with a pressure boundary, it is important to understand that the pressure you are specifying is p'HS. Therefore, you should explicitly specify the operating density rather than use the computed average. The specified value should, however, be representative of the average value. In some cases the specification of an operating density will improve convergence behaviour, rather than the actual results. For such cases use the approximate bulk density value as the operating density and be sure that the value you choose is appropriate for the characteristic temperature in the domain."

Refer the document at "innovationspace.ansys.com/.../setting-operating-density-for-natural-convection-cases-when-density-is-modelled-using-ideal-gas-law" to appropriate boundary condition settings.

Mesh Reordering - Node Renumbering

Both elements and nodes are numbered where elements are described as a set of nodes forming its vertices. The more compact is the arrangement of elements and nodes, lesser will be the memory requirements. Some terms associated with elements and node arrangements in a mesh are as follows.

- Bandwidth: It is the maximum difference between neighbouring cells in a zone i.e. if each cell in the zone is numbered in increasing order sequentially, bandwidth is the maximum differences between these indices.

- Excerpt from user manual - FLUENT: Since most of the computational loops are over faces, you would like the two cells in memory cache at the same time to reduce cache and/or disk swapping i.e. you want the cells near each other in memory to reduce the cost of memory access.

- In general, the faces and cells are reordered so that neighboring cells are near each other in the zone and in memory resulting in a more diagonal matrix that is non-zero elements are closer to diagonal. Refer to "banded-matrices" in context with numerical simulations.

Solver Settings for Multi-Phase Flows

Multi-phase flows have wide applications in process, automotive, power generation and metal industries including phenomena like mixing, particle-laden flows, CSTR - Contunuously Stirred Tanks Reactor, Water Gas Shift Reaction (WGSR), fluidized bed, fuel injection in engines, bubble columns, mixer vessels, Lagrangian Particle Tracking (LPT). Some of the general characteristics and categories of multi-phase flow are described below along with setting parameters in ANSYS FLUENT.

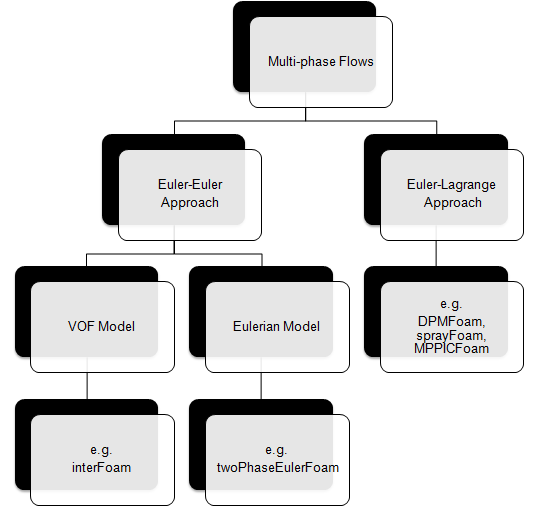

Multiphase flow regimes are typically grouped into five categories: gas-liquid (which are naturally immiscible) flows and (immiscible) liquid-liquid flows, gas-solid flows, liquid-solid flows, three or more phase flows. As can be seen, the immiscibility is a important criteria. In a multi-phase flow, one of the phase is usually continuous and the other phase(s) are dispersed in it. The adjective "Lagrangian" indicates that it relates to the phenomena of tracking a moving points ("fluid particles") - such as tracking a moving vehicle on a road. On the other hand, the adjective "Eulerian" is used to describe correlations between two fixed points in a fixed frame of reference - such as counting the type of vehicles and their speeds while passing through a fixed point on the road.

Gas-liquid flows are further grouped into many categories depending upon the distribution and shape of gas parcels. Three such types are described below.Bubbly Flow: it represents a flow of discrete gaseous or fluid bubbles in a continuous phase.

From FLUENT User Guide V12: "For fluid-fluid flows, each secondary phase is assumed to form droplets or bubbles. This has an impact on how each of the fluids is assigned to a particular phase. For example, in flows where there are unequal amounts of two fluids, the predominant fluid should be modeled as the primary fluid, since the sparser fluid is more likely to form droplets or bubbles."

Some other types of flows are particle-laden flow such as air carrying dust particles, slurry flow where particles are transported in a liquid, hydro-transport which describes densely-distributed solid particles in a continuous liquid such as cement concrete mix. Gas assisted mixing of solid such as fluidized-bed and settling tank where particles tend to sediment near the bottom of the tank forming thick sludge are some other examples of multi-phase flows.

The two dominant method of multi-phase simulations are listed below.

- A phase cannot be occupied by the other phases in space, the concept of "phasic volume fraction" is used and assumed to be continuous functions of space and time. Distribution of phases are plotted by volume fraction whereas in DPM (Euler-Lagrange approach) the distribution of phases are plotted by particle track.

- Globally, the sum of this phasic volume fraction equals to one.

- Conservation equations for each phase are derived as a set of equations having similar structure for all phases, analogous of momentum equations.

- These equations are closed by constitutive relations obtained from empirical information.

Atomization and Sprays

This formation of droplets from a liquid jet is known as Atomization. One of the dimensionless parameters that governs the formation of droplet is Weber Number. We = [ρCP × u2 × λ / σ] where ρCP is the density of primary fluid (fluid in which droplet is moving), λ is radial integral length scale (d/8 based on particle diameter) and σ is the surface tension between liquid and surrounding gas. For single-phase nozzles and primary breakup distribution of droplets, estimate for the Sauter mean diameter of droplets as per Wu et al. (1992): d32 = 133 × We−0.74. The derivation of Weber number on first principles are as follows.

- Aerodynamic force on the droplet, FA = 0.5 x CDRAG x π/4 x DP2 x ρCP x VCP2 where CDRAG = Drag Coefficient, DP = Particle Diameter, ρCP = Density of Continuous Phase, VCP = Relative velocity of continuous phase over particle.

- Cohesive or interfacial tension force, Fσ = π x DP x σ

- Weber Number, We = [8 x FA] / [CDRAG . Fσ] = ρCP × VCP2 × DP / σ

The Sauter mean diameter (SMD) is the diameter of a sphere that has the same volume to surface area ratio as the total volume of all the drops to the total surface area of all the drops. As per ANSYS (2016) Theory Guide, the most probable droplet diameter is d0 = 1.27 × d32 (1 − 1/n)1/n where n is the spread parameter (n = 3.5 for a single-phase nozzle).

When larger liquid droplets move beyond certain speed, the surface stress acting on them make the droplets become unstable and break into finer (smaller) droplets. "Critical Weber number in the range 10 ~ 20 are sufficient to cause aerosolization of small orifice diameter nozzles. [Ref: Brown and York: Sprays Formed by Flashing Liquid Jets]." Inside gas-liquid separation equipments, situations exist where the gas phase leaves the separation interface with high velocities and carry liquid phase along with it in the form of droplets. This is known as entrainment or carry over.

As per paper "Memoryless drop breakup in turbulence", "The theoretical foundations for the breakup of fluid particles larger than the viscous scale in turbulence (η = ν3/4/ε1/4, where ε is the kinetic energy dissipation and ν the kinematic viscosity of the carrier fluid) were laid out by Kolmogorov and Hinze." The article further says: "Breakup is inhibited when the diameter falls below the corresponding critical (Kolmogorov-Hinze) diameter dKH = C x (ρ / σ)−3/5 x ε−2/5 where C is a constant. Hinze fitted this equation to experimental measurements of d95, the diameter below which 95% of the volume of the disperse phase is contained, and obtained good agreement for C = 0.725." Here ρ is the density of the carrier phase and σ is the surface tension. ε = Cμ0.75 × k1.5/L where Cμ is an empirical constant = 0.09, and L is the length scale of turbulence producing eddies in the flow. k = 3/2 * [UMEAN * I]2 and I=0.16 / ReDH1/8. The size of largest eddies are approximated to be equal to the hydraulic diameter (sometimes also called as integral length scale).

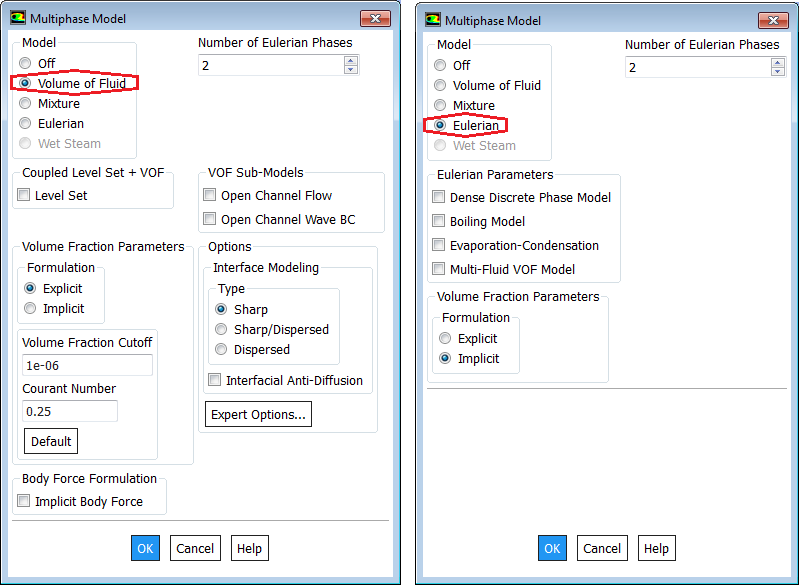

Improve convergence behavior of Eulerian Model

Reference: FLUENT User Guide V12- Compute an initial solution before solving the complete Eulerian multiphase model. There are three methods you can use to obtain an initial solution for an Eulerian multiphase calculation:

- Set up and solve the problem using the mixture model (with slip velocities) instead of the Eulerian model. You can then enable the Eulerian model, complete the setup, and continue the calculation with Eulerian Model using the mixture-model solution as a starting point.

- Set up the Eulerian multiphase calculation as usual, but compute the flow for only the primary phase. To do this, deselect Volume Fraction in the Equations list in the Equations dialogue box. Once you have obtained an initial solution for the primary phase, turn the volume fraction equations back on and continue the calculation for all phases.

- Use the mass flow inlet boundary condition to initialize the flow conditions. It is recommended that you set the value of the volume fraction close to the value of the volume fraction at the inlet.

- You should not try to use a single-phase solution obtained without the mixture or Eulerian model as a starting point for an Eulerian multiphase calculation. Doing so will not improve convergence, and may make it even more difficult for the flow to converge.

- At the beginning of the solution, a lower time step is recommended to obtain convergence. If using explicit scheme for the volume fraction, start with a lower Courant number.

- If using the volume fraction explicit scheme, start a run with a lower time step and then increase the time step size. Alternatively, this could be done by using variable time stepping, which would increase the time step size based on the input parameters. Variable time stepping is not recommended for compressible flows.

- For problems involving a free surface or sharp interfaces between the phases, it is recommended to use the symmetric drag law.

Particle Depositions

Particles tend to deposit on solar panels, turbine blades and even rotating blades of ceiling and exhaust fans in our houses. They gradually grow in thickness and density and remain stuck the blades till they are mechanically wiped away. This deposition predictions is computed in terms of sticking efficiency which tend to be high at lower diameter and low at higher diameters of the particles. The sticking efficiency is also dependent on particle Young's modulus and temperature of the surface where deposition is likely to occur. Most commercial programs such as ANSYS FLUENT has built-in boundary conditions to define the phenomena when particle strikes a boundary face. However, none of these boundary conditions accurately represent the particle-wall interaction which governs the rate of deposition and the deposition phenomena itself.

- Reflect – elastic or inelastic collision

- Trap – particle is trapped at the wall

- Escape – particle escapes through the boundary

- Wall-jet – particle spray acts as a jet with high Weber number and no liquid film

- Wall-film – stick, rebound, spread and flash based on impact energy and wall temperature

- Interior – particle passes through an internal boundary zone

The collision phenomena is defined in terms of coefficient of restitution which is the ratio of the particle rebound velocity to the particle normal velocity. As the particle normal velocity decreases, the particle rebound velocity decreases and eventually reaches a point where no rebound occurs and the particle is captured. This velocity at which capture of a particle occurs is known as the capture/critical velocity. Brach and Dunn (59) formulated an expression to calculate the capture velocity of a particle using a semi-empirical model. In this model, the capture velocity of the particle was calculated based on the experimental data and is given as follows: VCRIT = (2E/DP)1.428 where E is the composite Young's modulus which is determined based on the Young's modulus of the particle and that of the wall.

Coefficient of restitution also needs to be applied on walls in a Discrete Phase Modeling (DPM) when the particles get reflected after hitting the wall. en = normal coefficient of restitution, et = tangential coefficient of restitution, α1 = angle of impingement, α2 = angle of reflection, u1 = velocity of impingement, u2 = velocity of reflection. u2.sin(α2) = en u1.sin(α1) and u2.cos(α2) = et u1.cos(α1).

De-fogging and De-icing

Condensation is a phenomenon that occurs when the headlamp is submitted to cold and moist environments. This will sometimes lead to fogging of the headlamps outer lens. ANSYS provides a tutorial on Windshield De-fogging Analysis using EWF Model where EWF = Eulerian Wall Film. Also, it is reported in "CFD Modelling of Headlamp Condensation" - Master’s Thesis in Automotive Engineering by Johan BrunbeRG and Mikael Aspelin, De-Fogging Module (DFM) was at first developed for de-fogging and de-icing simulations for HVAC applications. The DFM is written as a User-defined Function (UDF) and provided by ANSYS. Some assumptions and simplifications are made in the DFM and stated below:- Condensation will only occur at specified surfaces

- The condensation film is fully contained by the first layer of cells adjacent to the specified walls

- Condensation mass transfer rates are determined only by the gradient of water vapour mass fraction in the cells containing the condensation film

- The condensation film is stationary

- The inlet relative humidity is constant in time

- Gravity and surface tension effects of the condensation film is neglected

- The Diffusion coefficient of water vapour in moist air is known as a function of local pressure and temperature, Mollier chart or the Bahrenburg approach

- Inlet and outlet mass flow rates

Excerpt from "CFD modeling of annular flow for prediction of the liquid film behaviour" by MARIA CAMACHO: "Annular flow is characterized by gas flowing as a continuous phase in the middle of a channel while liquid is present as dispersed droplets in the gas and as a liquid film at the walls of the surface. The liquid film mass is constantly changing due to three main interactions: deposition of liquid droplets onto the liquid film surface, liquid film evaporation on the liquid-gas interface and liquid film entrainment as droplets into the vapour flow." Mist flow: liquid phase is present only as dispersed droplets across the continuous vapour phase.

Discrete Phase Model: DPM

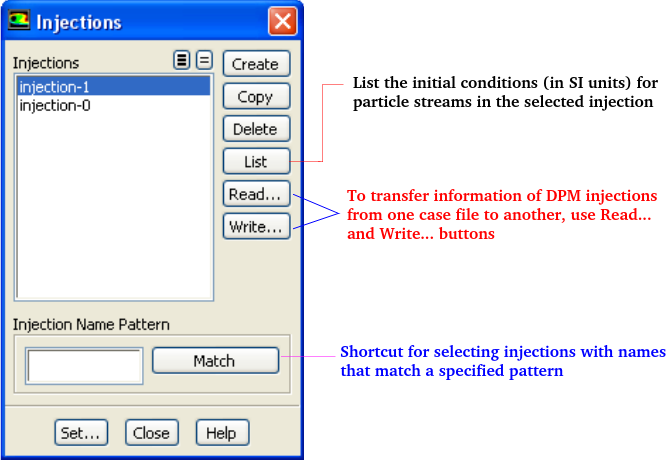

In Lagrangian particle tracking (Euler-Lagrangian approach), the discrete or dispersed phase comprises of particles and bubbles. While the term 'particle' is used to represent the dispersed phase, it inherently refers to a 'parcel' or 'agglomeration' or 'assemblage' of similar particles. Note: in ANSYS FLUENT, in steady-state discrete phase modeling, particles do not interact with each other and are tracked one at a time in the domain. Even when in reality droplets or solid particles are individual physical particles, ANSYS Fluent does not track each physical particle but groups of particles known as parcels. Thus, the model tracks a number of parcels, and each parcel is representative of a fraction of the total mass flow released in a time step. The number of "actual physical" particles in a parcel is adjusted in such a way that it satisfies the mass flow rate.The primary inputs that a user must provide for DPM calculations are the initial conditions that define the starting positions, velocities, parameters for each particle stream and the physical effects acting on the particle streams. Initial conditions of a particle/droplet stream is specified by creating an 'injection' and by specifying its attributes. The initial conditions describe the instantaneous conditions of an individual particle which are:

- position (x, y, z coordinates) of the particles or parcels

- velocities (u, v, w) of the particle. For cone injections, velocity magnitudes and spray cone angle are used to define the initial velocities

- diameters of the particle

- temperature of the particle

- mass flow rate of the particle stream that will follow the trajectory of the individual particle/droplet though it is required only for coupled calculations

- additional parameters for special injections such as atomizer models

The particle positions are always computed when particles enter/leave a cell even if a very large length scale is specified. The time step used for integration will be such that the cell is traversed in one step.

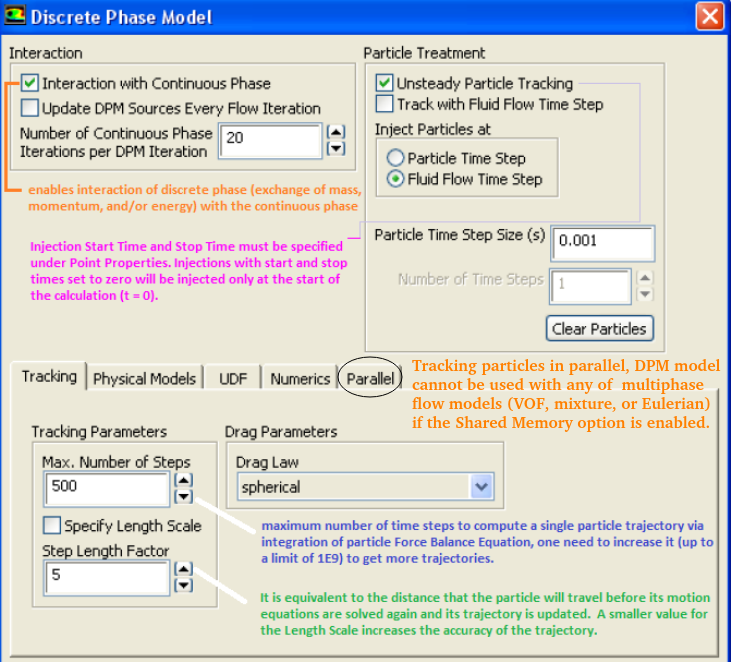

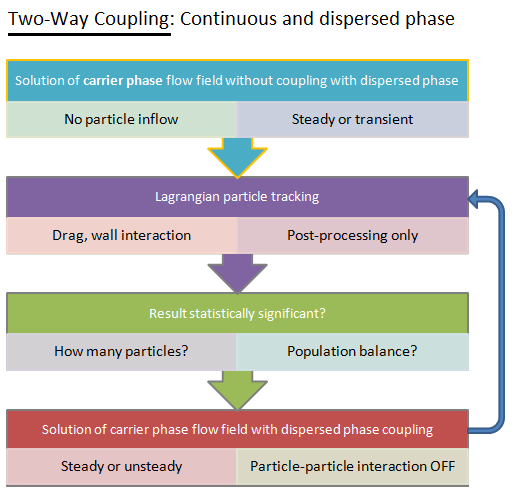

Also, if "Number Of Continuous Phase Iterations Per DPM Iteration" field is reduced, the coupling between dispersed and continuous flow increases and vice-versa. For a closer coupling, this value should be set to a value ≤ 5 though no unique upper limit exists. Increasing the under-relaxation factor (setting value closer to 1) for discrete phase improves coupling and reducing the under-relaxation factor (setting value away from 1 and closer to 0) for discrete phase weakens the coupling.

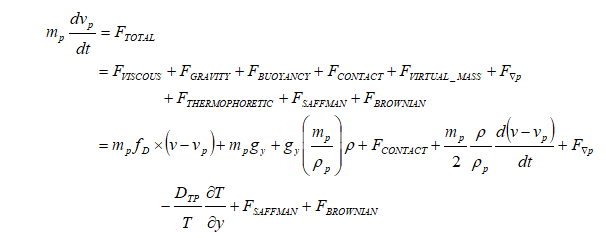

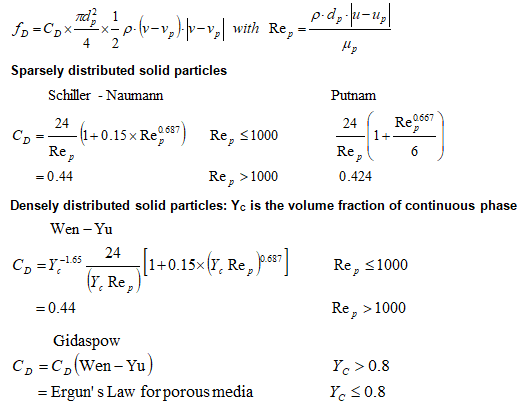

DPM Trajectory Equation

ANSYS FLUENT calculates drag coefficient of non-spherical particle using shape factor defined as [s/S] where 's' is the surface area of a hypothetical sphere having the same volume as the particle and 'S' is the actual surface area of the particle.

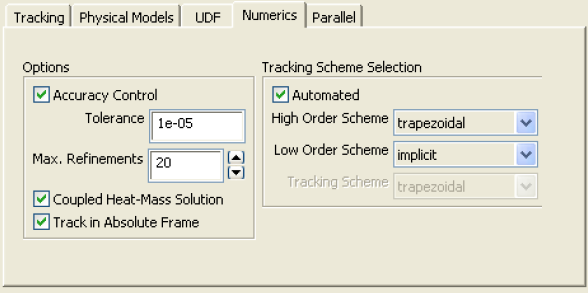

As explained above, the underlying physics of DPM is described by ordinary differential equations (ODE) as opposed to the Navier-Stokes equation for continuous flow which is expressed in the form of partial differential equations (PDE). Therefore, DPM can have its own numerical mechanisms and discretization schemes, which can be completely different from other numerics (such as discretization schemes) to solve the governing equations. The Numerics tab gives users control over the numerical schemes for particle tracking as well as solutions of heat and mass equations related to particles - continuous phase interactions.

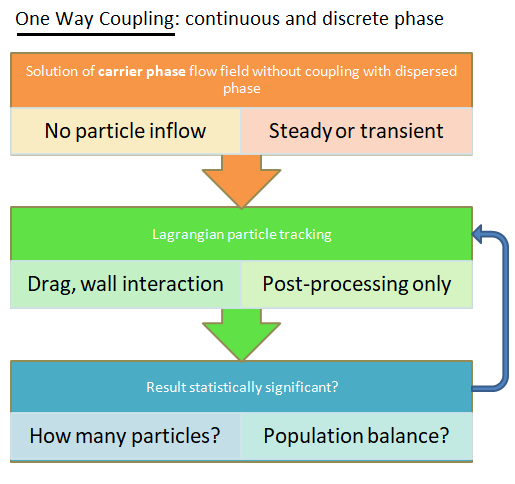

Type of coupling: continuous (carrier) phase and discrete phase

- no collisions at all (used for dilute suspensions): one-way or two-way coupling, Newton's equation of motion for dispersed phase.

- 3-way coupling - soft-sphere approach (the discrete element method, DEM): the instantaneous inter-particle contact forces due to oblique collisions (the normal, damping and sliding forces) are computed using equivalent mechanical elements springs, dashpots and sliders respectively. This approach requires a very small time step which can be as low as < 1 [μs].

- 3-way coupling - hard-sphere approach: interactions between particles are pair-wise (binary collision), instantaneous, momentum-conserving. Faster than soft-sphere method due to feasibility to use longer time steps.

In ANSYS FLUENT, the DDPM option is available only under Eulerian multi-phase model.

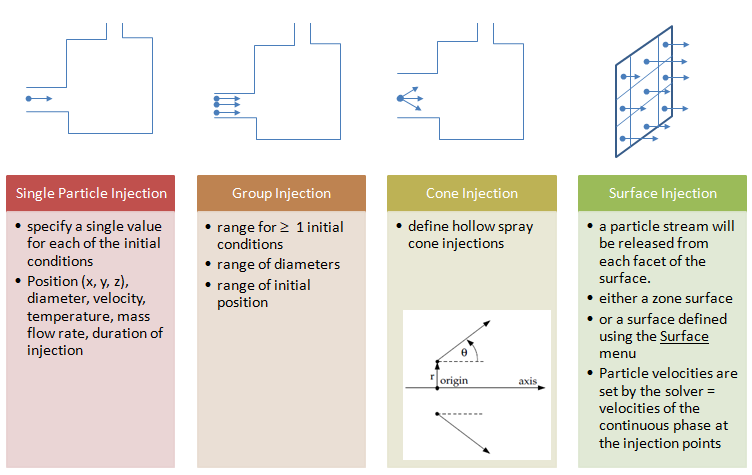

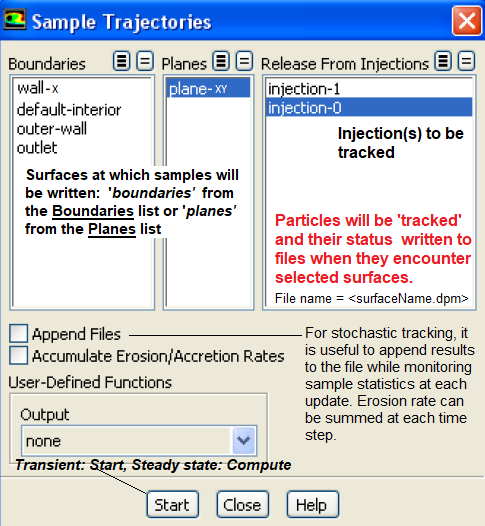

Type of Particle Injections

For typical applications, the computational cost of tracking all of the particles individually is prohibitive. Instead, the approach adopted in DPM and Discrete Element Method (DEM) Collision Model is that like particles are divided into parcels and then the position of each parcel is determined by tracking a single representative particle. Thus, in such calculations, following differences between DPM and DEM are mentioned in FLUENT user manual.- The mass used in the DEM calculations of the collisions is that of the entire parcel, not just that of the single representative particle.

- The radius of the DEM parcel is that of a sphere whose volume is the mass of the entire parcel divided by the particle density.

Group injections requires to set the properties position, velocity, diameter, temperature and and flow rate for the First Point (φ1) and Last Point (φN) in the group. ANSYS FLUENT assigns a value of φi to the i-th injection in the group using a linear variation between the first and last values for φ. The specified flow rate is defined per particle stream and can also be interpolated linearly between mass flow rates of the first and last injection points.

In ANSYS FLUENT, particle initial conditions (position, velocity, diameter, temperature and mass flow rate) can also be read from an external file to describe injection distribution. The file has the following form with all of the parameters in SI units. All variables inside the parentheses are required, name is optional.

(( x y z u v w diameter temperature mass-flow) name)

Sample files generated during sampling of trajectories (path Reports → Sample → Set-up...) for steady particles can also be used as injection files since they have a similar file format. Note that one line in the file = one parcel, 10 lines in the file = 10 parcels, 100 lines in the file = 100 parcels ... "parcels" = groups of particles which have similar properties (diameter, velocity, temperature) as each particle. Parcels are used as computationally it is impossible to track very large number say millions of particles. The mass-flow of the parcel = mass-flow of the group of particles. Generally the more parcels you use, the more accurate the solution shall be.

Mass flow rate: Only for coupled calculations (discrete phase affecting flow field of continuous phase), the mass of particles per unit time that follows the trajectory can be defined in the injection setting option. Also note that the number of streams (say 100) is not the same as the number of particles. The DPM uses a parcel method where many particles are tracked in each parcel and ANSYS FLUENT allocates the number of particles in each parcel based on the particle diameter, density and mass flow rate. Number of particles in each parcel NP = mPS × Δt / mP where mPS = mass flow rate in particle stream, mP = mass of each particle and Δt is the time step. Under steady state operations, it is assumed that any particle released from the same location with the same conditions will follow the same trajectory. P_FLOW_RATE macro can be used in a UDF to return the flow rate of a parcel in [kg/s].

Example: particle release from a surface - assuming there are 200 faces in the surface.

- The particle injected is sand with density of 2000 [kg/m3] and diameter 250 [μm]. Thus, mass of each particle is 8.18 [μg]

- Total mass flow rate of sand particles = 0.1 [kg/s].

- Total number of particles = 0.10 [kg/s] / 8.18×10-9 [kg] = 1.22×109 [particles/s]

- Thus, the 200 trajectories releases from 200 faces of the surface represents 1.22×109 actual [particles/s].

- If one defines a range of diameters by specifying "minimum diameter", "maximum diameter" and "number of diameters", the total number of trajectories computed will increase to 200 × "number of diameters".

- If stochastic tracking is switched on to account for Turbulent Dispersion and number of tries specified is 20, now the total number of trajectories computed will be 200 × "number of diameters" × "number of tries".

Number of Particle Streams: This option controls the number of droplet parcels that are introduced into the domain at every time step.